As machine learning models continue to grow in complexity and use, the demand for explainable AI (XAI) has skyrocketed. XAI aims to help us understand, interpret, and trust the decisions made by machine learning models.

Among the popular tools to achieve this are SHAP, LIME, and several other XAI frameworks designed to illuminate the “black box” of AI decision-making.

In this article, we’ll explore what makes these tools unique, how they compare, and which might be the best fit for various applications. Let’s dive in!

What Is Explainable AI (XAI) and Why Does It Matter?

Defining Explainable AI (XAI)

Explainable AI (XAI) encompasses methods that make machine learning models more transparent. Complex models—like deep neural networks or ensemble methods—are often difficult to interpret, even for experts. XAI tools aim to clarify these decisions, allowing users to see what’s driving outcomes.

Why does this matter? AI now influences high-stakes sectors like healthcare, finance, and criminal justice. Misinterpreting a model’s decision can lead to harmful outcomes, and unexplained decisions erode trust in the system. That’s why interpretability and transparency are no longer “nice-to-haves” but essential.

The Core Goals of XAI

In general, XAI frameworks aim to:

- Increase trust in model decisions by making them understandable.

- Provide insights into model biases or weaknesses.

- Enhance model debugging and improvement.

- Facilitate compliance with ethical and legal standards (e.g., GDPR).

The ultimate goal of XAI is to help stakeholders, from engineers to end users, understand AI behavior with confidence.

SHAP: The Power of Shapley Values in Interpretability

What Is SHAP?

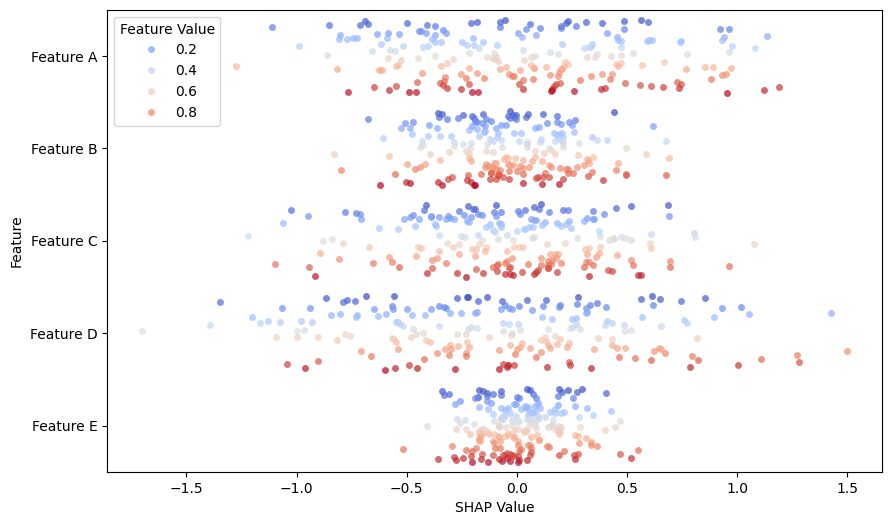

SHAP (SHapley Additive exPlanations) is a popular XAI technique based on Shapley values, a concept from cooperative game theory. The Shapley values evaluate each feature’s contribution to the model’s prediction, giving a sense of “feature importance.” SHAP aims to distribute the “payoff” (or prediction) fairly among features, indicating how much each feature contributed to the output.

SHAP offers consistent, theoretically grounded explanations by measuring each feature’s average marginal contribution across all possible subsets (coalitions) of the other features.
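To make the idea concrete, here is a minimal sketch that computes exact Shapley values by enumerating every feature subset. The toy `model`, the instance `x`, and the all-zero `baseline` are hypothetical, and "removing" a feature by swapping in its baseline value is a simplifying assumption. This is not how the SHAP library is implemented; it only illustrates the underlying formula, and the enumeration is exponential in the number of features.

```python
from itertools import combinations
from math import factorial

def model(x):
    # Hypothetical black-box model with an interaction between features 0 and 1.
    return 2.0 * x[0] + x[0] * x[1] + 0.5 * x[2]

def value(subset, x, baseline):
    # Features outside the subset are "absent": they take their baseline value
    # (a simplifying assumption for this sketch).
    mixed = [x[i] if i in subset else baseline[i] for i in range(len(x))]
    return model(mixed)

def shapley_values(x, baseline):
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}, x, baseline) - value(s, x, baseline))
    return phi

x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(x, baseline)
print("Shapley values:", phi)
print("Sum of values:", sum(phi), "| prediction gap:", model(x) - model(baseline))
```

The last line checks the additivity property: the contributions sum to the difference between the prediction for `x` and the prediction for the baseline. Note how the interaction term is split evenly between the two features involved.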

Key Strengths of SHAP

- Theoretically Sound: SHAP explanations are grounded in Shapley values, ensuring each feature’s contribution is additive and fair.

- Global and Local Interpretability: SHAP can explain individual predictions (local) and broader model behavior (global).

- Visualizations: SHAP provides rich visuals, like beeswarm plots and summary plots, to make interpretability more accessible.

Limitations of SHAP

While SHAP provides powerful insights, it can be computationally expensive, especially with complex models. Calculating Shapley values for every feature and instance may be prohibitive for large datasets or deep neural networks.

LIME: Local Interpretability for Model Decisions

What Is LIME?

LIME (Local Interpretable Model-agnostic Explanations) is another widely used XAI method that focuses on local interpretability. LIME approximates a black-box model with a simpler interpretable model around a specific instance to understand what’s driving that prediction. It essentially answers, “Why did the model make this particular decision?”

LIME is model-agnostic, meaning it can be applied to any classifier or regressor without needing to know the model’s inner workings.
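The local-surrogate idea can be sketched in a few lines. The example below is a LIME-style illustration under stated assumptions, not the LIME library itself: the `black_box` function, the Gaussian perturbation `scale`, the exponential kernel, and the `kernel_width` are all hypothetical choices. It perturbs an instance, weights each perturbed sample by its proximity to the instance, and fits a weighted linear model whose slopes act as the explanation.

```python
import math
import random

def black_box(x):
    # Hypothetical non-linear model standing in for the real black box.
    return x[0] ** 2 + 3.0 * x[1]

def solve_linear_system(a, b):
    # Gaussian elimination with partial pivoting for a small dense system.
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        sol[r] = (m[r][n] - sum(m[r][c] * sol[c] for c in range(r + 1, n))) / m[r][r]
    return sol

def local_surrogate(instance, n_samples=2000, scale=0.5, kernel_width=0.75, seed=0):
    rng = random.Random(seed)
    d = len(instance)
    # Accumulate the weighted normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]          # perturbed sample
        dist2 = sum((zi - vi) ** 2 for zi, vi in zip(z, instance))
        w = math.exp(-dist2 / kernel_width ** 2)                   # proximity weight
        row = [1.0] + [zi - vi for zi, vi in zip(z, instance)]     # intercept + offsets
        y = black_box(z)
        for i in range(d + 1):
            xtwy[i] += w * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += w * row[i] * row[j]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[0], beta[1:]

intercept, slopes = local_surrogate([1.0, 2.0])
print("Local slopes near [1.0, 2.0]:", slopes)
```

Around the instance `[1.0, 2.0]`, the slopes should land close to the black box’s local gradient (2 for the first feature, 3 for the second). Changing `seed`, `scale`, or `kernel_width` shifts the result slightly, which is a small-scale view of why LIME explanations can vary between runs.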

Key Strengths of LIME

- Model-agnostic: LIME works with virtually any type of model, from simple linear regression to complex neural networks.

- Focuses on Individual Explanations: By creating interpretable explanations for single predictions, LIME is especially useful for understanding why certain instances were classified in a particular way.

- Flexibility: LIME allows for tuning and experimenting with different interpretable models to best match the black-box behavior.

Limitations of LIME

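LIME’s main downside is that it focuses on local explanations, which may not represent the overall model behavior. Additionally, because LIME fits its surrogate on randomly sampled perturbations, explanations for the same instance can vary between runs and with settings such as the kernel width, especially when the data is noisy. This can lead to inconsistent interpretations.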

Comparing SHAP and LIME: Strengths and Use Cases

Key Differences Between SHAP and LIME

While both SHAP and LIME are popular XAI techniques, they have notable differences:

- Interpretability Scope: SHAP provides global and local explanations, while LIME primarily offers local interpretability.

- Consistency: SHAP is based on Shapley values, ensuring a fair allocation of feature contributions. LIME approximates black-box behavior, which can lead to less consistent results.

- Computation: SHAP can be resource-intensive, whereas LIME is generally faster but less reliable in some cases.

Best Use Cases for SHAP

- When detailed and theoretically grounded explanations are needed.

- For high-stakes applications where precise interpretability is critical, such as medical diagnoses or financial predictions.

- When global and local insights are both required.

Best Use Cases for LIME

- Quick insights into why a model made a specific prediction, especially for instance-specific explanations.

- When working with model-agnostic frameworks, as LIME doesn’t require model-specific information.

- Situations where computational resources are limited, and faster results are needed.

| Category | SHAP | LIME |
|---|---|---|
| Interpretability Scope | Global & Local: SHAP can provide insights at both the global model and individual prediction levels. | Local Only: LIME focuses on explaining individual predictions and doesn’t offer a global view. |
| Computation Speed | Slower: SHAP’s calculations can be computationally intensive, especially for large models. | Faster: LIME generally requires fewer computations, making it faster for individual explanations. |
| Model Requirements | Flexible: Works with any model type but is especially efficient with tree-based models due to optimized algorithms. | Flexible: Can explain any model type, as it perturbs the input data and fits a simple surrogate model to the black box’s predictions on those perturbations. |
| Typical Applications | Tabular, Text, & Image: Frequently used for complex models in structured data and image analysis, especially with deep learning models. | Tabular & Text: Commonly applied to simpler models in structured data or text, often in fields requiring quick local interpretability. |


Other XAI Techniques: Partial Dependence Plots, Integrated Gradients, and Counterfactual Explanations

Partial Dependence Plots (PDPs)

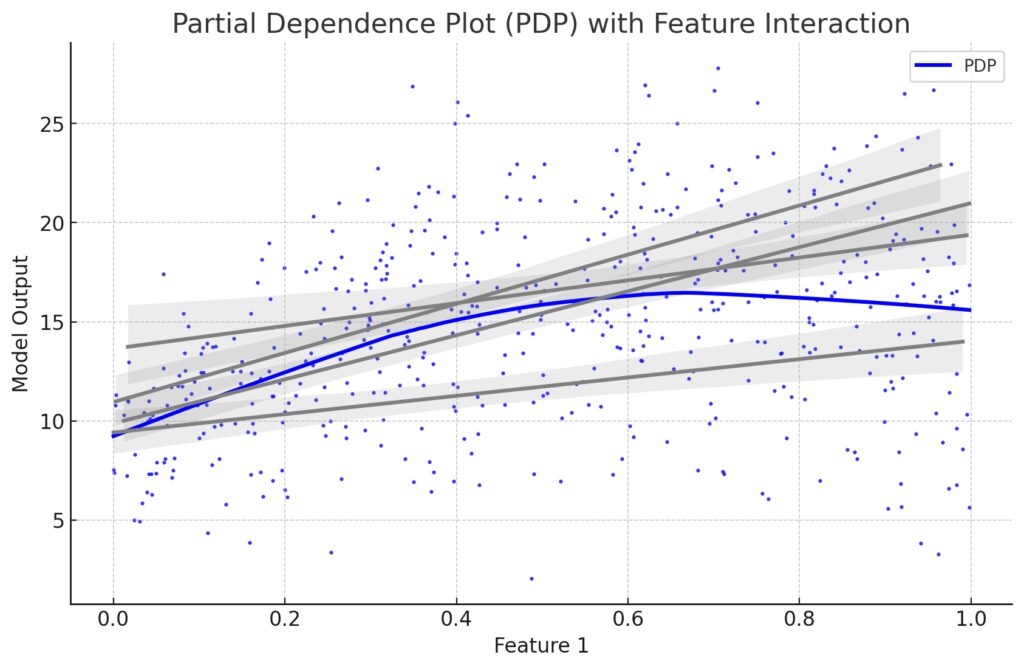

Partial Dependence Plots show the relationship between selected features and the predicted outcome by averaging the model’s predictions over the observed values of all other features. PDPs are particularly useful for interpreting global behavior by illustrating how changes in specific features impact model predictions across a dataset.
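As a rough sketch of how a partial dependence curve is computed, the snippet below forces one feature to each value on a grid, leaves the other features at their observed values, and averages the predictions over the dataset. The `model`, the tiny `data` set, and the `grid` are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def model(row):
    # Hypothetical fitted model: additive in feature 0 and feature 1.
    return 2.0 * row[0] + row[1]

def partial_dependence(predict, data, feature, grid):
    curve = []
    for value in grid:
        total = 0.0
        for row in data:
            modified = list(row)
            modified[feature] = value    # force the feature of interest to the grid value
            total += predict(modified)   # other features keep their observed values
        curve.append(total / len(data))  # average prediction over the dataset
    return curve

data = [[0.0, 1.0], [1.0, 3.0], [2.0, 5.0], [3.0, 7.0]]
grid = [0.0, 1.0, 2.0]
pd_curve = partial_dependence(model, data, feature=0, grid=grid)
print(list(zip(grid, pd_curve)))
```

Because this toy model is additive, the curve is a straight line with slope 2. With strong interactions, the averaging step can hide how the effect of one feature depends on another, which is the limitation discussed below.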

Strengths

- Great for visualizing feature impact across the entire dataset.

- Ideal for simpler models, like decision trees or linear models.

Limitations

- Limited in representing interactions among features.

- May struggle with high-dimensional or non-linear models where interactions play a significant role.

Blue Curve: Main PDP for Feature 1, with a smooth trend line representing its influence on model output.

Gray Lines: Represent interaction effects with different values of Feature 2, indicating variations in predictions when both features are considered.

This visualization highlights how Feature 1 impacts the model, while the additional lines reveal potential interactions, helping to interpret complex feature relationships.

Integrated Gradients

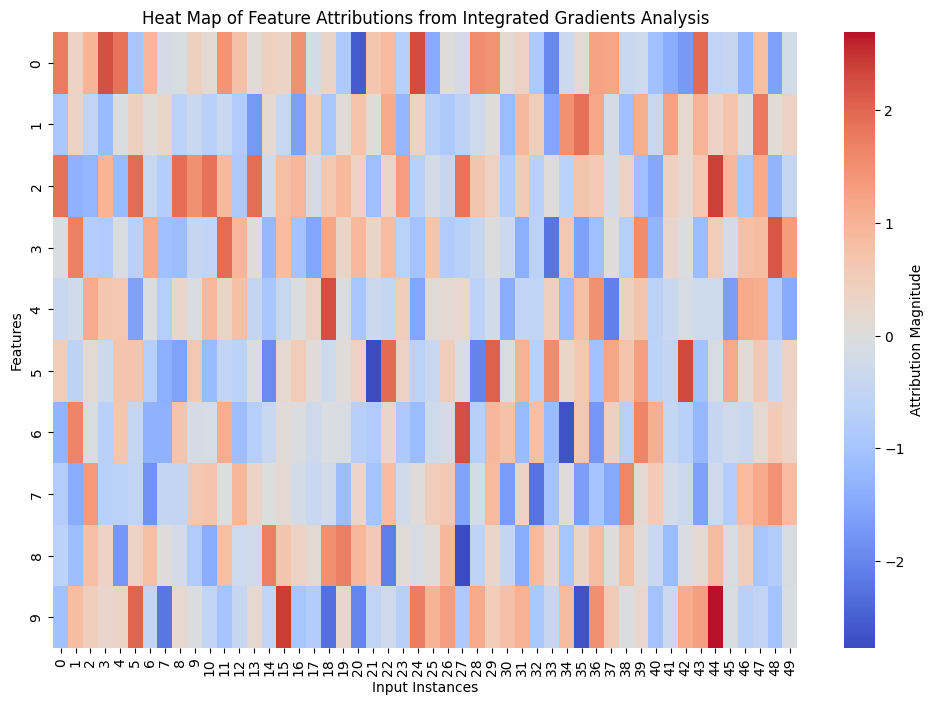

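Integrated Gradients is an attribution technique for differentiable models such as neural networks. It compares the input to a baseline (for example, an all-zero input), averages the gradient of the model’s output with respect to each input feature along the straight-line path from the baseline to the input, and scales that average by the difference between input and baseline. The resulting attributions add up to the difference between the prediction for the input and the prediction for the baseline, which makes for robust local explanations of complex models.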
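Here is a minimal sketch of the computation, assuming a hypothetical single logistic unit as the model, a hand-written gradient, an all-zero baseline, and a midpoint Riemann sum with 200 steps to approximate the path integral. Real deep learning frameworks would obtain the gradient through automatic differentiation instead.

```python
import math

def model(x):
    # Hypothetical differentiable model: a logistic unit over two inputs.
    z = 1.5 * x[0] - 2.0 * x[1] + 0.5
    return 1.0 / (1.0 + math.exp(-z))

def gradient(x):
    # Analytic gradient of the logistic unit with respect to each input.
    p = model(x)
    return [1.5 * p * (1.0 - p), -2.0 * p * (1.0 - p)]

def integrated_gradients(x, baseline, steps=200):
    n = len(x)
    avg_grad = [0.0] * n
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of each sub-interval on the path
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        g = gradient(point)
        for i in range(n):
            avg_grad[i] += g[i] / steps
    # Scale the path-averaged gradient by the input-minus-baseline difference.
    return [(xi - b) * gi for xi, b, gi in zip(x, baseline, avg_grad)]

x = [2.0, 1.0]
baseline = [0.0, 0.0]
attributions = integrated_gradients(x, baseline)
print("Attributions:", attributions)
print("Sum:", sum(attributions), "| prediction gap:", model(x) - model(baseline))
```

The final line checks that the attributions sum (approximately) to the gap between the two predictions. The choice of baseline is a modeling decision, and a different baseline will generally give different attributions.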

Strengths

- Effective for explaining neural networks and deep learning models.

- Offers smooth, continuous insights on how input features influence predictions.

Limitations

- Works only with differentiable models, making it unsuitable for many traditional models.

- Complex to implement and may require significant computational power.

Rows: Represent individual features.

Columns: Represent different input instances.

Color Gradient: Shows attribution magnitudes, with blue for negative influences and red for positive influences, indicating each feature’s impact direction on the prediction.

Counterfactual Explanations

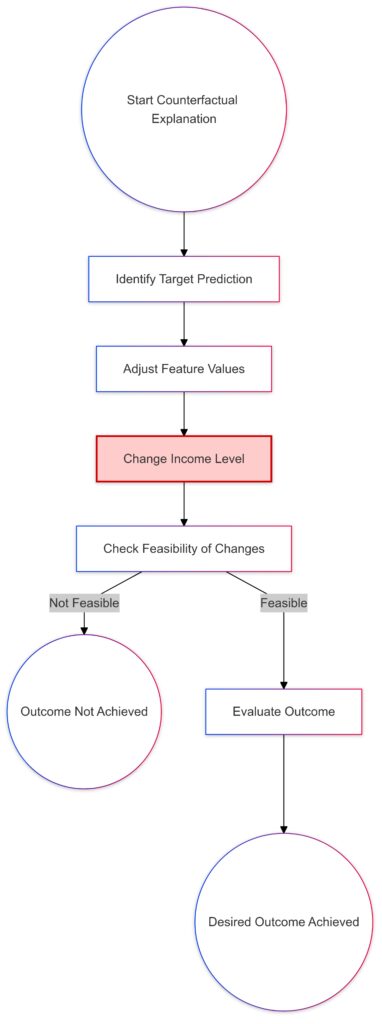

Counterfactual Explanations explore “what if” scenarios to illustrate how small changes in features could alter the prediction outcome. For instance, a counterfactual explanation could reveal that if a loan applicant’s income were $500 higher, they might have been approved.
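The loan example can be sketched as a simple search. Everything here is hypothetical: the `approve_loan` rule stands in for a trained classifier, the applicant’s numbers are invented, and the search varies only income in fixed steps. Practical counterfactual methods search over several features at once and add plausibility constraints.

```python
def approve_loan(applicant):
    # Hypothetical decision rule standing in for a trained classifier.
    return applicant["income"] - 2_000 * applicant["open_debts"] >= 50_000

def find_income_counterfactual(applicant, step=100, max_increase=20_000):
    """Return the smallest income increase (in multiples of `step`) that flips
    a rejection into an approval, or None if none exists within the limit."""
    if approve_loan(applicant):
        return 0  # already approved, no change needed
    for increase in range(step, max_increase + step, step):
        candidate = dict(applicant, income=applicant["income"] + increase)
        if approve_loan(candidate):
            return increase
    return None  # no feasible counterfactual within the allowed range

applicant = {"income": 53_500, "open_debts": 2}
needed = find_income_counterfactual(applicant)
print("Approved today:", approve_loan(applicant))
print("Income increase needed for approval:", needed)
```

The `max_increase` limit plays the role of a feasibility check: if the required change is larger than what is realistic for the applicant, the search returns `None` rather than an unhelpful suggestion.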

Strengths

- Useful for understanding decision boundaries and actionable insights.

- Helps in generating user-friendly explanations, particularly in high-stakes fields.

Limitations

- May be computationally intensive, especially for large datasets or complex models.

- Finding plausible counterfactuals can be challenging, as changes to some features may not be feasible in real-world scenarios.

Identify Target Prediction: The desired outcome or prediction.

Adjust Feature Values: Modify features to achieve the target outcome, with emphasis on specific changes, such as income.

Check Feasibility: Assess whether the feature adjustments are viable.

If Feasible: Move forward with the adjusted outcome.

If Not Feasible: Conclude with an alternative explanation.

Highlighted Feature (e.g., Income): Adjustments are specifically noted to show their effect on the final outcome.

Choosing the Right XAI Framework for Your Needs

Selecting the right XAI framework depends on your goals, model type, and available resources. For instance:

- If global insights with theoretical rigor are essential, SHAP is ideal.

- For quick, instance-level interpretability, LIME provides flexibility.

- When working with neural networks, Integrated Gradients may offer deeper insights, while Counterfactual Explanations suit any model where users need actionable “what if” feedback.

In conclusion, SHAP, LIME, and other XAI tools each bring unique strengths to the table.

Bringing It All Together: Choosing the Right XAI Technique

In the rapidly growing field of AI, choosing the right XAI framework is essential for interpreting and trusting machine learning models. Each technique—SHAP, LIME, Partial Dependence Plots, Integrated Gradients, and Counterfactual Explanations—serves unique interpretability needs and goals.

- SHAP is powerful for delivering consistent and theoretically grounded explanations. It’s ideal for applications needing both global and local insights.

- LIME shines in instance-specific interpretations and can be used across different models without modification, though it’s best suited for quick, local explanations.

- Partial Dependence Plots work well for global interpretability in simpler models, while Integrated Gradients offer in-depth local interpretations for neural networks.

- Counterfactual Explanations provide intuitive, “what-if” insights, especially useful in applications where actionable feedback is necessary.

As machine learning continues to evolve, explainable AI frameworks will remain essential for balancing innovation with ethical and transparent AI practices. Each of these XAI methods plays a critical role in ensuring models are not only effective but also understandable and trustworthy.

FAQs

How does SHAP differ from other interpretability tools?

SHAP uses Shapley values from game theory to measure feature contributions to predictions. Unlike other tools, it offers theoretically sound explanations and ensures fairness in how feature importance is calculated. SHAP provides both local and global interpretability, making it ideal for complex models where understanding each feature’s role is crucial.

When should I use LIME over SHAP?

LIME is useful when you need quick, local explanations and are working with a model-agnostic tool. If your goal is to understand why a specific instance was classified a certain way, LIME is often more efficient than SHAP. However, LIME may be less consistent and is best suited for single-instance interpretations rather than broader model insights.

What are Partial Dependence Plots (PDPs) best suited for?

Partial Dependence Plots are ideal for understanding the global influence of features across the dataset. PDPs are great for visualizing how changes in certain features affect model predictions, especially with simpler models. However, they are less effective in models with strong feature interactions, where individual feature impacts are harder to isolate.

Can Integrated Gradients work with any model?

Integrated Gradients is specific to differentiable models, like neural networks. It calculates the influence of each input feature on the prediction, providing smooth explanations ideal for deep learning applications. If you’re working with non-differentiable models, like decision trees, consider other methods like SHAP or LIME.

Are counterfactual explanations effective for interpretability?

Yes, counterfactual explanations are valuable, especially for providing actionable insights. By showing “what-if” scenarios, they help users understand what changes would alter a prediction. This approach is intuitive for many users and is especially useful in applications requiring direct feedback, such as loan approvals or credit scoring.

How do I choose the best XAI framework for my project?

Selecting an XAI framework depends on your interpretability goals, model type, and resources. For consistent, feature-based insights, SHAP is ideal. If you need quick, local explanations, LIME offers flexibility. For complex neural networks, Integrated Gradients is recommended. Finally, counterfactual explanations are best for situations where actionable insights are crucial.

How do SHAP values help in model debugging?

SHAP values can pinpoint which features are driving predictions, making them highly effective for debugging models. If a particular feature consistently contributes to incorrect predictions, it may indicate issues like data bias, overfitting, or feature misrepresentation. By revealing the impact of each feature, SHAP helps data scientists identify problem areas and refine model training to improve accuracy and reliability.

Is LIME accurate for high-dimensional datasets?

LIME can struggle with high-dimensional datasets, as generating interpretable local approximations becomes challenging with many features. While it’s still possible to use LIME, it may require dimensionality reduction techniques (e.g., PCA or feature selection) to simplify the dataset. SHAP is generally more effective in handling high-dimensional data, though it can be computationally demanding.

Can SHAP and LIME be used together?

Yes, SHAP and LIME can complement each other. SHAP provides consistent, feature-level importance scores, making it ideal for understanding both global and local model behavior. LIME, meanwhile, can offer quick, instance-specific insights. Using SHAP for feature importance and LIME for local approximations can yield a comprehensive understanding of model behavior from multiple angles.

Are there any challenges in implementing XAI techniques?

Yes, implementing XAI techniques can pose challenges, such as:

- High Computational Costs: SHAP, in particular, can be resource-intensive with complex models and large datasets.

- Data Privacy Concerns: XAI may expose sensitive information, especially in fields like healthcare, requiring careful handling of explanations.

- Risk of Over-Interpretation: Interpretations may sometimes be taken as definitive, even when they’re approximations. Balancing interpretability with accuracy is crucial.

How do Counterfactual Explanations support ethical AI?

Counterfactual explanations promote ethical AI by offering insights into model fairness and bias. By showing alternative outcomes based on small changes, they can help reveal potential biases or unfair decision-making processes within a model. For instance, if changing only an applicant’s gender leaves the loan decision unchanged while a higher income flips it to approval, that is consistent with fair treatment on that attribute. If changing gender alone flips the decision, it may highlight bias.

What is model-agnostic interpretability, and why is it important?

Model-agnostic interpretability refers to methods that can be applied to any model, regardless of its structure or type. This is important because it enables a consistent approach to explainability across various models, from simple regressions to complex neural networks. Techniques like LIME and SHAP (in some implementations) are model-agnostic, making them versatile tools in the XAI toolkit.

Which industries benefit the most from XAI?

Industries that rely on high-stakes decisions—such as healthcare, finance, criminal justice, and autonomous vehicles—benefit most from XAI. In these sectors, understanding AI-driven decisions is critical for compliance, transparency, and safety. XAI frameworks provide clarity on model decisions, allowing stakeholders to use AI with greater trust and accountability.

Is XAI necessary for all machine learning models?

Not necessarily. For straightforward models, such as linear regression or simple decision trees, explainability is often built-in. XAI becomes more critical as model complexity increases, especially with black-box models like deep neural networks or ensemble models (e.g., random forests). When models impact significant decisions, implementing XAI ensures that these decisions are understandable and justified.

How do I measure the effectiveness of an XAI framework?

Effectiveness in XAI can be measured by evaluating:

- User Trust: Do stakeholders feel more confident in the model after explanations?

- Model Improvement: Has interpretability helped identify and correct model errors or biases?

- Clarity and Usability: Are the explanations easy for non-experts to understand?

- Compliance: Does the model now meet regulatory and ethical standards for transparency?

Can SHAP and LIME be used with both classification and regression models?

Yes, both SHAP and LIME are versatile and can be applied to classification and regression models alike. They work by explaining the contribution of features toward a specific prediction, regardless of the prediction type. SHAP’s use of Shapley values and LIME’s local approximation methods are compatible with any output type, making them widely applicable across different types of machine learning tasks.

How do XAI techniques handle feature interactions?

Some XAI techniques handle feature interactions better than others. SHAP can naturally capture interactions between features due to its foundation in Shapley values, which consider contributions across combinations of features. LIME, however, does not inherently account for interactions because it generates linear approximations around individual instances, making it less effective for highly interactive models. For models with strong feature interactions, SHAP or techniques specifically designed for feature interaction analysis, like Interaction Effects with Partial Dependence Plots (PDP), may provide clearer insights.

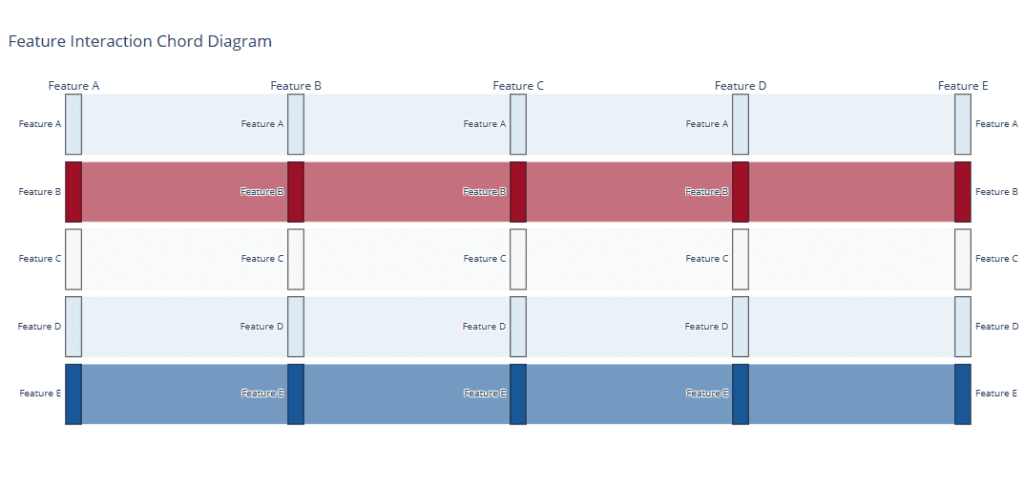

Features: List of feature names.

Interaction Matrix: Symmetric matrix defining interaction strength (random in this example).

Colorscale: The RdBu colorscale indicates strength and direction.

Is there a risk of “explanation bias” with XAI tools?

Yes, certain XAI tools may introduce explanation bias, especially if they rely on simplified local approximations. LIME, for instance, uses a linear model to approximate local behavior, which might not perfectly capture complex, non-linear decision boundaries, potentially leading to biased or incomplete explanations. It’s essential to interpret these approximations cautiously, cross-referencing results from multiple XAI tools when possible to get a more balanced understanding.

Can XAI techniques reveal sensitive or confidential information?

Yes, XAI techniques can sometimes unintentionally expose sensitive information within the data. For example, explanations may reveal individual-specific data points or identify influential features that correlate with private attributes. To mitigate this, it’s essential to apply privacy-preserving methods, especially in regulated industries like healthcare and finance, to ensure that explanations don’t compromise data privacy or security.

How do SHAP and LIME perform in real-time or production environments?

In real-time environments, computational cost is a major consideration. SHAP, while powerful, can be computationally expensive, which may not be ideal for real-time applications unless you use a fast model-specific algorithm (e.g., TreeSHAP, which computes exact Shapley values efficiently for tree-based models) or a sampling-based approximation. LIME is generally faster, but it still may not be suitable for high-frequency, low-latency applications unless precomputed explanations are used. For production environments, it’s critical to balance interpretability needs with performance constraints, sometimes by pre-calculating explanations or using streamlined, approximate versions of these techniques.

What role does XAI play in regulatory compliance?

XAI supports compliance with regulations such as the EU’s General Data Protection Regulation (GDPR), whose provisions on automated decision-making are often described as a “right to explanation,” and the EU’s Artificial Intelligence Act, which sets transparency obligations for certain AI systems. XAI tools like SHAP and LIME provide the transparency needed to help AI models meet compliance standards, offering traceability and clarity around decisions. Organizations using XAI tools can demonstrate transparency, fairness, and accountability, key requirements under many modern regulatory frameworks.

Are SHAP and LIME compatible with deep learning models?

Yes, SHAP and LIME can be used with deep learning models, though they have specific constraints. SHAP is compatible with deep models through Deep SHAP, a variant designed for neural networks. However, SHAP can still be computationally intensive with deep models. LIME, being model-agnostic, can also explain deep learning predictions by generating local approximations, but its linear nature may limit its ability to capture complex feature interactions within deep networks. For deep models, other techniques like Integrated Gradients or Grad-CAM may also provide more intuitive, model-specific explanations.

How are visualizations used to enhance explanations in XAI?

Visualization is a powerful component in XAI, turning complex explanations into intuitive, easily interpretable visuals. SHAP offers a range of visual tools, such as beeswarm plots, summary plots, and dependence plots, that display feature contributions across instances. LIME generates visual explanations for each instance, often showing bar charts of feature contributions. These visuals help stakeholders understand which features drive predictions, making the information accessible to non-experts and increasing overall transparency.

What are some common challenges in using SHAP and LIME with large datasets?

With large datasets, SHAP and LIME can encounter scalability issues. SHAP’s reliance on Shapley values can make it computationally demanding when dealing with many features or data points. TreeSHAP can help mitigate this for tree-based models but doesn’t fully eliminate the challenge. LIME is faster but may still be impractical with very large datasets due to the need for repeated sampling and local approximation. Sampling techniques, dimensionality reduction, or using subsets of data can help make SHAP and LIME more feasible with large datasets, though some loss in precision may occur.
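One common sampling idea is to estimate Shapley values from random feature orderings instead of enumerating every subset. The sketch below assumes a hypothetical three-feature `model`, an all-zero `baseline`, and 200 sampled permutations. It illustrates the trade-off between cost and precision and is not a description of any particular library’s sampler.

```python
import random

def model(x):
    # Hypothetical black-box model with an interaction between features 0 and 1.
    return 2.0 * x[0] + x[0] * x[1] + 0.5 * x[2]

def sampled_shapley(predict, x, baseline, n_permutations=200, seed=0):
    rng = random.Random(seed)
    n = len(x)
    phi = [0.0] * n
    order = list(range(n))
    for _ in range(n_permutations):
        rng.shuffle(order)           # random order in which features "arrive"
        current = list(baseline)     # start with every feature at its baseline
        previous = predict(current)
        for i in order:
            current[i] = x[i]        # switch feature i to its actual value
            updated = predict(current)
            phi[i] += (updated - previous) / n_permutations
            previous = updated
    return phi

x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
estimate = sampled_shapley(model, x, baseline)
print("Estimated Shapley values:", estimate)
```

Each permutation costs one model call per feature, so the budget is controlled directly by `n_permutations`. Fewer permutations mean faster but noisier estimates for features involved in interactions, while the estimates still sum to the gap between the prediction for `x` and the prediction for the baseline.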

How can XAI be used to improve model performance?

XAI is invaluable for model diagnostics and improvement. By highlighting which features contribute most to errors or inconsistencies, XAI frameworks like SHAP and LIME help identify overfitting, bias, and data quality issues. For instance, if SHAP identifies a feature that contributes disproportionately to errors, data scientists can revisit data preprocessing or feature engineering steps. Additionally, XAI can reveal underperforming model segments, allowing targeted model retraining and tuning that leads to better overall performance and robustness.

Resources

Blogs and Websites

- SHAP Documentation and GitHub Repository

The official SHAP GitHub repository includes comprehensive documentation, tutorials, and example code for implementing SHAP in Python. It’s regularly updated and maintained by Scott Lundberg, the creator of SHAP.

- LIME Documentation and GitHub Repository

The official LIME GitHub repository provides documentation and examples to help users get started with LIME. It also offers links to practical use cases and additional resources.

- Towards Data Science

Towards Data Science hosts numerous articles on XAI, SHAP, and LIME, with tutorials, real-world applications, and case studies contributed by data scientists. Search the platform for hands-on guides on specific XAI techniques.

Tutorials and Code Examples

- Kaggle Notebooks

Kaggle has a range of open-source notebooks covering XAI techniques, including SHAP and LIME. These notebooks provide practical demonstrations and allow users to experiment with sample datasets and real-world models. Start with Kaggle’s SHAP tutorial for an interactive guide.

- SHAP and LIME on Google Colab

Several Google Colab notebooks are available that walk users through SHAP and LIME implementations in Python. They’re a great option if you want to try XAI tools without needing to set up a local environment. You can find free tutorials with a quick search on Colab’s community resources.

Additional Resources and Blogs

- Distill.pub

Known for its innovative, interactive explanations of machine learning topics, Distill provides visually engaging articles on interpretability and XAI. While not specific to SHAP or LIME, it covers foundational concepts that enhance understanding of XAI.

- Google’s People + AI Research (PAIR) Initiative

Google’s PAIR Initiative offers articles, tools, and frameworks to make AI more understandable and inclusive. It’s a good resource for learning about broader XAI applications, especially if you’re interested in ethical AI and user-centered design.