Unlocking the Power of Code Embeddings: Trends and Innovations

Introduction to Code Embeddings

Code embeddings are revolutionizing how we handle and interpret software code. By transforming code snippets into fixed-size vectors, these embeddings capture the semantic meaning and functional intent behind code, facilitating tasks like code search, bug detection, and code summarization.

Why Code Embeddings Matter

Code embeddings improve code search accuracy by capturing the context and purpose of code snippets. They also streamline clone detection by identifying similar code segments and improve code summarization, making it easier to generate human-readable documentation.

Generating Code Embeddings

There are several approaches to generating code embeddings:

- Token-Based Methods: Treating code as a sequence of tokens, much like text, and representing it with techniques such as TF-IDF weighting or Word2Vec.

- Tree-Based Methods: Leveraging the structure of Abstract Syntax Trees (ASTs) to capture syntactic information.

- Graph-Based Methods: Representing code as graphs and using Graph Neural Networks (GNNs) to understand relationships.

- Neural Network-Based Methods: Employing Recurrent Neural Networks (RNNs) such as LSTM networks, or Transformer models like CodeBERT.
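To make the token-based approach concrete, here is a minimal sketch that treats each snippet as a bag of tokens, weights the tokens with TF-IDF, and compares snippets with cosine similarity. The regex tokenizer and the `code_tokens`, `tfidf_vectors`, and `cosine` helpers are illustrative assumptions, not part of any particular library. Note that TF-IDF produces sparse vectors indexed by vocabulary, unlike the dense vectors a model such as Word2Vec learns.

```python
import math
import re
from collections import Counter

# Hypothetical tokenizer: identifiers/keywords, integers, or any single non-space character.
TOKEN_RE = re.compile(r"[A-Za-z_]\w*|\d+|\S")


def code_tokens(code: str) -> list[str]:
    return TOKEN_RE.findall(code)


def tfidf_vectors(snippets: list[str]) -> list[dict[str, float]]:
    """Return one sparse TF-IDF vector (token -> weight) per snippet."""
    docs = [Counter(code_tokens(s)) for s in snippets]
    n = len(docs)
    # Document frequency: how many snippets contain each token.
    df = Counter(tok for doc in docs for tok in doc)
    vectors = []
    for doc in docs:
        total = sum(doc.values())
        vectors.append({
            # Smoothed IDF, so tokens found in every snippet still get a small weight.
            tok: (count / total) * (math.log((1 + n) / (1 + df[tok])) + 1)
            for tok, count in doc.items()
        })
    return vectors


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    dot = sum(w * b.get(tok, 0.0) for tok, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


snippets = [
    "def add(a, b): return a + b",
    "def plus(x, y): return x + y",
    "for line in open(path): print(line)",
]
vecs = tfidf_vectors(snippets)
print(cosine(vecs[0], vecs[1]))  # two addition functions: higher score
print(cosine(vecs[0], vecs[2]))  # unrelated loop: lower score
```

Because the two addition functions share `def`, `return`, `+`, and most punctuation, they score higher than the loop, even though their identifiers differ. This vocabulary overlap is exactly what token-based methods can and cannot see.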

Key Models for Code Embedding

- Code2Vec: This model uses paths in the AST to capture the meaning of code snippets.

- CodeBERT: A transformer model pre-trained on natural language and code across six programming languages (Python, Java, JavaScript, PHP, Ruby, and Go).

- GraphCodeBERT: An extension of CodeBERT that incorporates data flow information.

- CuBERT: A BERT model pre-trained on a large corpus of Python code and then fine-tuned for code classification and other tasks.
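To show what "paths in the AST" means, here is a simplified sketch using Python's standard `ast` module. It extracts Code2Vec-style path contexts: for every pair of identifier or literal leaves, it records the start token, the chain of node types going up to their lowest common ancestor and back down, and the end token. The helper names are hypothetical. The real Code2Vec also limits path length and width, learns an embedding for each token and path, and combines the resulting path-context vectors with attention into a single code vector. None of that learning is shown here.

```python
import ast
from itertools import combinations


def terminals(tree: ast.AST):
    """Yield (token, chain) for identifier and literal leaves.

    `chain` lists the AST nodes from the root down to the leaf itself.
    """
    def walk(node, ancestors):
        chain = ancestors + [node]
        if isinstance(node, ast.Name):
            yield node.id, chain
        elif isinstance(node, ast.arg):
            yield node.arg, chain
        elif isinstance(node, ast.Constant):
            yield repr(node.value), chain
        else:
            for child in ast.iter_child_nodes(node):
                yield from walk(child, chain)

    yield from walk(tree, [])


def path_context(leaf_a, leaf_b) -> tuple[str, str, str]:
    """(start token, path up to the lowest common ancestor and down, end token)."""
    (tok_a, chain_a), (tok_b, chain_b) = leaf_a, leaf_b
    # Count the shared root prefix; its last node is the lowest common ancestor.
    common = 0
    while (common < min(len(chain_a), len(chain_b))
           and chain_a[common] is chain_b[common]):
        common += 1
    lca = chain_a[common - 1]
    up = "".join(f"{type(n).__name__}↑" for n in reversed(chain_a[common:]))
    down = "".join(f"↓{type(n).__name__}" for n in chain_b[common:])
    return tok_a, up + type(lca).__name__ + down, tok_b


def path_contexts(source: str) -> list[tuple[str, str, str]]:
    leaves = list(terminals(ast.parse(source)))
    return [path_context(a, b) for a, b in combinations(leaves, 2)]


for ctx in path_contexts("def f(x):\n    return x + 1"):
    print(ctx)
```

For `def f(x): return x + 1` this yields three path contexts, including `('x', 'Name↑BinOp↓Constant', '1')` for the expression `x + 1` and `('x', 'arg↑arguments↑FunctionDef↓Return↓BinOp↓Name', 'x')`, which links the parameter to its use.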

Applications of Code Embeddings

- Intelligent Code Editors: Autocompletion and error detection.

- Automated Code Review: Highlighting potential issues during reviews.

- Code Migration and Refactoring: Suggesting improvements or transformations.

Emerging Trends in Software Development

Artificial intelligence is significantly influencing software development, including code embedding. AI tools such as ChatGPT assist in debugging and automate repetitive coding tasks, improving overall efficiency and code quality.

Low-Code and No-Code Solutions are also gaining popularity, making coding accessible to a broader audience. These platforms simplify complex coding tasks, enabling more people to create and innovate without extensive coding knowledge.

Remote Work and Hybrid Models have become prevalent post-pandemic. Coders now have more flexibility, but they must also navigate challenges such as maintaining productivity and resolving technical issues independently.

Sustainable Software Development is becoming crucial as environmental awareness grows. Developers focusing on optimizing code for minimal resource consumption will be at the forefront of this movement.

Cybersecurity remains a top priority. Developers must adopt a security-first approach throughout the development cycle to mitigate risks and protect sensitive data.

Blockchain Applications are expanding beyond cryptocurrencies into logistics, intellectual property protection, and secure data storage, offering new opportunities for developers.

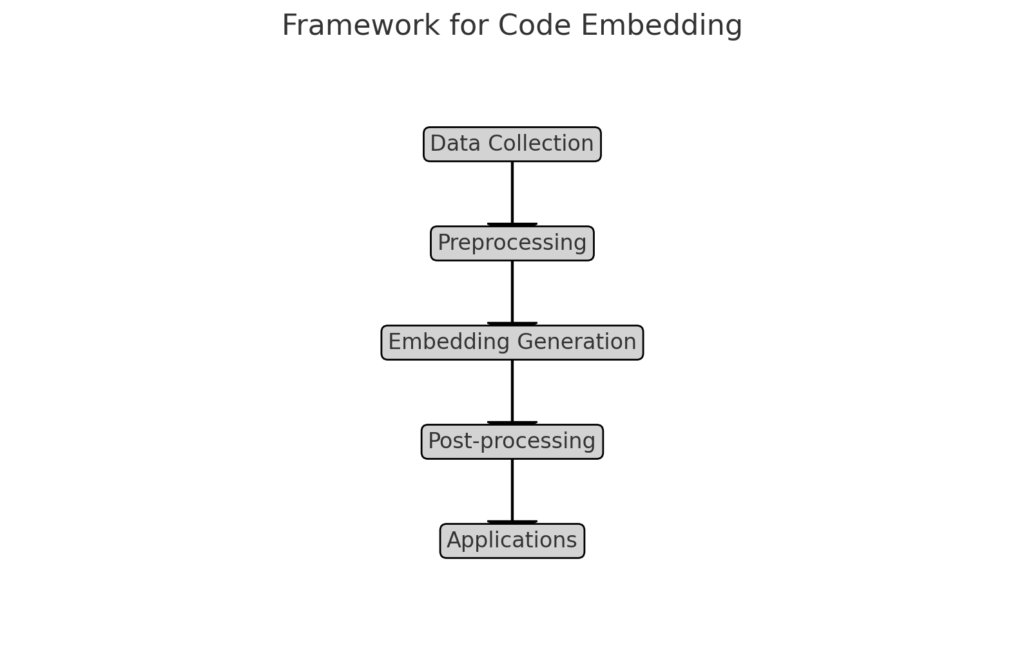

Here is a visual representation of the framework for code embedding. It outlines the key components and stages involved in the process:

- Data Collection: Gathering source code, documentation, and snippets.

- Preprocessing: Tokenization, normalization, and parsing of the code.

- Embedding Generation: Model selection, training, and vectorization.

- Post-processing: Dimensionality reduction and clustering.

- Applications: Implementing code search, summarization, completion, and bug detection.
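For Python source, the preprocessing stage can be sketched with the standard-library `tokenize` module. This sketch drops comments and layout tokens, replaces string and number literals with the placeholder tokens `<STR>` and `<NUM>`, and splits identifiers such as `getUserName` into lowercase subtokens. These normalization rules and the `preprocess` and `split_identifier` helpers are illustrative assumptions. Real pipelines choose their own rules.

```python
import io
import keyword
import re
import tokenize

# Token types that carry no meaning for the embedding model in this sketch.
SKIP = {tokenize.COMMENT, tokenize.NL, tokenize.NEWLINE, tokenize.INDENT,
        tokenize.DEDENT, tokenize.ENDMARKER}


def split_identifier(name: str) -> list[str]:
    """Split snake_case and camelCase identifiers into lowercase subtokens."""
    parts = re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", name)
    return [p.lower() for p in parts] or [name.lower()]


def preprocess(source: str) -> list[str]:
    tokens = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type in SKIP:
            continue
        if tok.type == tokenize.STRING:
            tokens.append("<STR>")  # normalize all string literals
        elif tok.type == tokenize.NUMBER:
            tokens.append("<NUM>")  # normalize all numeric literals
        elif tok.type == tokenize.NAME and not keyword.iskeyword(tok.string):
            tokens.extend(split_identifier(tok.string))
        else:
            tokens.append(tok.string)  # keywords and operators kept as-is
    return tokens


source = 'def getUserName(user_id):  # look up\n    return lookup("users", user_id, 3)\n'
print(preprocess(source))
```

For the sample source, the output begins `['def', 'get', 'user', 'name', '(', 'user', 'id', ')', ':', 'return', ...]`. The comment is gone, and the literals appear only as `<STR>` and `<NUM>`.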

Unlocking Insights from Complex Data with Vector Embeddings

Vector embedding transforms complex data such as text, images, or code into continuous, dense vectors of fixed dimension. These vectors capture semantic relationships within the data, making it easier for machine learning models to process.

Why Vector Embeddings Matter

Vector embeddings are crucial for advanced applications in machine learning and artificial intelligence:

Enhanced Search and Retrieval: Embedding documents or code snippets into vector space helps identify similar items based on their proximity in that space.

Recommendation Systems: Embeddings help recommend items semantically similar to what a user has shown interest in.

Natural Language Processing: They are fundamental in tasks like sentiment analysis, language translation, and text generation.
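Retrieval by proximity can be sketched as a brute-force nearest-neighbor search: score every stored vector against the query with cosine similarity and keep the top k. The three-dimensional vectors and snippet names below are made up for illustration. Real embeddings have hundreds of dimensions.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Score every stored item exhaustively and return the k most similar."""
    scored = ((cosine(query, vec), key) for key, vec in index.items())
    return [(key, score) for score, key in heapq.nlargest(k, scored)]


# Toy vectors standing in for embeddings of code snippets.
index = {
    "sort_list": [0.9, 0.1, 0.0],
    "sort_array": [0.8, 0.2, 0.1],
    "read_file": [0.0, 0.2, 0.9],
}
print(top_k([1.0, 0.1, 0.0], index))  # sort_list first, then sort_array
```

Scoring every item costs time proportional to the collection size for each query. Large collections therefore usually rely on approximate nearest-neighbor indexes instead of this exhaustive loop.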

Types of Vector Embeddings

Word Embeddings

- Word2Vec: Developed by Google, it learns word relationships by predicting a word from its surrounding context (CBOW) or the surrounding words from a given word (skip-gram).

- GloVe (Global Vectors for Word Representation): Developed at Stanford, it learns word vectors from global word co-occurrence statistics in a corpus.
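Word2Vec and GloVe learn from different views of the same corpus. This sketch shows only how that training signal is extracted, not the models themselves. It builds the (center, context) pairs that skip-gram Word2Vec trains on, and the distance-weighted co-occurrence counts that GloVe fits its vectors to, where a pair d tokens apart contributes 1/d. The window sizes and helper names are illustrative choices.

```python
from collections import Counter


def context_positions(i: int, n: int, window: int) -> list[int]:
    """Indices within `window` tokens of position i, excluding i itself."""
    return [j for j in range(max(0, i - window), min(n, i + window + 1)) if j != i]


def skipgram_pairs(tokens: list[str], window: int = 2) -> list[tuple[str, str]]:
    """(center, context) pairs; skip-gram learns to predict the context word from the center word."""
    return [(tokens[i], tokens[j])
            for i in range(len(tokens))
            for j in context_positions(i, len(tokens), window)]


def cooccurrence_counts(tokens: list[str], window: int = 2) -> Counter:
    """GloVe-style co-occurrence counts; a pair d tokens apart adds 1/d."""
    counts = Counter()
    for i in range(len(tokens)):
        for j in context_positions(i, len(tokens), window):
            counts[(tokens[i], tokens[j])] += 1.0 / abs(i - j)
    return counts


tokens = "the cat sat on the mat".split()
print(skipgram_pairs(tokens, window=1)[:3])  # [('the', 'cat'), ('cat', 'the'), ('cat', 'sat')]
print(cooccurrence_counts(tokens)[("the", "sat")])  # 1.0: both "the"s are 2 tokens from "sat"
```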

Sentence and Document Embeddings

- Doc2Vec: An extension of Word2Vec that generates vectors for entire documents.

- BERT (Bidirectional Encoder Representations from Transformers): A transformer-based model that produces context-aware token embeddings, which can be pooled into embeddings for sentences and documents.

Image Embeddings

- Convolutional Neural Networks (CNNs): Extract features from images and transform them into embeddings for tasks like image classification and object detection.

Code Embeddings

- Code2Vec: Captures the meaning of code snippets by considering paths in the abstract syntax tree.

- GraphCodeBERT: Extends CodeBERT by incorporating data flow information, improving understanding of the code’s structure and semantics.

How Vector Embeddings Work

Vector embeddings map high-dimensional data into a lower-dimensional vector space. A model is trained on a large dataset so that similar data points end up close together in that space, with distance reflecting semantic similarity.

For example, in text embeddings:

- Training Phase: The model learns word associations from a corpus.

- Embedding Generation: New text is transformed into vectors based on learned associations.
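The mapping from a high-dimensional to a low-dimensional space can be seen in a toy example. Each word starts as a one-hot vector as long as the vocabulary. Multiplying that vector by an embedding matrix, which is the same as looking up one row, yields a short dense vector. The five-word vocabulary and the matrix values below are made up to mimic what training would produce, with related words placed near each other.

```python
# Vocabulary of 5 words -> 5-dimensional one-hot vectors (sparse, high-dimensional).
VOCAB = ["cat", "dog", "car", "truck", "the"]

# Hypothetical embedding matrix: one 2-d row per vocabulary word (dense, low-dimensional).
# Toy values standing in for weights learned during training.
EMBEDDINGS = [
    [0.9, 0.1],  # cat
    [0.8, 0.2],  # dog
    [0.1, 0.9],  # car
    [0.2, 0.8],  # truck
    [0.5, 0.5],  # the
]


def one_hot(word: str) -> list[float]:
    """Vector with a single 1.0 at the word's vocabulary index."""
    return [1.0 if w == word else 0.0 for w in VOCAB]


def project(vector: list[float], matrix: list[list[float]]) -> list[float]:
    """Vector-matrix product: maps a len(VOCAB)-dim vector to a 2-dim one."""
    return [sum(v * row[d] for v, row in zip(vector, matrix)) for d in range(len(matrix[0]))]


def lookup(word: str) -> list[float]:
    """What embedding layers do in practice: read the word's row directly."""
    return EMBEDDINGS[VOCAB.index(word)]


def squared_distance(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


print(project(one_hot("dog"), EMBEDDINGS), lookup("dog"))  # same vector both ways
print(squared_distance(lookup("cat"), lookup("dog")))  # related words: small distance
print(squared_distance(lookup("cat"), lookup("car")))  # unrelated words: larger distance
```

Training adjusts the rows of the matrix. The one-hot multiplication is only a way to see why an embedding layer is a learned linear map from vocabulary space into a smaller space.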

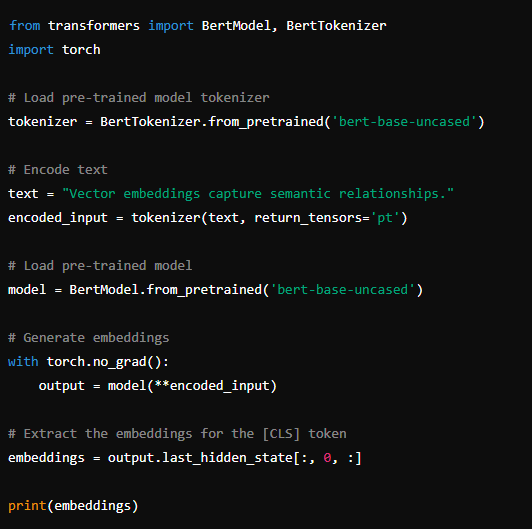

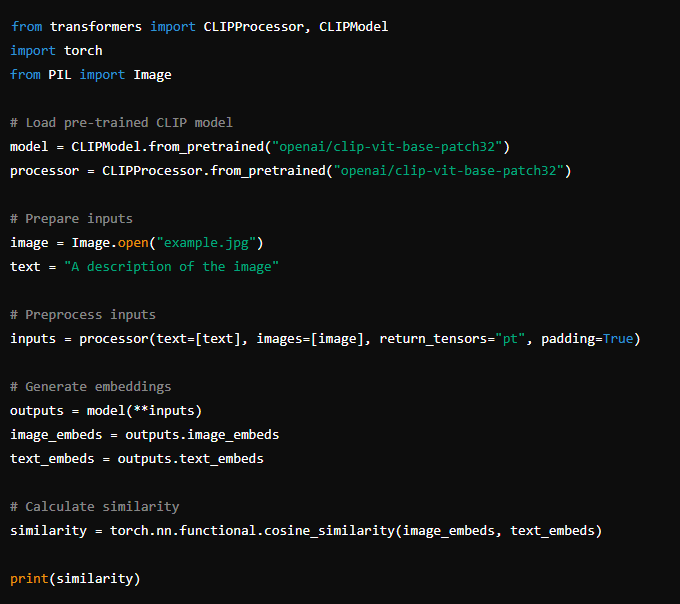

Code Example: Generating Text Embeddings with BERT

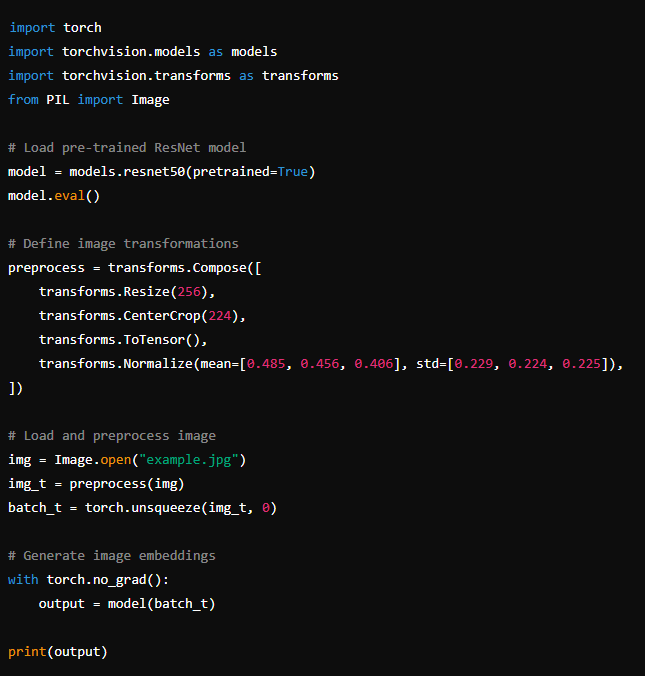
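BERT produces one vector per token, so a sentence embedding needs a pooling step. A common choice is mean pooling over the real tokens, using the attention mask so that padding is ignored. Another is taking the `[CLS]` token's vector. The sketch below shows only the pooling step, in plain Python on made-up token vectors. With Hugging Face Transformers, the inputs would typically be the model output's `last_hidden_state` and the tokenizer's `attention_mask`.

```python
def mean_pool(token_vectors: list[list[float]], attention_mask: list[int]) -> list[float]:
    """Average the vectors of real tokens (mask 1), skipping padding (mask 0)."""
    kept = [vec for vec, m in zip(token_vectors, attention_mask) if m == 1]
    if not kept:
        raise ValueError("attention mask selects no tokens")
    dim = len(kept[0])
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


# Made-up 2-d vectors for the tokens "[CLS] hello world [SEP] [PAD]".
token_vectors = [[0.1, 0.3], [0.5, 0.1], [0.3, 0.5], [0.1, 0.1], [9.0, 9.0]]
attention_mask = [1, 1, 1, 1, 0]
print(mean_pool(token_vectors, attention_mask))  # close to [0.25, 0.25]
```

Without the mask, the padding vector `[9.0, 9.0]` would dominate the average.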

Code Example: Image Embeddings with CNN
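A dependency-free toy can illustrate how a CNN turns an image into a fixed-length embedding. It convolves the image with a set of filters, applies ReLU, and global-average-pools each feature map to a single number. The two hand-set edge filters and the helper names are assumptions for illustration. A real CNN, for instance a pretrained ResNet with its classification layer removed, learns its filters and stacks many such layers.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Valid (no padding) 2-D cross-correlation, the operation CNN layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


def relu(fmap: list[list[float]]) -> list[list[float]]:
    return [[max(0.0, x) for x in row] for row in fmap]


def global_avg_pool(fmap: list[list[float]]) -> float:
    values = [x for row in fmap for x in row]
    return sum(values) / len(values)


# Hand-set 3x3 filters; a trained CNN learns many such filters from data.
KERNELS = {
    "vertical_edge": [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]],
    "horizontal_edge": [[-1, -1, -1], [0, 0, 0], [1, 1, 1]],
}


def image_embedding(image: list[list[float]]) -> list[float]:
    """One number per filter: conv -> ReLU -> global average pooling."""
    return [global_avg_pool(relu(conv2d(image, k))) for k in KERNELS.values()]


# 5x5 grayscale image: dark left side, bright right side (a vertical edge).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
print(image_embedding(image))  # [2.0, 0.0]
```

The vertical edge activates only the first filter. Transposing the image into a horizontal edge would swap the two values, so images with different structure land at different points in the embedding space.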

Code Example: Combining Text and Image Embeddings
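CLIP is trained so that matching images and captions land close together in one shared space. When text and image embeddings come from such a model, they can be combined in two simple ways: compared directly by cosine similarity, or concatenated into one joint vector. The sketch below assumes the vectors were already produced by CLIP-like encoders (the numbers are made up). It uses a fixed scale of 100 in place of CLIP's learned temperature, and the helper names are hypothetical.

```python
import math


def normalize(v: list[float]) -> list[float]:
    """Scale to unit length; CLIP-style matching compares L2-normalized vectors."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def match_captions(image_vec: list[float], caption_vecs: list[list[float]],
                   scale: float = 100.0) -> list[float]:
    """Cosine similarity between one image and each caption, turned into probabilities."""
    img = normalize(image_vec)
    sims = [sum(a * b for a, b in zip(img, normalize(c))) for c in caption_vecs]
    return softmax([scale * s for s in sims])


def fuse(text_vec: list[float], image_vec: list[float]) -> list[float]:
    """Joint representation: concatenate the two normalized vectors."""
    return normalize(text_vec) + normalize(image_vec)


# Made-up vectors assumed to come from CLIP-like text and image encoders.
image_vec = [0.9, 0.1, 0.2]
captions = {
    "a photo of a cat": [1.0, 0.0, 0.1],
    "a photo of a car": [0.0, 1.0, 0.3],
}
probs = match_captions(image_vec, list(captions.values()))
for caption, p in zip(captions, probs):
    print(f"{caption}: {p:.3f}")
joint = fuse(captions["a photo of a cat"], image_vec)  # 6-dimensional combined vector
```

Matching in the shared space suits retrieval and zero-shot classification. The concatenated vector suits a downstream model that needs both signals at once.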

These examples demonstrate how to generate embeddings for text, images, and combined text-image scenarios using models such as BERT, CNNs, and CLIP.

Applications of Vector Embeddings

Search Engines

Enhancing search accuracy by understanding the context and meaning of queries.

Recommendation Systems

Suggesting similar products or content by comparing user preferences in vector space.

NLP Tasks

Improving the performance of tasks like translation, summarization, and sentiment analysis.

Image Recognition

Identifying objects and features in images for applications in security, healthcare, and more.

Code Analysis

Facilitating code search, summarization, and bug detection.

Challenges and Future Directions

Scalability

Handling embeddings for large datasets efficiently remains a challenge.

Interpretability

Making the embeddings and their impact on decisions understandable to humans is crucial for trust.

Cross-Domain Applications

Developing embeddings that work across different types of data (text, images, code) is an ongoing area of research.

Continuous Learning

Adapting embeddings to evolving data and contexts is essential for maintaining their relevance.

Emerging Trends

Adoption in Enterprise Data Management: Vector embeddings are becoming a core enterprise data type, with new database platforms optimized for storing and searching vectors.

Advancements in Model Flexibility: Newer embedding models let users shorten their output vectors to fewer dimensions, trading some accuracy for lower storage and compute costs (OpenAI).
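Shortening an embedding can be sketched as keeping its leading dimensions and rescaling the result to unit length. This is only meaningful for models trained so that the leading dimensions carry most of the information. OpenAI's text-embedding-3 models, for example, expose this through a `dimensions` request parameter. Truncating vectors from other models may noticeably hurt quality. The `shorten` helper is hypothetical.

```python
import math


def shorten(embedding: list[float], dims: int) -> list[float]:
    """Keep the first `dims` values and rescale them to unit length."""
    if not 0 < dims <= len(embedding):
        raise ValueError("dims must be between 1 and len(embedding)")
    head = embedding[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head] if norm else head


full = [0.6, 0.0, 0.8, 0.0]  # toy unit-length 4-d embedding
short = shorten(full, 2)     # 2-d vector, rescaled to unit length
print(short)
```

Storage and similarity computation both scale linearly with the number of dimensions, so halving the dimensions roughly halves both costs.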

Hybrid Retrieval Techniques: Combining dense (embedding-based) and sparse (keyword-based) vectors can improve retrieval accuracy, since each captures matches the other misses. This benefits applications such as image similarity searches and NLP tasks.
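One common way to merge a dense ranking and a sparse ranking is reciprocal rank fusion (RRF), where each document scores the sum of 1 / (k + rank) over the lists it appears in. Other systems instead use a weighted sum of normalized scores. The sketch assumes both retrievers have already returned ranked lists of document IDs (made up here). `k = 60` is the constant commonly used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists; higher fused score means more relevant."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


dense = ["doc_b", "doc_a", "doc_c"]   # ranked by embedding similarity
sparse = ["doc_a", "doc_d", "doc_b"]  # ranked by keyword match (e.g. BM25)
fused = reciprocal_rank_fusion([dense, sparse])
print([doc for doc, _ in fused])  # ['doc_a', 'doc_b', 'doc_d', 'doc_c']
```

`doc_a` wins because it ranks near the top of both lists. RRF uses only ranks, so it needs no calibration between the very different score scales of dense and sparse retrievers.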

Vector embeddings are rapidly evolving, with significant advancements improving their efficiency, scalability, and applicability across various domains. As these technologies integrate deeper into enterprise strategies, they will unlock new possibilities for data-driven insights and applications.

TransformCode: A Framework for Code Embedding

Introduction

Code embedding is a technique used to represent source code in a continuous vector space. This representation is useful for various tasks such as code completion, code summarization, bug detection, and more. TransformCode is a framework designed to facilitate the generation and use of code embeddings. It leverages state-of-the-art deep learning models to create high-quality embeddings that capture the syntactic and semantic properties of code.

Key Components of TransformCode

- Tokenizer:

- Tokenization is the process of converting code into a sequence of tokens.

- TransformCode supports various programming languages and uses language-specific tokenizers to handle the syntactic rules of each language.

- Embedding Models:

- The framework provides pre-trained models based on transformer architectures like BERT, GPT, and their variants.

- These models are fine-tuned on large code repositories to understand the context and structure of the code.

- Training Pipeline:

- Users can train custom models using the provided pipeline.

- The pipeline includes data preprocessing, model training, and evaluation steps.

- Evaluation Metrics:

- TransformCode includes several metrics to evaluate the quality of code embeddings, such as precision, recall, F1-score, and BLEU score for tasks like code translation and summarization.

- APIs and Interfaces:

- The framework offers easy-to-use APIs for embedding generation, model training, and evaluation.

- It also provides interfaces for integrating with popular IDEs and code editors to facilitate real-time code analysis and completion.

Use Cases

- Code Completion:

- TransformCode can be integrated into IDEs to provide intelligent code completion suggestions based on the context of the code being written.

- Code Summarization:

- The embeddings can be used to generate summaries of code snippets, making it easier to understand the functionality of the code.

- Bug Detection and Fixing:

- By comparing code embeddings, the framework can identify potential bugs and suggest fixes based on similar code patterns.

- Code Search and Retrieval:

- Developers can search for code snippets using natural language queries, with TransformCode converting the queries and code into a common embedding space for efficient retrieval.

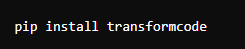

Getting Started with TransformCode

Installation:

- Install the framework via pip:

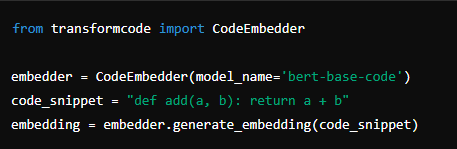

Generating Embeddings:

- Load a pre-trained model and generate embeddings for a given code snippet:

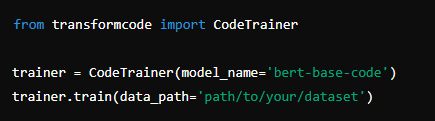

Training a Custom Model:

- Prepare your dataset and use the training pipeline:

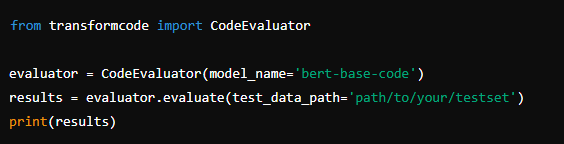

Evaluating the Model:

- Evaluate the performance of your model on a test set:
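The precision, recall, and F1 metrics mentioned earlier can be computed directly from test-set predictions, independently of any particular framework's evaluation API. This hypothetical sketch treats clone detection as a binary task. A pair of snippets is predicted to be a clone when its embedding similarity reaches a threshold (0.8 here, an arbitrary assumption), and the predictions are compared with gold labels. The similarity scores and labels are made up.

```python
def precision_recall_f1(predicted: list[int], gold: list[int]) -> tuple[float, float, float]:
    """Binary metrics; 1 marks the positive class (here, 'this pair is a clone')."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    tp = sum(1 for p, g in zip(predicted, gold) if p == 1 and g == 1)
    fp = sum(1 for p, g in zip(predicted, gold) if p == 1 and g == 0)
    fn = sum(1 for p, g in zip(predicted, gold) if p == 0 and g == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical test set: cosine similarity of each snippet pair's embeddings, plus gold labels.
similarities = [0.92, 0.55, 0.30, 0.85, 0.88, 0.10]
gold = [1, 1, 0, 0, 1, 0]
THRESHOLD = 0.8  # arbitrary cut-off for calling a pair a clone
predicted = [1 if s >= THRESHOLD else 0 for s in similarities]
print(precision_recall_f1(predicted, gold))
```

Here two of the three flagged pairs are true clones, and two of the three true clones are found. Precision, recall, and F1 therefore all come out to 2/3. Sweeping `THRESHOLD` trades precision against recall.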

Conclusion

TransformCode aims to simplify the process of generating and utilizing code embeddings, making it accessible to developers and researchers. With its comprehensive set of tools and pre-trained models, it enables various applications in the software development lifecycle, ultimately enhancing productivity and code quality.

Code embedding is an evolving field with immense potential. By understanding and leveraging these embeddings, developers can significantly enhance their efficiency and the quality of their software projects. Staying updated with the latest trends and technologies in this area will be crucial for any developer aiming to excel in the industry.

FAQ: Understanding Code and Vector Embeddings

Q1: What are code embeddings?

A1: Code embeddings are a way of transforming code snippets into dense, fixed-size vectors that capture the semantic meaning of the code. This helps in various tasks like code search, bug detection, and code summarization.

Q2: How are vector embeddings different from code embeddings?

A2: Vector embeddings refer to the general technique of converting data into numerical vectors, applicable to text, images, and more. Code embeddings are a specific application of vector embeddings, focused on converting programming code into vectors.

Q3: Why are code embeddings important?

A3: They enhance the accuracy of code search, streamline clone detection, improve code summarization, and assist in debugging by capturing the context and functionality of code snippets.

Q4: What methods are used to generate code embeddings?

A4: Common methods include:

- Token-Based Methods: Using techniques like TF-IDF or Word2Vec.

- Tree-Based Methods: Utilizing Abstract Syntax Trees (AST).

- Graph-Based Methods: Employing Graph Neural Networks (GNNs).

- Neural Network-Based Methods: Using RNNs, LSTM networks, and Transformer models like CodeBERT.

Q5: Can you give examples of models used for code embeddings?

A5: Popular models include:

- Code2Vec: Uses AST paths to capture code meaning.

- CodeBERT: A transformer model supporting multiple programming languages.

- GraphCodeBERT: Incorporates data flow information for better understanding.

- CuBERT: A BERT model pre-trained on a large corpus of Python code.

Q6: What are the applications of code embeddings?

A6: Applications include:

- Intelligent Code Editors: For autocompletion and error detection.

- Automated Code Review: Highlighting potential issues.

- Code Migration and Refactoring: Suggesting improvements and transformations.

Q7: What emerging trends are shaping software development alongside code embeddings?

A7: Trends include the integration of AI, the rise of low-code/no-code solutions, increased prevalence of remote and hybrid work models, focus on sustainable software development, enhanced cybersecurity, and expanding blockchain applications (Codementor) (Unite.AI) (SnapLogic).

Q8: How do vector embeddings work?

A8: Vector embeddings map high-dimensional data into a lower-dimensional vector space by training on large datasets. Similar data points are positioned closely together, reflecting their semantic similarity.

Q9: What challenges exist with code and vector embeddings?

A9: Challenges include scalability, interpretability, cross-domain application, and the need for continuous learning to adapt to evolving data and contexts.

References

- Code2Vec: Learning Distributed Representations of Code

- CodeBERT: A Pre-Trained Model for Programming and Natural Languages

- GraphCodeBERT: Pre-Training Code Representations with Data Flow

- CuBERT: BERT for Code

- 6 Coding Trends for 2024 and Beyond

- All About AI: Top Data Trends and Predictions for 2024

- Code Embedding: A Comprehensive Guide

- Interpretability and Explainability in Machine Learning

- SpikeGPT Language

- GraphCast