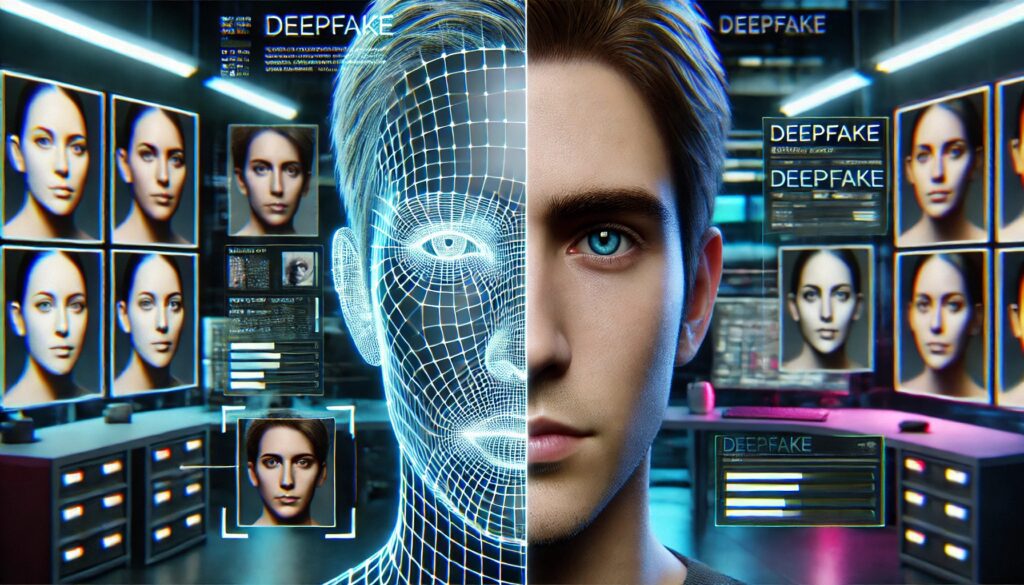

In the rapidly evolving digital landscape, deepfakes (AI-generated images and videos) pose a significant threat to authenticity and trust. Researchers have found a promising way to unmask these convincing forgeries: looking closely at the reflections in the eyes.

The Window to Authenticity

A study led by researchers at the University of Hull suggests that the reflections in a person’s eyes can be a reliable indicator of whether an image is real or AI-generated. In a photo of a real person, both eyes are lit by the same surroundings, so the light reflected in them should be consistent. In deepfake images, these reflections often don’t match, because image generators do not accurately model the physics of light.

The Gini Index: From Economics to Eye Analysis

The researchers used the Gini index, a measure originally developed in economics to quantify income inequality, to analyze how light is distributed across the pixels of each eye’s reflection. Comparing the values for the left and right eyes, they found that in real photos the two reflections tend to be similar, while in fake images they are often inconsistent. Astronomers already use the Gini index to describe how a galaxy’s light is spread across the pixels of an image, and the team adapted that technique to eyes.
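To make the Gini comparison concrete, here is a minimal sketch. It assumes each eye’s reflection has already been cropped and flattened into a list of grayscale pixel values (the cropping step and the function names `gini` and `gini_mismatch` are hypothetical). It uses the form of the Gini coefficient common in galaxy morphology, G = Σ(2i − n − 1)·xᵢ / (x̄·n·(n − 1)) with pixels sorted from faintest to brightest, and then compares the two eyes.

```python
from typing import Sequence


def gini(pixels: Sequence[float]) -> float:
    """Gini coefficient of pixel intensities.

    0 means light is spread evenly over all pixels; 1 means all the light
    sits in a single pixel. Pixels are sorted from faintest to brightest.
    """
    values = sorted(abs(p) for p in pixels)
    n = len(values)
    if n < 2:
        raise ValueError("need at least two pixels")
    mean = sum(values) / n
    if mean == 0:
        raise ValueError("region contains no light")
    # Faint pixels get negative weights, bright pixels positive weights.
    weighted = sum((2 * i - n - 1) * v for i, v in enumerate(values, start=1))
    return weighted / (mean * n * (n - 1))


def gini_mismatch(left_eye: Sequence[float], right_eye: Sequence[float]) -> float:
    """Absolute difference between the two eyes' Gini values.

    Hypothetical score: small values suggest consistent reflections, but
    what counts as "small" would have to be calibrated on real data.
    """
    return abs(gini(left_eye) - gini(right_eye))


if __name__ == "__main__":
    # Toy flattened eye crops (made-up pixel values, not study data).
    left = [0, 0, 1, 8, 9, 1, 0, 0]
    right = [0, 1, 7, 9, 1, 0, 0, 0]
    print(f"Gini left={gini(left):.3f} right={gini(right):.3f} "
          f"mismatch={gini_mismatch(left, right):.3f}")
```

A uniform patch such as `[1, 1, 1, 1]` scores 0, while a single bright pixel in `[0, 0, 0, 5]` scores 1, so a real face should give two reflections with similar values somewhere in between.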

Celestial Techniques for Terrestrial Problems

This approach also borrows other astronomical tools. The CAS (concentration, asymmetry, smoothness) system, which describes how light is distributed in a galaxy, can likewise be applied to the reflection in each eye, and the results for the left and right eyes compared. The reflections in a real person’s eyes should be consistent with each other, but deepfake images often fail this test.
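The "A" in CAS is the easiest parameter to illustrate. The sketch below computes a simplified rotational asymmetry, Σ|I − I₁₈₀| / Σ|I|, for a 2-D eye crop and compares the two eyes. It assumes each crop is centred on the reflection (astronomers rotate around the galaxy’s centre) and omits the background correction and centre optimisation used in real astronomical pipelines; the function names are hypothetical.

```python
from typing import List

Image = List[List[float]]


def rotate_180(image: Image) -> Image:
    """Rotate a 2-D image by 180 degrees about its centre."""
    return [list(reversed(row)) for row in reversed(image)]


def asymmetry(image: Image) -> float:
    """Simplified CAS asymmetry: sum|I - I_180| / sum|I|.

    0 means the crop looks identical after a half-turn. No background
    correction or centre search is applied (a simplifying assumption).
    """
    rotated = rotate_180(image)
    total = sum(abs(v) for row in image for v in row)
    if total == 0:
        raise ValueError("image contains no light")
    diff = sum(
        abs(a - b)
        for row, rot_row in zip(image, rotated)
        for a, b in zip(row, rot_row)
    )
    return diff / total


def asymmetry_mismatch(left_eye: Image, right_eye: Image) -> float:
    """Hypothetical score: difference in asymmetry between the two eyes."""
    return abs(asymmetry(left_eye) - asymmetry(right_eye))
```

A centred, symmetric highlight such as `[[0, 1, 0], [1, 2, 1], [0, 1, 0]]` has asymmetry 0, while a lone bright corner pixel reaches the maximum of 2. Concentration and smoothness would be computed per eye and compared in the same way.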

Automated Detection Tools

A team at the University at Buffalo developed a tool that automates a related analysis; in experiments it was 94% effective at spotting fake portrait-style photos. The tool examines the light reflected in each eye, looking for small differences in shape, intensity, and other features between the two. Because the cornea reflects light almost like a mirror, it is a good target for this kind of analysis.
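As one illustration of how such a comparison could be scored, the sketch below thresholds each eye crop to isolate the bright corneal highlight and measures how much the two masks overlap using intersection over union (IoU). The threshold of 200 (on a 0 to 255 grayscale), the assumption that both crops are the same size and already aligned, and the function names are all hypothetical; IoU is used here as an illustrative similarity measure, not as a confirmed description of the Buffalo tool.

```python
from typing import List, Set, Tuple

Image = List[List[float]]
Pixel = Tuple[int, int]


def highlight_mask(eye: Image, threshold: float) -> Set[Pixel]:
    """Coordinates of pixels bright enough to count as the specular highlight."""
    return {
        (r, c)
        for r, row in enumerate(eye)
        for c, value in enumerate(row)
        if value >= threshold
    }


def highlight_iou(left_eye: Image, right_eye: Image, threshold: float = 200) -> float:
    """Intersection over union of the two eyes' highlight masks.

    Assumes both crops are the same size and aligned. Returns 1.0 for
    identical highlights and 0.0 for highlights that do not overlap.
    """
    left = highlight_mask(left_eye, threshold)
    right = highlight_mask(right_eye, threshold)
    if not left or not right:
        # No visible reflection means there is nothing to compare.
        raise ValueError("no specular highlight found in one or both eyes")
    return len(left & right) / len(left | right)
```

Raising an error when no highlight is found, instead of returning a score, reflects the method’s dependence on a clearly reflected light source: without one, the comparison says nothing about authenticity.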

Limitations and Future Directions

While promising, these methods are not foolproof. They require a light source that is clearly reflected in the eyes, and mismatched reflections can sometimes be fixed during editing. The technique also fails when the subject’s eyes are not visible. Despite these limitations, this approach represents a significant step forward in the fight against deepfakes.

Broader Implications

This eye-reflection technique is part of a larger toolkit for identifying AI-generated images. As AI technology continues to advance, so must our methods for detecting its creations. This research is a crucial development in the ongoing battle to maintain the integrity of digital media.

Researchers are continually refining these methods, exploring other potential markers of AI-generated images and videos. The goal is to stay ahead of the evolving technology used to create deepfakes, ensuring that we can reliably identify and challenge these digital deceptions.

By combining this eye-reflection technique with other detection methods, such as analyzing inconsistencies in the blink rates or facial movements of subjects in videos, we can develop a robust defense against the growing threat of deepfakes. This multifaceted approach will be crucial for social media platforms, news organizations, and everyday users to navigate the increasingly blurry line between real and fake in our digital world.

For more in-depth information, you can explore the studies and tools discussed here and here.
