What Are VAEs and GANs? A Simple Breakdown

When it comes to generating new data with deep learning, two of the most exciting approaches are Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs).

These models belong to the class of deep generative models, which are designed to learn the underlying patterns in data and create new, similar instances.

While VAEs and GANs might seem like two sides of the same coin, their architectures, training methods, and the types of outputs they generate differ significantly. VAEs focus on learning a smooth latent space and are useful for generating data with some randomness but in a controlled way. GANs, on the other hand, are a competition between two networks, resulting in incredibly realistic outputs, sometimes so good they’re indistinguishable from real data.

Let’s dive deeper into their mechanics and see where each one shines.

The Purpose of Generative Models in AI

Before we explore how VAEs and GANs work, it’s important to understand the purpose of generative models in AI. Generative models are like creative engines—unlike models that just predict or classify data, these learn the patterns and structure of data to generate entirely new samples from scratch.

Think of tasks like creating new artwork, synthesizing human faces, or filling in missing parts of an image—these are the kinds of problems where generative models thrive. They help machines understand the essence of data, allowing them to produce original content that follows similar rules.

Both VAEs and GANs have earned their place as powerhouses in this field. Yet, they each go about the process in very different ways.

How Variational Autoencoders (VAEs) Work: Strengths and Weaknesses

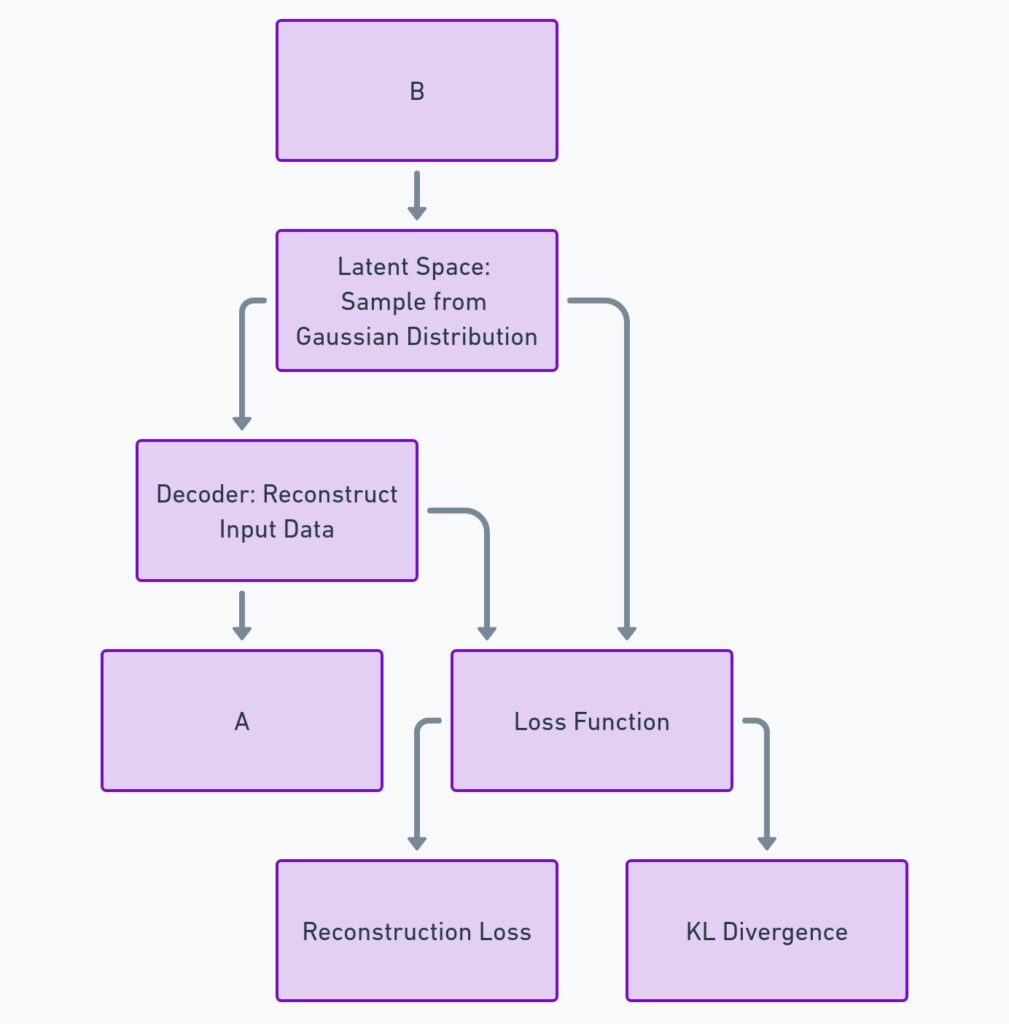

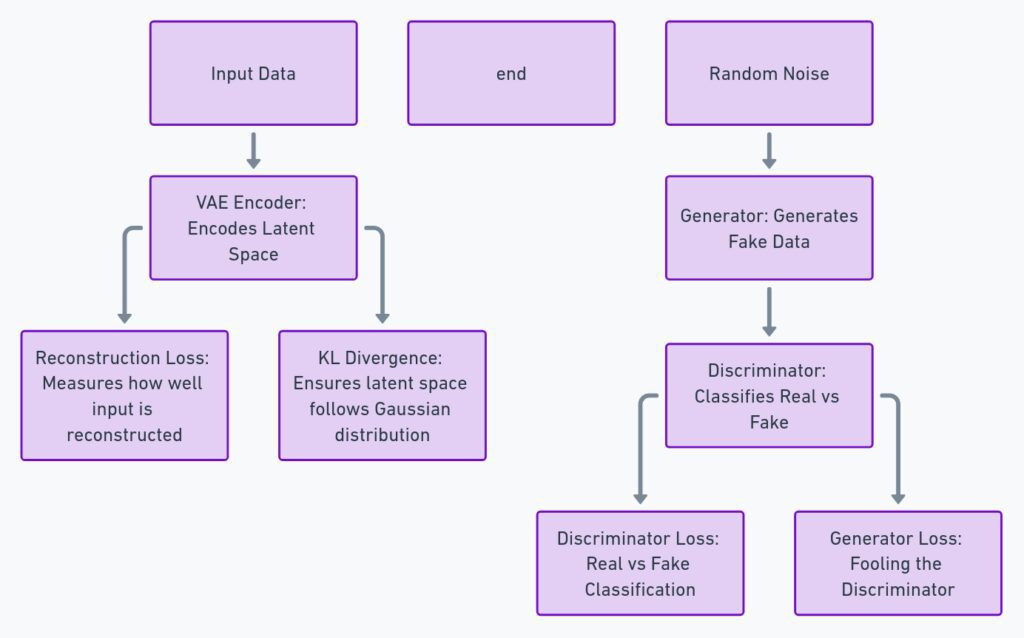

At their core, VAEs are built around the idea of learning a compressed latent space where data can be sampled from and reconstructed. VAEs consist of two main parts: an encoder and a decoder. The encoder takes the input data and compresses it into a lower-dimensional latent space, while the decoder tries to reconstruct the original data from that compressed form.

The unique aspect of VAEs is that they introduce randomness in the form of a probabilistic latent space. Instead of mapping data to a single point, VAEs map it to a distribution, meaning that each data point is represented by a range of possibilities. This makes them excellent for generating diverse and controlled outputs, which is key in tasks like image generation and data augmentation.
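To make the "distribution instead of a point" idea concrete, here is a minimal sketch in plain Python. It assumes the encoder outputs a mean and a log-variance per latent dimension (a common convention, not something stated above), and the values of `mu` and `log_var` are made up for illustration.

```python
import math
import random

def sample_latent(mu, log_var, rng=random):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    z = []
    for m, lv in zip(mu, log_var):
        sigma = math.exp(0.5 * lv)   # log-variance -> standard deviation
        eps = rng.gauss(0.0, 1.0)    # noise drawn independently of the encoder output
        z.append(m + sigma * eps)
    return z

# Hypothetical encoder output for one input: a 2-D Gaussian, not a single point.
mu = [0.5, -1.0]
log_var = [-2.0, 0.0]

rng = random.Random(0)  # seeded so the draws are repeatable
samples = [sample_latent(mu, log_var, rng) for _ in range(3)]
```

Each call returns a different latent vector for the same input, which is where the diversity in generated outputs comes from. Writing the sample as `mu + sigma * eps` is usually called the reparameterization trick.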

However, VAEs often produce blurrier images than GANs, especially when dealing with high-dimensional data like photos or videos. A common explanation is that the reconstruction loss is usually computed pixel by pixel, so when several outputs are plausible the decoder is rewarded for averaging them. Combined with the focus on a smooth representation of the data, this can come at the expense of fine details in the output.

How Generative Adversarial Networks (GANs) Work: Pros and Cons

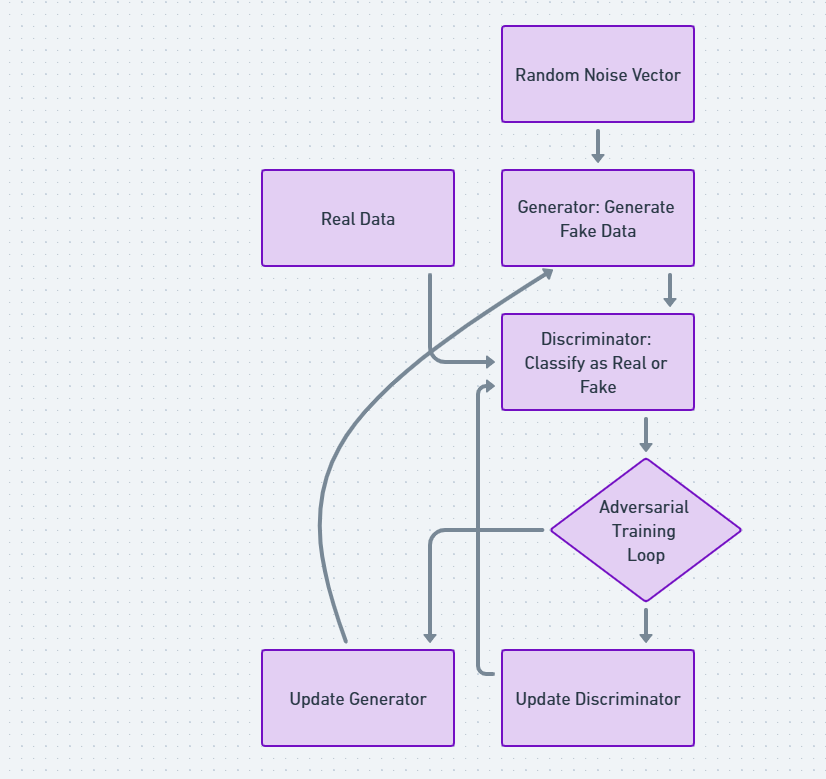

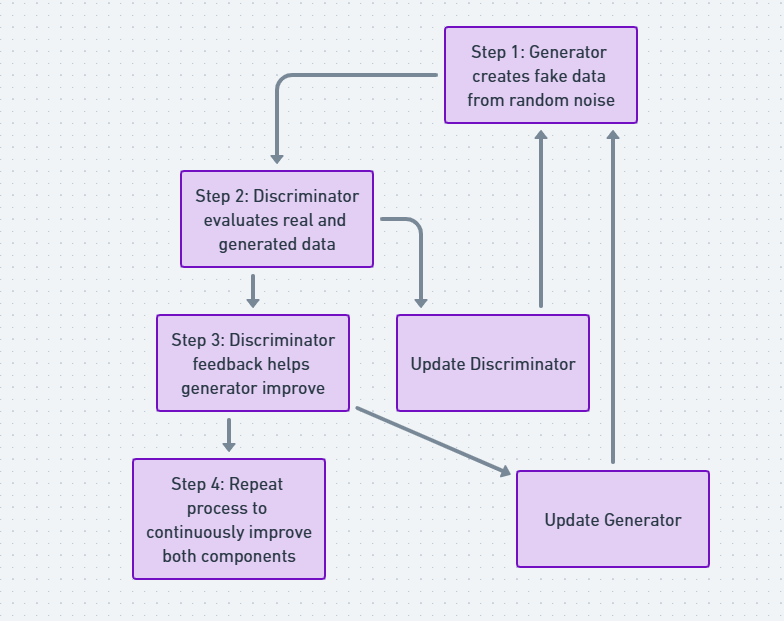

GANs take a radically different approach. Instead of using an encoder-decoder structure like VAEs, GANs rely on two neural networks—the generator and the discriminator—locked in a game with one another. The generator tries to create data that’s as close to the real thing as possible, while the discriminator’s job is to distinguish between real and generated data.

The beauty of GANs lies in this adversarial setup. The generator is constantly improving, trying to “fool” the discriminator, which leads to increasingly realistic outputs. Over time, the generator produces data that can be almost indistinguishable from real data—whether it’s images, text, or even music.
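Written out, the game described above is usually stated as the minimax objective from the original GAN paper (Goodfellow et al., 2014). Here $D(x)$ is the discriminator’s estimated probability that $x$ is real and $G(z)$ is the generator’s output for a noise vector $z$. The symbols $p_{\text{data}}$ and $p_z$ (the data and noise distributions) are notation introduced for this sketch, not taken from the text above.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes $V$ up by scoring real samples near 1 and generated ones near 0. The generator pushes it down by making $D(G(z))$ larger.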

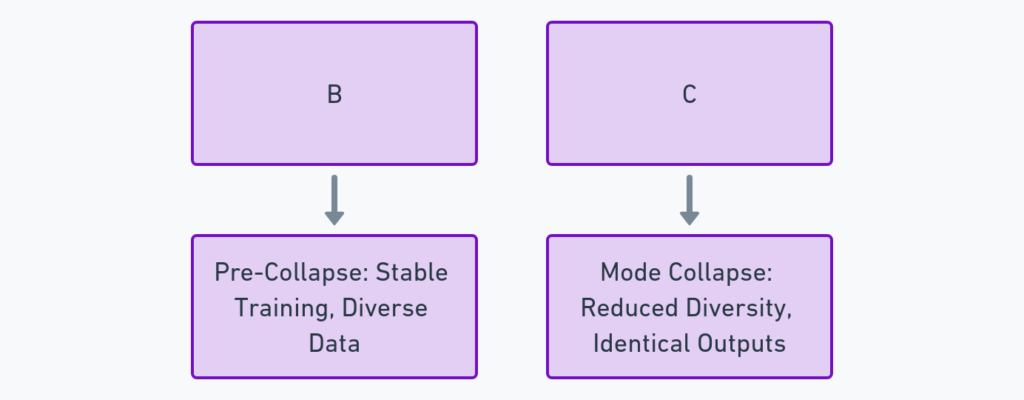

However, GANs are known to be tricky to train. The delicate balance between the generator and discriminator can easily break down, resulting in poor outputs or in mode collapse, where the generator produces only a narrow variety of samples. Despite these challenges, GANs are celebrated for their ability to create stunningly high-resolution images and photo-realistic content.

VAE vs GAN: Key Differences in Training and Architecture

The training process for VAEs and GANs highlights their fundamental differences. VAEs are trained by minimizing the sum of a reconstruction loss and a KL divergence term. The reconstruction loss pushes decoded outputs to match the inputs, while the KL term keeps each encoded distribution close to a chosen prior (typically a standard Gaussian), which is what makes the latent space well-structured and easy to sample from. This approach allows VAEs to generalize well but can sometimes result in less sharp or detailed outputs.
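As a rough illustration of that two-part loss, the sketch below computes both terms for a single example in plain Python. It assumes a diagonal Gaussian encoder output, a standard normal prior, and squared error as the reconstruction loss. Those are common choices but still assumptions here, and the function names are illustrative.

```python
import math

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))

def reconstruction_loss(x, x_hat):
    """Sum of squared errors between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def vae_loss(x, x_hat, mu, log_var):
    """Total loss for one example: reconstruction term plus KL term."""
    return reconstruction_loss(x, x_hat) + kl_to_standard_normal(mu, log_var)

# Made-up numbers: a perfect reconstruction whose latent mean is off the prior.
loss = vae_loss(x=[1.0, 2.0], x_hat=[1.0, 2.0], mu=[1.0], log_var=[0.0])
```

In this example the reconstruction term is zero, so the whole loss comes from the KL penalty for placing the latent mean away from the origin.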

On the other hand, GANs use a completely different technique. The training is adversarial, meaning the generator is trained to outsmart the discriminator, and the discriminator is trained to spot fake data. This dynamic leads to better visual quality, especially in terms of fine details. However, training GANs is more fragile, often requiring careful hyperparameter tuning and model architecture adjustments to avoid instability.
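To make the two training signals concrete, here is a minimal sketch in plain Python. It assumes the discriminator outputs a probability that a sample is real, and it uses the non-saturating generator loss from the original GAN paper rather than the literal minimax form. The function names are illustrative and do not come from any library.

```python
import math

def bce(p, target):
    """Binary cross-entropy for one predicted probability p."""
    p = min(max(p, 1e-7), 1 - 1e-7)  # clip to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored 1 and generated samples scored 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """The generator wants its samples scored as real (non-saturating form)."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Made-up discriminator scores early in training, when fakes are easy to spot.
d_loss = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
g_loss = generator_loss(d_fake=[0.1, 0.2])
```

With these scores the discriminator loss is low and the generator loss is high. As the generator improves, the scores on generated samples rise and the two losses move in opposite directions, which is the tension that makes training fragile.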

In short, VAEs prioritize a structured, smooth latent space, while GANs focus on producing hyper-realistic samples at the cost of training complexity.

Which One Produces Better Outputs? Visual Quality Compared

When it comes to visual quality, GANs typically take the crown. Thanks to their adversarial training process, GANs generate images with incredible sharpness and detail, often to the point where they’re nearly indistinguishable from real-world images. This is especially noticeable in applications like high-resolution image generation and deepfake creation, where the realism of the output is paramount.

VAEs, while powerful, struggle in this department. The probabilistic nature of their latent space can introduce blurriness, especially in more complex data. This is because VAEs must balance reconstruction accuracy against keeping the latent space close to a well-defined distribution (typically Gaussian), which leads to slightly more averaged or smoothed outputs.

However, it’s not always a simple case of one being better than the other. VAEs are better suited for scenarios where the emphasis is on generating diverse samples that capture the broader characteristics of the dataset, rather than fine details. For instance, if you need to explore a wide range of variations in your generated data, VAEs can provide more flexibility in navigating the latent space.

Exploring the Latent Space: VAEs vs GANs

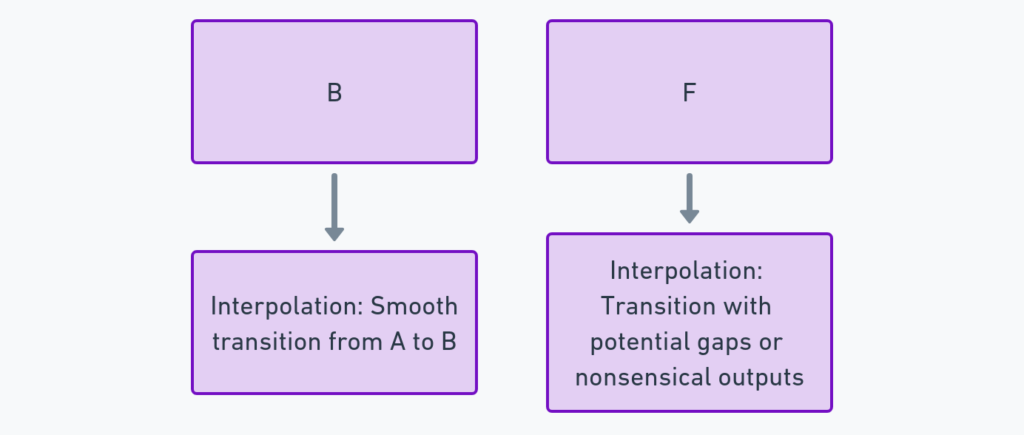

One of the fascinating aspects of deep generative models is the concept of a latent space—an abstract, lower-dimensional representation of the data from which new samples can be generated. This is where VAEs truly shine. The latent space in a VAE is smooth and continuous, meaning that you can interpolate between two points in the latent space and generate a meaningful sequence of outputs that blend the characteristics of both.

For example, with a VAE, you can gradually morph between two different faces in a way that feels natural and controlled. This smoothness makes VAEs incredibly useful for applications like image interpolation and data augmentation, where you want to explore variations in the data systematically.
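The morphing idea can be sketched as a straight-line walk between two latent vectors. The code below is a minimal illustration in plain Python: `z_face_a` and `z_face_b` are made-up 2-D latent codes, and a trained decoder (not shown, and assumed to exist) would turn each point on the path into an image.

```python
def lerp(z_a, z_b, t):
    """Linear interpolation between two latent vectors, with t in [0, 1]."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]

def interpolation_path(z_a, z_b, steps):
    """Evenly spaced latent vectors from z_a to z_b, endpoints included (steps >= 2)."""
    return [lerp(z_a, z_b, i / (steps - 1)) for i in range(steps)]

# Hypothetical latent codes for two faces.
z_face_a = [0.0, 2.0]
z_face_b = [4.0, -2.0]

path = interpolation_path(z_face_a, z_face_b, steps=5)
# Each vector in `path` would be passed to the decoder to render one frame.
```

Because the latent space is encouraged to be smooth, neighbouring points on this path are expected to decode to similar images, which is what makes the transition look gradual.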

In contrast, the latent space of a GAN is not explicitly regularized in the same way. A standard GAN has no encoder, so there is no direct way to map a real sample to its latent code, and navigating the latent space can be less intuitive. Smooth transitions between generated samples are not guaranteed, and some regions of the latent space may lead to unrealistic outputs. GANs are optimized for realism rather than for exploring the data’s variability, making them better suited to high-quality generation than to latent space manipulation.

Challenges in Training VAEs and GANs

Training deep generative models is not without its challenges, and both VAEs and GANs come with their own set of hurdles.

For VAEs, one of the main issues is balancing the trade-off between the reconstruction loss and the KL divergence term, which pulls the latent distribution toward a Gaussian prior. This balance can be tricky: if the KL term dominates, reconstructions become overly smooth (poor image quality), and if it is too weak, the latent space ends up poorly structured (leading to meaningless interpolations). Even so, VAE training is generally more stable and less prone to catastrophic failures than GAN training.
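One common way to manage this balance is to put an explicit weight on the KL term and ramp it up during training. The sketch below is an assumption on my part rather than something the discussion above prescribes: the weight `beta` follows the style of beta-VAE, the linear ramp is usually called KL warm-up or annealing, and the names `kl_weight` and `warmup_steps` are illustrative.

```python
def kl_weight(step, warmup_steps, beta_max=1.0):
    """Linearly ramp the KL weight from 0 to beta_max over warmup_steps."""
    if warmup_steps <= 0:
        return beta_max
    return beta_max * min(1.0, step / warmup_steps)

def weighted_vae_loss(recon, kl, beta):
    """Reconstruction term plus a weighted KL term."""
    return recon + beta * kl

# Made-up loss values at three points in training.
schedule = [kl_weight(step, warmup_steps=100) for step in (0, 50, 200)]
loss_mid = weighted_vae_loss(recon=2.0, kl=4.0, beta=schedule[1])
```

A small weight early on lets the model learn to reconstruct first. Raising it later tightens the structure of the latent space.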

GANs, on the other hand, are infamous for being difficult to train. The adversarial nature of their architecture means you’re training two models (the generator and discriminator) simultaneously, and if one becomes too powerful, the other can stop learning anything useful. Mode collapse is a common issue, where the generator only produces a small variety of outputs, losing the ability to create diverse samples. Instability during training often leads to long periods of trial and error, requiring careful tuning of hyperparameters like learning rates and batch sizes.
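A crude way to watch for mode collapse is to track how spread out a batch of generated samples is. The sketch below uses the mean pairwise Euclidean distance as a diversity score. This is a rough heuristic chosen for illustration, not an established evaluation metric, and the sample values are made up.

```python
import math
from itertools import combinations

def mean_pairwise_distance(samples):
    """Average Euclidean distance over all pairs of samples (0.0 if fewer than two)."""
    pairs = list(combinations(samples, 2))
    if not pairs:
        return 0.0
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)

# Made-up generator outputs: one batch stuck on a single point, one varied batch.
collapsed_batch = [[1.0, 1.0]] * 4
varied_batch = [[0.0, 0.0], [3.0, 4.0]]

collapsed_score = mean_pairwise_distance(collapsed_batch)
varied_score = mean_pairwise_distance(varied_batch)
```

A score that drifts toward zero over training suggests the generator is producing nearly identical samples. A healthy score alone does not prove that every mode of the data is covered.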

Overall, while VAEs tend to be more stable and predictable in training, GANs require more finesse but can produce far more realistic results if trained correctly.

VAEs for Robustness: Where They Excel

While VAEs may not produce the most visually stunning results, they excel in areas that require robustness and interpretability. The structured latent space of VAEs ensures that the model has a good understanding of the underlying data distribution, which can be useful for tasks like anomaly detection, data compression, and feature extraction.

For instance, in medical imaging, VAEs are often used to generate variations of healthy tissues, which can then be compared with actual scans to identify abnormalities. The controlled latent space also makes VAEs great for data augmentation, as they allow for easy generation of new samples that are similar to but distinct from the original data, helping improve the performance of machine learning models in low-data environments.

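Additionally, VAEs offer a level of interpretability that GANs typically lack out of the box. Because the encoder maps each input to a distribution over latent variables, you can inspect and vary individual latent dimensions to explore factors of variation in the data, although those dimensions are not guaranteed to line up with human-meaningful features. This makes VAEs more suitable for research where insight into the data structure is key.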

GANs for Realism: Where They Outshine

When realism is the top priority, GANs are hard to beat. The generator-discriminator framework pushes GANs to create samples that are strikingly realistic, making them a popular choice for applications like image synthesis, video generation, and AI-assisted creative work.

For example, deepfakes, which have gained notoriety as highly realistic fake videos, often rely on the image quality that GANs provide, although many face-swapping pipelines are built on autoencoders instead. GANs are also frequently used in the creation of photo-realistic game assets, virtual environments, and even super-resolution tasks, where they can upscale low-resolution images to high definition.

However, this level of realism comes with a trade-off in training complexity. It’s not uncommon for GANs to require weeks of tuning to achieve their results, but the payoff can be worth it in scenarios where output quality is paramount.

Synergistic Approaches: Combining VAEs and GANs

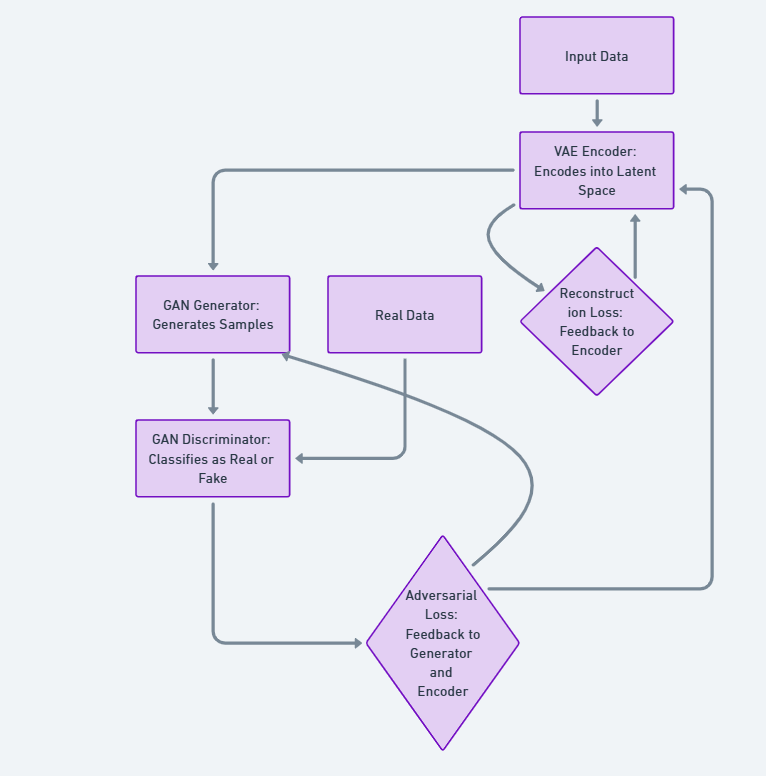

Despite their differences, VAEs and GANs don’t have to be viewed as competitors. In fact, they can complement each other when used together, creating models that leverage the best of both worlds—the stability and structured latent space of VAEs and the high-quality, realistic outputs of GANs. This has led to the development of hybrid models, where researchers combine elements of VAEs and GANs to achieve superior performance.

One such hybrid model is the VAE-GAN, which integrates a VAE’s encoder-decoder architecture with a GAN’s adversarial loss. Here’s how it works: the VAE handles the encoding of the data into a meaningful latent space and generates rough samples, while the GAN’s discriminator steps in to refine these outputs, improving their realism. The result is a model that can produce high-quality samples like a GAN, but with the robust latent structure of a VAE.

The key advantage of this synergy is that the VAE component ensures the model learns a smooth latent space, while the GAN component encourages the generation of realistic samples. This can be particularly useful in applications like semi-supervised learning, where having both strong data reconstruction and generation capabilities is important.
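One way to picture how the pieces fit together is to look at which loss terms each network sees. The sketch below loosely follows the split described by Larsen et al., but the sign conventions, the weight `gamma`, and the function name are assumptions made for illustration. Here `gan_loss` stands for the discriminator’s classification loss, so lower means the discriminator is doing better.

```python
def vae_gan_losses(kl, recon, gan_loss, gamma=1.0):
    """Combine scalar loss terms into one loss per network.

    kl       -- KL divergence term from the VAE encoder
    recon    -- reconstruction error (Larsen et al. measure it in discriminator features)
    gan_loss -- discriminator's classification loss on real vs generated samples
    gamma    -- hypothetical weight trading reconstruction against realism
    """
    encoder_loss = kl + recon                  # keep codes near the prior and decodable
    decoder_loss = gamma * recon - gan_loss    # reconstruct well while fooling the discriminator
    discriminator_loss = gan_loss              # separate real from generated samples
    return encoder_loss, decoder_loss, discriminator_loss

# Made-up loss values for a single training step.
enc, dec, dis = vae_gan_losses(kl=1.0, recon=2.0, gan_loss=0.5, gamma=2.0)
```

The decoder is the only network pulled in two directions, toward faithful reconstruction and toward realism, which is why a weight like `gamma` is typically needed.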

VAE-GAN Hybrids: The Best of Both Worlds

The VAE-GAN hybrid model takes the best features of both approaches, allowing for controlled generation of high-quality data. By using a VAE encoder to generate latent representations and a GAN discriminator to ensure realistic outputs, this hybrid offers the stability and interpretability of VAEs with the realism of GANs.

One of the most notable benefits of this approach is that it allows for easier interpolation between data points, thanks to the VAE’s structured latent space, while still producing sharper, more visually pleasing outputs. In fields like image generation, this balance can lead to more natural, smooth transitions between images without sacrificing clarity.

Additionally, VAE-GANs can mitigate some of the training instability that plagues standalone GANs, because the reconstruction objective gives the generator a steady training signal. They still train a discriminator adversarially, however, so some tuning is usually needed.

Applications of VAEs in Real-World AI

VAEs have found success in a variety of practical applications where robustness and structured latent spaces are more important than sheer realism. One of their most common uses is in anomaly detection, particularly in medical imaging. By training a VAE on healthy data, the model learns to generate typical patterns. When it encounters data that deviates significantly from these patterns (like a tumor or abnormal tissue), the reconstruction error signals a potential anomaly.
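The reconstruction-error check can be sketched in a few lines of plain Python. The example assumes you already have each input and its reconstruction from a trained VAE. The numbers and the threshold are made up, and in practice the threshold would be chosen from errors observed on held-out healthy data.

```python
def reconstruction_error(x, x_hat):
    """Mean squared error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

def flag_anomalies(inputs, reconstructions, threshold):
    """Return True for each input whose reconstruction error exceeds the threshold."""
    return [
        reconstruction_error(x, x_hat) > threshold
        for x, x_hat in zip(inputs, reconstructions)
    ]

# Made-up data: the first input is reconstructed well, the second is not.
inputs = [[0.1, 0.2, 0.3], [0.9, 0.1, 0.8]]
reconstructions = [[0.1, 0.2, 0.3], [0.2, 0.2, 0.2]]

flags = flag_anomalies(inputs, reconstructions, threshold=0.05)
```

The second input is flagged because the model, having only seen typical patterns, cannot reproduce it closely.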

VAEs are also widely used in data compression. By compressing data into a compact latent space, they allow for efficient storage and transmission, which can be crucial for high-dimensional data like videos or 3D models.

In the realm of generative design, VAEs are applied to create new design variations, whether in fashion, architecture, or industrial design, offering creative ways to explore design possibilities while maintaining some level of control over the outcome.

Common GAN Use Cases in Deep Learning

GANs, with their ability to produce incredibly realistic outputs, are a favorite in areas that require high-quality image and video generation. Some of their most prominent use cases include:

- Deepfakes: GANs are one of the main techniques behind deepfake technology, where they generate hyper-realistic images and videos of people who don’t exist or convincingly swap faces in video footage.

- Super-Resolution: GANs are often used to enhance the quality of low-resolution images, making them suitable for applications like satellite imagery enhancement or medical imaging where high detail is critical.

- Art and Creative AI: Artists and designers use GANs to create novel artworks, designs, and even music. Tools like Artbreeder use GANs to let users combine elements of different images into new, visually striking artworks.

- Virtual Worlds: GANs are applied in the creation of synthetic data for training self-driving cars or video game environments, where they generate realistic virtual scenes that mimic the real world.

When to Choose VAEs Over GANs

While GANs are often the first choice when realism is the main goal, VAEs shine in cases where you need more control and interpretability. For example:

- Anomaly Detection: If the task involves spotting outliers or abnormalities in data (like in cybersecurity or healthcare), VAEs offer a more reliable way to model normal data distributions and flag deviations.

- Data Augmentation: For generating diverse datasets in machine learning, VAEs allow for the creation of variations in the data that follow a smooth and continuous latent space, making it easier to produce meaningful variations.

- Low-Resolution Data Generation: In situations where sharpness is less important than diversity or exploration (e.g., generating new product designs or exploring latent space interpolation), VAEs provide a great balance of flexibility and control.

When GANs Are a Better Fit

GANs are the go-to choice when the primary concern is output quality and realism. Some of the scenarios where GANs truly shine include:

- High-Resolution Image Generation: For tasks like creating photo-realistic images or deepfakes, GANs produce far superior results in terms of clarity and sharpness.

- Super-Resolution and Image Enhancement: GANs excel at taking low-quality images and transforming them into high-resolution versions, which can be critical in fields like microscopy or satellite imagery.

- Creative Applications: When realism and creativity merge, as in the case of AI-generated art, GANs enable the creation of vivid, original content that can inspire or augment human creativity.

The Future of Generative Models: VAEs, GANs, and Beyond

As deep generative models continue to evolve, the synergy between VAEs and GANs represents just the beginning of a larger trend in AI innovation. Both VAEs and GANs have their own strengths, but newer hybrid models and advancements are pushing the boundaries of what generative models can do.

For instance, Diffusion Models and Normalizing Flows offer new ways to generate high-quality, complex data while preserving some of the benefits seen in VAEs and GANs. Diffusion models, for example, learn to reverse a gradual noising process, turning random noise into meaningful patterns step by step, and have shown promise in generating outputs that rival or exceed those of GANs in detail and diversity.
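To give a feel for the noise side of a diffusion model, here is a minimal sketch of the forward noising step in plain Python. It assumes the formulation used in DDPM-style models, where a single number (called `alpha_bar` here, an illustrative name) controls how much of the original signal survives. The learned reverse process, which is the hard part, is not shown.

```python
import math
import random

def add_noise(x0, alpha_bar, rng=random):
    """Forward noising: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps."""
    keep = math.sqrt(alpha_bar)               # fraction of the signal that survives
    noise_scale = math.sqrt(1.0 - alpha_bar)  # amount of Gaussian noise mixed in
    return [keep * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]

# Made-up 2-D data point at three noise levels, from clean to almost pure noise.
rng = random.Random(0)
x0 = [1.0, -2.0]
noisy_versions = [add_noise(x0, alpha_bar, rng) for alpha_bar in (1.0, 0.5, 0.01)]
```

With `alpha_bar` at 1.0 the data is untouched, and as it approaches 0 the result is close to pure Gaussian noise. Generation runs this process in reverse with a trained network.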

Combining Multiple Models for Specialized Tasks

Looking forward, there’s a strong case for combining multiple types of generative models to solve specialized tasks. For example, in fields like drug discovery or scientific research, it’s crucial not only to generate realistic samples but also to have a deep understanding of the underlying data distribution. Combining the strengths of VAEs, GANs, and other models could lead to the next breakthrough in generating not only visual content but also more meaningful and functional data.

Ethical Considerations in the Era of Advanced Generative Models

While the technical possibilities are exciting, the future of generative models like VAEs and GANs also brings up ethical questions. The rise of deepfakes and AI-generated content has already sparked debates around misuse, privacy, and ownership of digital creations. As these models become more powerful, it’s crucial for researchers and practitioners to develop responsible practices and safeguards to ensure that these tools are used ethically.

In conclusion, both VAEs and GANs offer incredible possibilities for generating data, each with its own strengths and limitations. By exploring their synergies and looking to the future of generative modeling, we can unlock even more powerful and creative applications for AI-generated content.

FAQs: VAEs vs. GANs – A Synergistic Approach to Deep Generative Models

1. What are VAEs and GANs?

VAEs (Variational Autoencoders) and GANs (Generative Adversarial Networks) are both types of deep generative models. VAEs use an encoder-decoder structure to compress data into a latent space, then reconstruct it with added randomness. GANs use two networks, a generator and a discriminator, in an adversarial game to create highly realistic outputs.

2. How do VAEs differ from GANs?

VAEs focus on learning a smooth latent space and generate outputs that resemble the original data but with a focus on diversity rather than perfect realism. GANs, on the other hand, excel at producing realistic outputs, especially in terms of visual detail, but can be harder to train and may suffer from mode collapse (producing less variety in the generated samples).

3. Which model is better for generating realistic images?

GANs are typically better for generating high-quality, realistic images due to their adversarial training process, which encourages the generator to produce outputs that are indistinguishable from real data. VAEs often produce blurrier or smoother images in comparison.

4. Are VAEs easier to train than GANs?

Yes, VAEs are generally easier to train and more stable. They don’t rely on the delicate balance between two networks like GANs do, making them less prone to issues like mode collapse. However, VAEs may not reach the same level of output quality as GANs.

5. Can VAEs and GANs be used together?

Yes, combining VAEs and GANs into hybrid models, such as VAE-GANs, is a common approach to leverage the strengths of both. This allows you to benefit from the structured latent space of VAEs while also achieving the high-quality outputs of GANs.

6. What are the main advantages of VAEs?

VAEs are great for tasks where a well-structured latent space is important, such as in data exploration, anomaly detection, or generating diverse data samples. They offer interpretability and robustness in their outputs, even if they don’t achieve the same level of detail as GANs.

7. What are the challenges of training GANs?

Training GANs can be challenging due to their adversarial nature. Balancing the generator and discriminator is difficult, and if one becomes too strong, the other fails to improve. This can lead to mode collapse, where the generator produces limited varieties of data, or training instability.

8. When should I use VAEs over GANs?

Use VAEs when:

- You need interpretable latent spaces.

- You want to explore the variability within the data.

- You’re focusing on data reconstruction, anomaly detection, or data augmentation.

- You prefer more stable and easier-to-train models.

9. When should I use GANs over VAEs?

Use GANs when:

- You need highly realistic outputs, such as for deepfakes, image synthesis, or super-resolution.

- The visual quality of the generated data is more important than diversity.

- You are working on tasks requiring photorealism, such as game design, creative arts, or video generation.

10. What are the applications of VAE-GAN hybrid models?

VAE-GAN hybrids combine the advantages of both models and are used in:

- Image interpolation: Generating smooth transitions between different images.

- High-quality data generation: Achieving better control over the latent space while maintaining output realism.

- Data exploration: Understanding complex datasets by generating variations within the learned latent space.

11. What are the main applications of VAEs?

VAEs are widely used in:

- Anomaly detection (e.g., in medical imaging or cybersecurity).

- Data augmentation (creating new samples from existing data).

- Latent space exploration (interpolating between different data points).

- Feature extraction (compressing data into meaningful representations).

12. What are common use cases for GANs?

GANs are popular for:

- Deepfakes and other video manipulations.

- Super-resolution: Upscaling low-resolution images to high-resolution.

- AI-driven art: Generating novel artworks or media.

- Photo-realistic image generation for virtual environments, gaming, and training data for AI systems.

13. What are the future directions for VAEs and GANs?

Future advancements involve combining VAEs and GANs with other emerging models, such as Diffusion Models and Normalizing Flows, to improve both output quality and control. Additionally, ethical considerations will play an important role in shaping the responsible development and use of these models in the real world.

Useful Resources for Learning About VAEs, GANs, and Hybrid Models

- Original Research Papers:

- VAE Paper: Auto-Encoding Variational Bayes by Kingma and Welling (2013)

- GAN Paper: Generative Adversarial Nets by Goodfellow et al. (2014)

- VAE-GAN Hybrid Paper:

- Autoencoding beyond pixels using a learned similarity metric by Larsen et al. (2016)

- This paper introduces the VAE-GAN hybrid approach.

- Deep Learning with Python by François Chollet:

- This book provides practical insights into VAEs, GANs, and other generative models, with Python code examples using Keras.

- Generative Deep Learning by David Foster:

- A great resource for learning about both VAEs and GANs with practical examples.

- GANs in Action by Jakub Langr and Vladimir Bok:

- This book offers a thorough guide to GANs, including training tips and tricks for stability and applications like image generation.

- PyTorch VAE Tutorial:

- A step-by-step tutorial that walks through implementing a Variational Autoencoder in PyTorch.

- Keras GAN Tutorial:

- A beginner-friendly tutorial on how to build GANs in Keras, including code for generating images.

- VAEs vs. GANs by Towards Data Science:

- A blog post that compares VAEs and GANs, explaining their strengths, weaknesses, and differences in applications.

- Distill.pub’s Interactive Guide to GANs:

- This interactive article provides a visual and intuitive explanation of GANs and their training process.

- TensorFlow GAN Tutorial:

- TensorFlow’s official tutorial on implementing GANs for image generation, complete with code examples.

- VAE and GAN Applications in Bioinformatics:

- This research paper explores how VAEs and GANs are applied in bioinformatics for tasks like drug discovery and protein folding.

- YouTube Tutorials:

- 3Blue1Brown’s Neural Networks: An excellent visual explainer of how neural networks work, which is useful background before studying VAEs and GANs.

- deeplearning.ai’s Generative Models Course: A comprehensive course that dives into VAEs, GANs, and more.

- VAEs and GANs in Healthcare by Nature:

- An article from Nature discussing how these generative models are revolutionizing healthcare.

- Google Colab Notebooks for Hands-On Practice:

- VAE Notebook: Explore and play with a pre-built VAE model in Google Colab.

- GAN Notebook: A fully interactive GAN notebook for generating images using TensorFlow.