Understanding the Basics of Web Scraping

What is Web Scraping?

Web scraping involves extracting data from websites for analysis or other applications. Think of it as using code to “read” a webpage and save its content in a structured format, like a spreadsheet.

For AI projects, this can mean collecting large datasets to train machine learning models.

- Web scraping is crucial for gathering domain-specific datasets when no public datasets are available.

- It’s particularly useful for fields like healthcare, finance, and retail, where tailored information is key.

Key Tools for Scraping

Some tools and languages excel in web scraping:

- Beautiful Soup: A Python library that parses HTML and XML.

- Scrapy: A more advanced Python framework for large-scale scraping.

- Selenium: Best for scraping JavaScript-heavy pages.

All three tools are flexible, but they differ in complexity and in the scale of project they suit best.
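To show what "parsing" means in practice, here is a minimal sketch that uses Python’s built-in html.parser module instead of Beautiful Soup, so it runs without third-party packages. The HTML snippet and the class names product, name, and price are invented for illustration. Real pages will need their own selectors.

```python
from html.parser import HTMLParser

class ProductParser(HTMLParser):
    """Collects the text of elements whose class is 'name' or 'price'."""

    def __init__(self):
        super().__init__()
        self.current_field = None  # field whose text we are currently inside
        self.records = []          # one dict per product block

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if cls == "product":
            self.records.append({})  # a new product starts
        elif cls in ("name", "price"):
            self.current_field = cls

    def handle_endtag(self, tag):
        # Any closing tag ends the field (enough for this flat, simple markup).
        self.current_field = None

    def handle_data(self, data):
        if self.current_field and self.records:
            self.records[-1][self.current_field] = data.strip()

html = """
<div class="product"><span class="name">Kettle</span><span class="price">24.99</span></div>
<div class="product"><span class="name">Toaster</span><span class="price">39.50</span></div>
"""

parser = ProductParser()
parser.feed(html)
print(parser.records)
# [{'name': 'Kettle', 'price': '24.99'}, {'name': 'Toaster', 'price': '39.50'}]
```

Beautiful Soup wraps the same idea in a friendlier API: with it, you would select the .product elements with soup.select() and read each child’s text with get_text(), instead of tracking parser state by hand.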

Benefits of Scraping for AI Projects

Web scraping provides real-world data, which can make AI models more robust and accurate. For example:

- Training chatbots with live customer support queries.

- Enhancing recommendation engines with user review data.

Without authentic, domain-relevant data, AI models risk performing poorly on the real-world tasks they are built for.

Legal and Ethical Guidelines for Data Collection

Copyright and Terms of Service Considerations

Most websites set rules for how their data may be used, usually in their Terms of Service (ToS). Violating these terms can result in legal action, so always review and respect them before scraping.

- Use public or open datasets when possible.

- For private content, consider requesting explicit permission.

Avoiding Unethical Practices in Scraping

Scraping isn’t inherently unethical, but misuse can tarnish its reputation:

- Don’t scrape personal or sensitive data.

- Respect robots.txt files, which tell automated crawlers which parts of a site they may access.
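Python’s standard library can check robots.txt rules before you request a page. This sketch parses an inline example file rather than downloading one; the rules and the MyResearchBot user-agent name are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content. Against a live site you would instead call
# rp.set_url("https://example.com/robots.txt") followed by rp.read().
robots_txt = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
""".splitlines()

rp = RobotFileParser()
rp.parse(robots_txt)

agent = "MyResearchBot"  # hypothetical user-agent for your scraper
print(rp.can_fetch(agent, "https://example.com/products"))   # True
print(rp.can_fetch(agent, "https://example.com/private/a"))  # False
print(rp.crawl_delay(agent))                                 # 5 (seconds between requests)
```

Checking can_fetch() before every request, and waiting crawl_delay() seconds between requests when a site specifies it, is a cheap way to stay within a site’s stated wishes.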

Protecting User Data and Privacy

AI systems often need large amounts of user data. Always ensure compliance with privacy laws such as the GDPR or CCPA, and encrypt and anonymize personal data where needed.
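One common way to pseudonymize identifiers such as email addresses is a keyed hash (HMAC). It maps the same input to the same token, so records can still be linked, without storing the original value. This is a minimal sketch with a placeholder key. Under the GDPR, pseudonymized data still counts as personal data, so this complements encryption and access controls rather than replacing them.

```python
import hashlib
import hmac

# Placeholder secret for illustration only. Keep the real key outside the
# dataset (e.g. in a secrets manager) so tokens cannot be reversed by guessing.
SECRET_KEY = b"replace-with-a-long-random-secret"

def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC), hex-encoded."""
    normalized = value.strip().lower().encode("utf-8")  # same person -> same token
    return hmac.new(SECRET_KEY, normalized, hashlib.sha256).hexdigest()

record = {"user": "Alice@example.com", "review": "Great product"}
safe_record = {**record, "user": pseudonymize(record["user"])}
print(safe_record["user"][:16], "...")
```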

Choosing the Right Tools for Your Domain

Python Libraries for Scraping

Python dominates the scraping world, largely thanks to its libraries:

- Beautiful Soup: Perfect for beginners.

- Scrapy: Ideal for experienced users handling complex tasks.

- Pandas: Helps clean and format scraped data.

No-Code Scraping Tools for Non-Developers

For non-developers, tools like Octoparse or ParseHub offer drag-and-drop interfaces that make scraping accessible without writing code.

Advanced Tools for Large-Scale Scraping

If you’re dealing with massive datasets, consider:

- Apache Nutch: A scalable web crawler.

- AWS Lambda: Run scraping jobs as serverless functions that scale with demand.

Structuring and Cleaning Your Data

Why Structured Data is Essential

Scraped data often arrives as messy text or unstructured tables. For AI models to use it effectively, standardized formats are critical:

- Convert HTML into JSON, CSV, or databases.

- Ensure field consistency across all data points.
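A minimal sketch of enforcing one schema and writing the result as JSON and CSV with the standard library. The field names and records are hypothetical, and the CSV goes to an in-memory buffer so the example has no side effects.

```python
import csv
import io
import json

# Records as they might come out of a parser; the second lacks a "rating".
records = [
    {"name": "Kettle", "price": "24.99", "rating": "4.5"},
    {"name": "Toaster", "price": "39.50"},
]

FIELDS = ["name", "price", "rating"]  # one fixed schema for every row

def normalize(rec):
    """Give every record every field (None if absent) and cast numbers."""
    out = {field: rec.get(field) for field in FIELDS}
    for field in ("price", "rating"):
        if out[field] is not None:
            out[field] = float(out[field])
    return out

clean = [normalize(r) for r in records]

json_text = json.dumps(clean, indent=2)  # missing values become null

buf = io.StringIO()  # for a real file: open("products.csv", "w", newline="")
writer = csv.DictWriter(buf, fieldnames=FIELDS)
writer.writeheader()
writer.writerows(clean)  # None is written as an empty cell
csv_text = buf.getvalue()
print(csv_text)
```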

Tools for Cleaning Raw Scraped Data

Popular tools for cleaning include:

- OpenRefine: Handles bulk data cleaning tasks.

- Python’s Pandas: Manages inconsistencies programmatically.

Handling Duplicates, Inconsistencies, and Missing Data

AI models thrive on clean data. Remove duplicates, standardize entries (e.g., date formats), and fill missing values with averages or placeholders. If some classes are much rarer than others, consider oversampling techniques to rebalance the data.
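The three cleaning steps above, sketched in plain Python on a few hypothetical review records. The two date formats are assumptions about the source, and mean imputation is only one option: it can distort distributions, so pick a strategy that suits your data.

```python
from datetime import datetime
from statistics import mean

raw = [
    {"id": 1, "date": "2024-03-01", "rating": 4.0},
    {"id": 1, "date": "2024-03-01", "rating": 4.0},   # exact duplicate
    {"id": 2, "date": "03/05/2024", "rating": None},  # US-style date, missing rating
    {"id": 3, "date": "2024-03-07", "rating": 2.0},
]

# Formats assumed to appear in this source; extend as you discover more.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")

def to_iso(date_str):
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(date_str, fmt).date().isoformat()
        except ValueError:
            continue
    return None  # unparseable: flag for review rather than guess

# 1. Remove exact duplicates while preserving order.
seen, deduped = set(), []
for row in raw:
    key = tuple(sorted(row.items()))
    if key not in seen:
        seen.add(key)
        deduped.append(row)

# 2. Standardize dates to ISO 8601 (YYYY-MM-DD).
for row in deduped:
    row["date"] = to_iso(row["date"])

# 3. Fill missing ratings with the mean of the observed ratings.
fill = mean(r["rating"] for r in deduped if r["rating"] is not None)
for row in deduped:
    if row["rating"] is None:
        row["rating"] = fill

print(deduped)
```

In Pandas the same steps map roughly onto drop_duplicates(), pd.to_datetime(), and fillna() with the column mean.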

Handling Complex Websites and APIs

Scraping JavaScript-Heavy Websites

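Modern websites often load content dynamically with JavaScript, so that content is missing from the raw HTML a traditional scraper downloads. Browser automation tools such as Selenium, or headless-browser libraries such as Puppeteer, render the page fully and can simulate real user interaction.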

Dealing with CAPTCHAs and Rate Limits

Websites protect themselves using CAPTCHAs and rate limiting. Solutions include:

- Using proxies or rotating IPs.

- Incorporating CAPTCHA solvers.

Alternatives to Scraping: Using APIs

When available, APIs provide a structured, sanctioned way to access data without breaching website policies. They’re usually faster, more reliable, and legally safer than scraping. Examples include Twitter’s (now X’s) API for tweets or the Google Maps API for location data.
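A sketch of calling a JSON API with the standard library. The endpoint https://api.example.com/v1/prices, its query parameters, the bearer-token header, and the response shape are all hypothetical. Real APIs such as Google Maps document their own URLs, authentication, and payloads.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

BASE_URL = "https://api.example.com/v1/prices"  # hypothetical endpoint

def build_url(symbol, limit=10):
    """Encode query parameters safely instead of concatenating strings."""
    return f"{BASE_URL}?{urlencode({'symbol': symbol, 'limit': limit})}"

def parse_response(body):
    """Turn the (assumed) JSON payload into (date, price) tuples."""
    data = json.loads(body)
    return [(item["date"], float(item["price"])) for item in data["results"]]

def fetch(symbol, api_key):
    """Network call; the auth header format is an assumption."""
    req = Request(build_url(symbol), headers={"Authorization": f"Bearer {api_key}"})
    with urlopen(req, timeout=10) as resp:
        return parse_response(resp.read().decode("utf-8"))

# Offline demonstration with a sample payload (no request is sent):
sample = '{"results": [{"date": "2024-03-01", "price": "101.5"}]}'
print(build_url("ACME"))       # https://api.example.com/v1/prices?symbol=ACME&limit=10
print(parse_response(sample))  # [('2024-03-01', 101.5)]
```

Because the API already returns structured JSON, parsing is a few lines, which illustrates why APIs are usually more reliable than scraping rendered pages.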

Domain-Specific Considerations for AI Training Data

Identifying Critical Features for Your Domain

When scraping data for a specific domain, the focus should be on relevant features. For example:

- In healthcare, patient demographics and treatment outcomes might be critical.

- For finance, market trends, stock prices, or transaction histories are key.

By understanding what drives decision-making in your domain, you can prioritize the most impactful data during scraping.

Handling Specialized Terminology or Formats

Domains like law or medicine use complex jargon and unique formats. Consider:

- Using domain-specific parsers to extract key data accurately.

- Incorporating natural language processing (NLP) techniques for semantic understanding.

Real-World Examples

- Retail: Scraping e-commerce sites for pricing trends or customer sentiment.

- Healthcare: Mining research papers for clinical insights.

- Finance: Gathering stock data from trading platforms for predictive models.

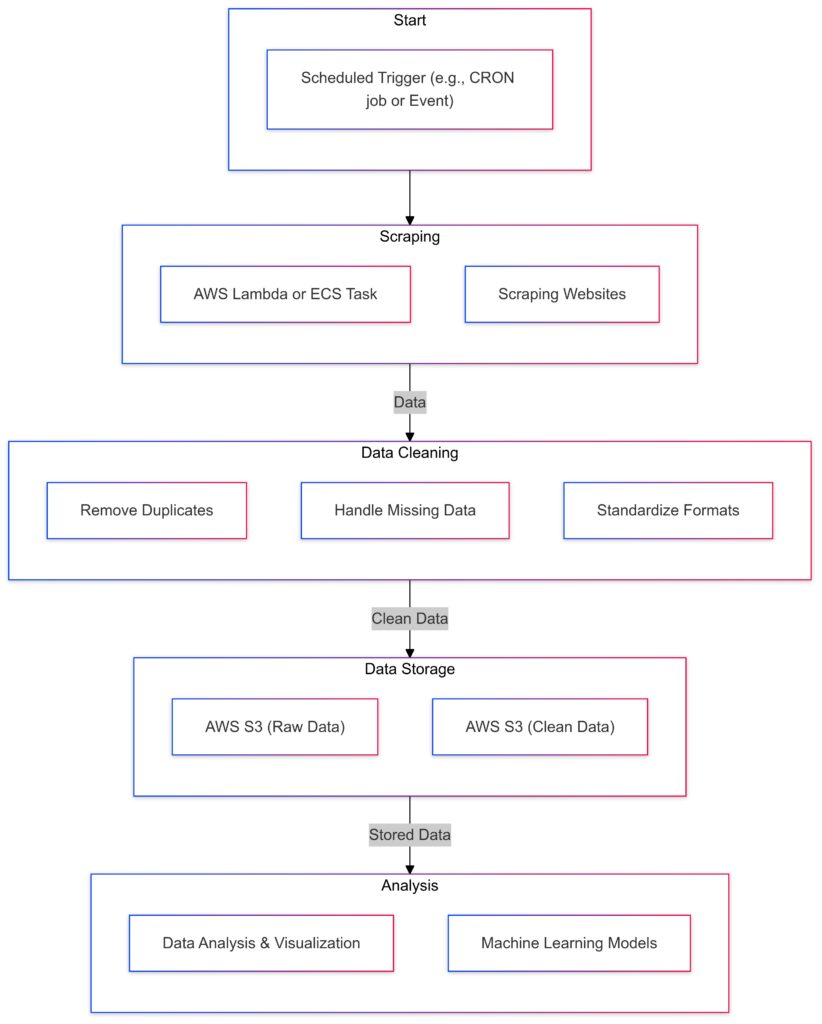

Automation and Scalability in Web Scraping

Building Automated Pipelines

Manually scraping data is inefficient for large-scale AI projects. Automation ensures consistency and scalability:

- Combine scraping tools like Scrapy with schedulers (e.g., cron jobs).

- Use cloud platforms like AWS Lambda for trigger-based scraping tasks.

Cloud-Based Scraping Solutions

Platforms like Google Cloud Functions or Azure Logic Apps can run complex scraping tasks in the cloud without you managing servers. Benefits include:

- Faster processing through parallel execution.

- Scalable resources for large datasets.

Managing Storage and Compute Resources

Massive datasets need proper handling. Consider:

- Databases: Use relational databases (MySQL, PostgreSQL) for structured records, or NoSQL options (MongoDB) for semi-structured documents.

- Data lakes: Tools like Amazon S3 store vast amounts of raw data, ready for processing.
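To illustrate the relational option, here is a sketch using SQLite (bundled with Python) as a stand-in for MySQL or PostgreSQL. Keying rows on the page URL and upserting means that re-scraping a page updates its row instead of creating a duplicate. The table layout is hypothetical; the ON CONFLICT upsert syntax requires SQLite 3.24 or later and also works in PostgreSQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # use a file path for a persistent database
conn.execute("""
    CREATE TABLE IF NOT EXISTS products (
        url        TEXT PRIMARY KEY,  -- natural key prevents duplicate rows
        name       TEXT NOT NULL,
        price      REAL,
        scraped_at TEXT NOT NULL
    )
""")

rows = [
    ("https://example.com/p/1", "Kettle", 24.99, "2024-03-01T10:00:00"),
    ("https://example.com/p/2", "Toaster", 39.50, "2024-03-01T10:00:05"),
    ("https://example.com/p/1", "Kettle", 22.99, "2024-03-02T10:00:00"),  # re-scrape
]

# Upsert: a URL that already exists gets its price and timestamp updated.
conn.executemany("""
    INSERT INTO products (url, name, price, scraped_at) VALUES (?, ?, ?, ?)
    ON CONFLICT(url) DO UPDATE SET price = excluded.price,
                                   scraped_at = excluded.scraped_at
""", rows)
conn.commit()

print(conn.execute("SELECT name, price FROM products ORDER BY url").fetchall())
# [('Kettle', 22.99), ('Toaster', 39.5)]
```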

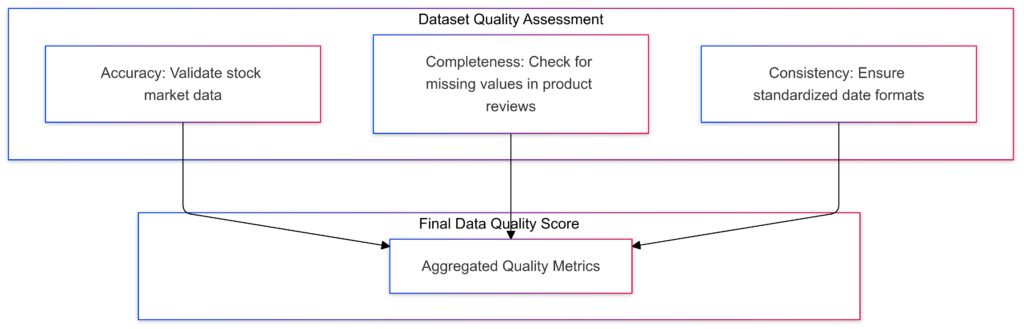

Ensuring Data Quality for AI Applications

Metrics to Assess Data Quality

High-quality data ensures effective AI training. Use these metrics:

- Completeness: Are all required fields populated?

- Accuracy: Is the data free from errors?

- Consistency: Are formats uniform across entries?
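Completeness and consistency can be computed directly from the data. Accuracy usually needs a trusted reference (for example, a manually checked sample), so it is not shown here. A minimal sketch over hypothetical product records, assuming ISO 8601 (YYYY-MM-DD) is the expected date format:

```python
import re

records = [
    {"name": "Kettle", "price": 24.99, "date": "2024-03-01"},
    {"name": "Toaster", "price": None, "date": "2024-03-02"},
    {"name": "Blender", "price": 59.0, "date": "03/04/2024"},
    {"name": "", "price": 12.5, "date": "2024-03-05"},
]
REQUIRED = ["name", "price", "date"]
ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")

def completeness(rows, fields):
    """Share of rows in which every required field is present and non-empty."""
    full = sum(all(r.get(f) not in (None, "") for f in fields) for r in rows)
    return full / len(rows)

def consistency(rows, field, pattern):
    """Share of non-empty values in `field` that match the expected format."""
    values = [r[field] for r in rows if r.get(field)]
    return sum(bool(pattern.match(v)) for v in values) / len(values)

print(f"completeness: {completeness(records, REQUIRED):.2f}")            # 0.50
print(f"date consistency: {consistency(records, 'date', ISO_DATE):.2f}")  # 0.75
```

Tracking these ratios per scraping run turns "is the data good?" into numbers you can alert on.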

Regular Audits and Validations

Periodically assess your scraped datasets for:

- Outliers or anomalies.

- Drift in data trends over time.

Automating this process with Python scripts or tools like Great Expectations can save time.
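For the outlier check, one simple and widely used rule is Tukey’s fences: flag values more than 1.5 interquartile ranges (IQR) beyond the first or third quartile. A sketch using statistics.quantiles (Python 3.8+) on hypothetical price data; the 1.5 multiplier is the conventional default, not a universal threshold.

```python
from statistics import quantiles

prices = [24.99, 25.50, 23.75, 26.10, 24.20, 249.90, 25.05]  # 249.90 looks like a typo

def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

print(iqr_outliers(prices))  # [249.9]
```

Flagged values are candidates for review, not automatic deletions: in some domains (e.g. finance) extreme values are real and important.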

Using Domain Experts for Labeling

For supervised learning, scraped data often requires labels. Collaborate with domain specialists to ensure accurate categorization and reduce bias.

Navigating Challenges in Web Scraping

Legal Takedown Requests and Responses

Websites may issue takedown notices if they suspect improper scraping. To minimize risks:

- Scrape publicly available data and respect terms of service.

- Maintain documentation of your scraping activities for compliance purposes.

Handling Dynamic Changes in Website Structures

Websites often alter their layouts or URLs, breaking scraping scripts. Solutions include:

- Building resilient scrapers that target stable attributes with CSS selectors or XPath, with fallback selectors for when the preferred one stops matching.

- Automating updates to your scraper with AI-based parsers.
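One way to make a scraper more resilient is to try several selectors in order of preference and report when none of them match. This sketch uses the XPath subset in Python’s xml.etree.ElementTree, which only accepts well-formed markup; for real-world HTML you would apply the same pattern with a lenient parser such as lxml or Beautiful Soup. The selectors and snippets are hypothetical.

```python
import xml.etree.ElementTree as ET

# Ordered from most to least preferred. Semantic attributes such as itemprop
# tend to survive redesigns better than purely visual class names.
PRICE_PATHS = [
    ".//*[@itemprop='price']",
    ".//span[@class='price']",
    ".//div[@class='price']",
]

def extract_price(markup):
    root = ET.fromstring(markup)
    for path in PRICE_PATHS:
        node = root.find(path)
        if node is not None and node.text:
            return node.text.strip()
    return None  # nothing matched: a signal that the layout changed again

old_layout = "<div><div class='price'>24.99</div></div>"
new_layout = "<div><span class='price'> 22.99 </span></div>"
print(extract_price(old_layout), extract_price(new_layout))  # 24.99 22.99
```

Returning None instead of raising lets a monitoring job count failed extractions and alert you before bad data piles up.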

Troubleshooting Common Scraping Errors

Common issues like timeout errors or IP bans can derail your project. Fix these by:

- Increasing request intervals to avoid server overload.

- Employing rotating proxies or VPNs for anonymity.
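When a request times out or the connection drops, retrying immediately tends to make things worse. A common remedy is exponential backoff with random jitter, sketched below. The fetch argument stands in for your HTTP call, and the caught exceptions are Python built-ins, so adapt them to your client (requests, for example, raises its own Timeout and ConnectionError classes). If a server answers with HTTP 429 and a Retry-After header, waiting that long is the politer choice.

```python
import random
import time

def fetch_with_backoff(fetch, url, max_retries=5, base_delay=1.0, max_delay=60.0):
    """Call fetch(url), retrying on failure with exponential backoff and jitter.

    `fetch` is a placeholder for any function that returns a response or
    raises on failure (e.g. a thin wrapper around your HTTP client).
    """
    for attempt in range(max_retries + 1):
        try:
            return fetch(url)
        except (TimeoutError, ConnectionError):
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # The window doubles each attempt (1s, 2s, 4s, ...) up to max_delay.
            # "Full jitter" sleeps a random time inside that window so many
            # workers do not retry in lockstep.
            delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
            time.sleep(delay)
```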

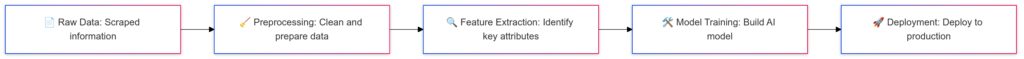

Integration into AI Pipelines

Transforming Scraped Data for AI Model Consumption

Scraped data needs preprocessing to fit into AI pipelines. Essential steps include:

- Normalizing text, numbers, or dates.

- Converting raw text into tokens or embeddings for NLP tasks.
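A minimal normalization and tokenization pass using only the standard library. The regular-expression tokenizer is a simplification for illustration; transformer models such as BERT expect text split by their own subword tokenizer, so use the tokenizer that ships with the model you fine-tune.

```python
import html
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")
TOKEN_RE = re.compile(r"\w+(?:'\w+)?")  # words, keeping simple contractions like it's

def normalize_text(raw):
    text = TAG_RE.sub(" ", raw)                 # drop leftover HTML tags
    text = html.unescape(text)                  # &amp; -> &, &#39; -> '
    text = unicodedata.normalize("NFKC", text)  # fold compatibility chars (NBSP -> space)
    text = re.sub(r"\s+", " ", text).strip()    # collapse runs of whitespace
    return text.lower()

def tokenize(text):
    return TOKEN_RE.findall(text)

raw = "<p>Great&nbsp;kettle &amp; it&#39;s <b>FAST</b>!</p>"
clean = normalize_text(raw)
print(clean)            # great kettle & it's fast !
print(tokenize(clean))  # ['great', 'kettle', "it's", 'fast']
```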

Building Repeatable Workflows

Consistency in data handling is key for scalable AI applications:

- Use tools like Apache Airflow for managing scraping workflows.

- Document and version-control each step for reproducibility.

Leveraging Data Augmentation Techniques

To expand your dataset, apply augmentation methods such as:

- Text synthesis for NLP projects.

- Image cropping or rotations for computer vision datasets.

Optimizing Scraping for Specific AI Models

Tailoring Data for Machine Learning and Deep Learning

Different AI models require different types of data preparation:

- Supervised learning: Focus on labeled datasets. Ensure clear target variables.

- Unsupervised learning: Gather diverse and rich data for clustering or dimensionality reduction.

- Deep learning: Ensure sufficient volume and variety for training neural networks.

Addressing Model-Specific Needs

For specialized AI models like transformers or GANs, scraped data might need further processing:

- NLP tasks: Use tokenization, stemming, or lemmatization.

- Vision tasks: Preprocess images to match expected resolutions or formats.

Ethical AI: Ensuring Fairness and Bias Mitigation

Identifying Bias in Scraped Data

Data scraped from specific domains may contain systemic biases:

- Reviews might reflect cultural or regional preferences.

- Historical data can perpetuate stereotypes in predictions.

Techniques to Reduce Bias

- Use diverse data sources to balance representation.

- Regularly audit your dataset for imbalances or skewed patterns.
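A first-pass representation audit can be as simple as measuring how much of the dataset each group contributes. In this sketch the region labels and the 10% threshold are hypothetical; what counts as under-represented depends on your domain and use case.

```python
from collections import Counter

def representation_report(labels, min_share=0.10):
    """Return each group's share of the data and the groups below `min_share`."""
    counts = Counter(labels)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    underrepresented = sorted(g for g, s in shares.items() if s < min_share)
    return shares, underrepresented

# Hypothetical region tag attached to each scraped review.
regions = ["US"] * 70 + ["UK"] * 20 + ["IN"] * 6 + ["BR"] * 4
shares, low = representation_report(regions)
print(shares)  # {'US': 0.7, 'UK': 0.2, 'IN': 0.06, 'BR': 0.04}
print(low)     # ['BR', 'IN']
```

Groups flagged here are candidates for targeted collection from additional sources, in line with the advice to diversify data sources.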

Promoting Ethical AI Practices

Use fairness toolkits such as IBM’s open-source AI Fairness 360 to measure and mitigate bias in your data and models.

Leveraging Pretrained Models and Transfer Learning

Benefits of Using Pretrained Models

Pretrained models can speed up AI development by leveraging knowledge from existing datasets:

- BERT or GPT models for NLP tasks.

- ResNet or VGG models for computer vision.

Customizing Pretrained Models with Scraped Data

- Use transfer learning to fine-tune models on domain-specific data.

- Ensure compatibility by preprocessing your dataset to match the model’s input requirements.

Monitoring and Maintaining Your Scraping System

Regular Updates to Scraping Scripts

Websites change constantly, so scrapers must adapt:

- Use automated testing to identify script failures early.

- Schedule maintenance cycles to review and update scrapers.

Monitoring Data Collection in Real Time

Integrate monitoring tools such as Elasticsearch with Kibana dashboards to track:

- Volume and quality of scraped data.

- Errors or anomalies in the scraping process.

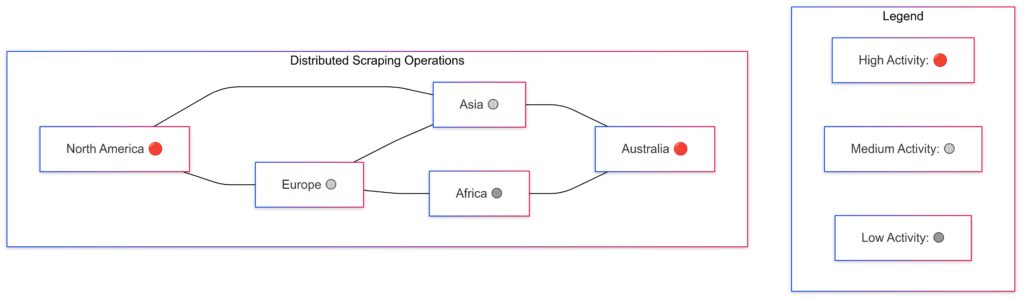

Scaling Scraping Operations for Enterprise AI

Building Distributed Scraping Architectures

For enterprise-scale AI projects, distribute scraping across multiple servers:

- Use frameworks like Scrapy Cluster for horizontal scaling.

- Employ distributed databases (e.g., Cassandra) for seamless data storage.

Balancing Cost and Performance

Cloud services like AWS, GCP, or Azure offer scalable solutions but can be costly. Use:

- Spot instances for cost-effective computing.

- Caching systems to reduce redundant scraping efforts.
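Caching avoids re-downloading pages that haven’t had time to change. Below is a minimal time-to-live (TTL) cache sketch; fetch is a placeholder for your download function, and the one-hour default TTL is an arbitrary assumption. Scrapy also offers a built-in HTTP cache (enabled with its HTTPCACHE_ENABLED setting), and HTTP conditional requests (ETag with If-None-Match) can cut redundant transfers further when servers support them.

```python
import time

class TTLCache:
    """Keeps fetched pages for `ttl` seconds so repeat requests skip the network."""

    def __init__(self, fetch, ttl=3600.0, clock=time.monotonic):
        self.fetch = fetch   # placeholder: any function url -> content
        self.ttl = ttl
        self.clock = clock   # injectable clock makes the cache easy to test
        self._store = {}     # url -> (fetched_at, content)

    def get(self, url):
        now = self.clock()
        hit = self._store.get(url)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]    # still fresh: no request sent
        content = self.fetch(url)
        self._store[url] = (now, content)
        return content
```

Usage: wrap your downloader once, e.g. cache = TTLCache(download_page, ttl=6 * 3600), and call cache.get(url) everywhere you would have called the downloader directly (download_page is a hypothetical name).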

Enterprise Use Cases

- E-commerce giants: Monitor competitors’ pricing at scale.

- Healthcare providers: Scrape research papers for the latest breakthroughs.

- Financial institutions: Track live market trends and global news.

Final Summary: Scraping Data for AI in Specific Domains

Web scraping is a powerful tool for collecting domain-specific data to train AI models. By understanding the basics of scraping, selecting the right tools, and following legal and ethical guidelines, you can build a robust foundation for data collection. Structuring and cleaning data ensures its usability, while automation and scalability make large-scale operations more efficient.

Domain-specific considerations, such as handling specialized formats or terminology, allow your AI to excel in its niche. Meanwhile, monitoring data quality and addressing bias promote fairness and reliability in your AI applications. Leveraging pretrained models and scaling operations for enterprise needs further expands the potential of web scraping for cutting-edge AI projects.

By integrating these strategies into your pipeline, you not only streamline the process but also ensure high-quality data—the lifeblood of effective AI. So, whether you’re building a chatbot, forecasting financial markets, or revolutionizing healthcare, this guide equips you to scrape smarter, train better, and achieve remarkable results.

FAQs

How can I scrape JavaScript-heavy websites?

JavaScript-heavy websites require tools like Selenium or Puppeteer, which simulate real user interactions and render dynamic content.

- Example: A flight booking site updates prices dynamically. Selenium can load the page, interact with dropdowns, and capture the live prices.

Another alternative is to use APIs, if available, as they often provide structured access to such data.

Is scraping legal and ethical?

Web scraping is generally lawful when it complies with a website’s Terms of Service and doesn’t infringe copyrights or privacy laws, although the details vary by jurisdiction. Always respect robots.txt files and avoid scraping sensitive or personal data.

- Example: Scraping public news articles for sentiment analysis is generally acceptable. However, scraping private social media profiles without permission is unethical and may be illegal.

To stay compliant, explore open datasets or seek explicit permissions from website owners.

What are the common challenges in web scraping?

Scraping can encounter technical and legal roadblocks:

- Technical: Websites may use CAPTCHAs, rate limiting, or change their layouts frequently.

- Solution: Rotate IPs using proxies or integrate CAPTCHA solvers.

- Legal: Some sites may issue takedown requests or block IPs if they suspect misuse.

- Solution: Focus on publicly available data and document your practices for accountability.

How can I structure and clean scraped data?

Scraped data is often messy. Tools like Pandas or OpenRefine help organize and clean it.

- Example: After scraping product reviews, you might need to remove duplicates, fix date formats, and standardize star ratings.

For structured output, save data as JSON or CSV files, ensuring easy integration into machine learning pipelines.

What’s the difference between web scraping and using APIs?

- Web scraping extracts data directly from web pages, while APIs offer a structured and sanctioned way to access the same data.

- Example: Scraping a stock market website may yield unstructured data with formatting issues, while a stock market API delivers clean, real-time data in JSON or XML.

Whenever possible, prefer APIs for reliability and compliance.

Can I automate scraping for recurring tasks?

Yes, automation is key for recurring tasks. Use tools like Scrapy to set up pipelines that periodically scrape and store data.

- Example: If you’re monitoring product prices weekly, Scrapy can automate the process, saving results in a database or file for analysis.

Pair this with a scheduler like cron or Airflow to run tasks at regular intervals.

What should I consider for domain-specific scraping?

Focus on the nuances of your domain:

- Healthcare: Extract medical terminology and case studies. Use NLP tools to process clinical data.

- Finance: Collect time-sensitive data like stock prices or earnings reports. Automate real-time updates.

- E-commerce: Capture product details, user reviews, and trends for competitive analysis.

Tailoring your strategy ensures you collect the most relevant data for your AI models.

How do I ensure data quality for AI projects?

Data quality is critical for accurate AI predictions. Always check:

- Completeness: No missing values.

- Consistency: Uniform formats across entries.

- Accuracy: Cross-check scraped data with original sources.

- Example: While scraping weather data, ensure temperature units (°C or °F) are consistent and correct.

Regular validation and domain expert reviews can help maintain high standards.
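A small sketch of the unit check from the weather example: convert every reading to Celsius and raise an error on unknown units rather than passing them through silently. The readings are hypothetical.

```python
def to_celsius(value, unit):
    """Convert a temperature reading to degrees Celsius."""
    unit = unit.strip().upper().lstrip("°")  # accept "°F", "F", " f "
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32) * 5 / 9
    raise ValueError(f"unknown temperature unit: {unit!r}")

readings = [(21.0, "°C"), (69.8, "°F"), (-4.0, "F")]
print([round(to_celsius(v, u), 1) for v, u in readings])  # [21.0, 21.0, -20.0]
```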

How can I scale web scraping for enterprise-level projects?

Scaling requires robust infrastructure:

- Use distributed scraping frameworks like Scrapy Cluster.

- Store large datasets in cloud storage solutions like AWS S3 or Google Cloud Storage.

- Example: For scraping millions of product listings across global e-commerce sites, distribute tasks across multiple servers using proxies to avoid IP bans.

This ensures faster, uninterrupted operations suitable for large-scale AI training.

What are the best practices for avoiding IP bans during scraping?

To avoid IP bans, adopt the following strategies:

- Use rotating proxies to distribute requests across multiple IPs.

- Introduce random delays between requests to mimic human browsing behavior.

- Respect the website’s rate limits and avoid overwhelming their servers.

- Example: If scraping a job board, send requests at random intervals and rotate IPs every 10 requests to stay under the radar.

Tools like Bright Data or ScraperAPI can simplify proxy management.

How can I scrape multiple pages of a website efficiently?

Scraping multi-page websites requires handling pagination. Look for next page buttons, URL patterns, or API endpoints.

- Example: On an e-commerce site, the URL for page 2 might be example.com/products?page=2. Use loops to increment the page number dynamically.

Scrapy can automate this by following pagination links for you; with Beautiful Soup, you locate the next-page link yourself and request it in a loop.
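A sketch of the page-number loop described above. fetch_items is a placeholder for code that downloads one page and returns the items parsed from it. The loop stops at the first empty page or after max_pages, and pauses between requests to keep the load on the server low. The ?page= parameter mirrors the example URL and will differ between sites.

```python
import time
from urllib.parse import urlencode

def scrape_all_pages(base_url, fetch_items, max_pages=100, delay=1.0):
    """Collect items from base_url?page=1, ?page=2, ... until a page is empty."""
    items = []
    for page in range(1, max_pages + 1):  # hard cap guards against endless pagination
        url = f"{base_url}?{urlencode({'page': page})}"
        page_items = fetch_items(url)
        if not page_items:
            break  # an empty page usually means we have passed the last one
        items.extend(page_items)
        time.sleep(delay)  # pause between requests to avoid overloading the site
    return items
```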

Can web scraping handle real-time data collection?

Yes, but it requires efficient tools and strategies:

- Use WebSockets or long polling to fetch live updates.

- Automate frequent scraping with tools like Selenium or Puppeteer for dynamic content.

- Example: For monitoring stock prices, set up a script that collects data every minute and stores it in a database for analysis.

Real-time scraping works best when APIs are unavailable, but always consider the website’s server capacity to avoid disruptions.

What are some alternatives to web scraping?

If web scraping isn’t feasible, consider these options:

- APIs: Many platforms, like Twitter or OpenWeather, provide APIs with structured access to data.

- Data marketplaces: Platforms like Kaggle or AWS Data Exchange offer pre-collected datasets for purchase or download.

- Crowdsourcing: Use platforms like Mechanical Turk to label or collect data.

- Example: Instead of scraping social media for sentiment analysis, use Twitter’s API to gather tweets directly with hashtags or keywords.

What should I do if a website changes its structure?

Dynamic websites may frequently update their layouts, breaking your scraping scripts. To adapt:

- Use CSS selectors or XPath to identify elements dynamically.

- Employ AI-based extraction services like Diffbot, which are designed to adapt to layout changes.

- Regularly update your scraper by running test scripts and debugging changes.

- Example: If a retailer moves the product price element from a <div> to a <span>, update your scraper to reflect the new structure.

How can I scrape websites protected by CAPTCHAs?

CAPTCHAs are designed to block automated access, but they can be handled with:

- CAPTCHA-solving services like 2Captcha or DeathByCaptcha.

- Human-in-the-loop systems, where manual intervention solves CAPTCHAs for high-value scraping tasks.

- Example: When scraping a ticket booking website, integrate a CAPTCHA solver service to bypass login restrictions.

Alternatively, consider negotiating API access with the website owner for hassle-free data collection.

What are some common pitfalls to avoid in web scraping?

Common mistakes include:

- Ignoring legal and ethical guidelines: Scraping without reviewing a website’s terms of service can lead to legal action.

- Overloading a website’s server: Sending too many requests in a short time might trigger IP bans or server slowdowns.

- Failing to preprocess data: Raw data is often messy; skipping preprocessing leads to poor AI model performance.

- Example: Scraping a blog for content without normalizing the text (removing HTML tags or special characters) can create inconsistencies in sentiment analysis models.

How do I integrate scraped data into AI models?

Integrating scraped data into AI pipelines involves preprocessing and structuring the data.

- Example: For training a chatbot, clean scraped customer service chat logs by removing irrelevant information like timestamps or agent IDs.

- Convert the cleaned data into formats like JSON or CSV. Tools like Pandas help streamline these tasks.

- Feed structured data into your AI model, ensuring alignment with input expectations.

What tools help with large-scale data storage for scraped content?

For storing scraped data:

- Databases: Use relational databases like PostgreSQL or NoSQL databases like MongoDB for structured and semi-structured data.

- Cloud Storage: Solutions like Google Cloud Storage or Amazon S3 are ideal for large datasets.

- Data Lakes: Keep raw data in object storage and manage it with platforms like Delta Lake until you’re ready to analyze it.

- Example: When scraping millions of product reviews, store them in MongoDB for fast retrieval and analysis.

How can I anonymize scraping activity to protect my identity?

To reduce the chance of detection and keep your identity private, anonymize your scraping activities:

- Use proxy servers to hide your IP address.

- Rotate user-agent headers to mimic different devices or browsers.

- Example: For scraping job listings, rotate between desktop and mobile user agents to mimic diverse traffic.

Tools like Tor or VPNs can further enhance anonymity.

Can I use scraped data for commercial purposes?

Using scraped data commercially depends on:

- Copyright restrictions: Ensure the data isn’t protected under copyright law.

- Terms of Service compliance: Review the website’s policies to avoid breaches.

- Example: Scraping product prices for personal analysis is usually acceptable, but reselling that data might violate terms of service.

For commercial use, seek permissions or rely on publicly available datasets to avoid legal issues.

Resources: Tools, Libraries, and Guides for Web Scraping

Here’s a curated list of resources to help you get started with web scraping for AI projects.

Web Scraping Libraries and Frameworks

- Beautiful Soup: A beginner-friendly Python library for parsing HTML and XML documents. Ideal for small- to medium-scale scraping tasks.

- Scrapy: A powerful Python framework for large-scale scraping. It supports asynchronous scraping, making it faster and more efficient.

- Selenium: A browser automation tool perfect for scraping JavaScript-heavy websites by simulating user interactions.

- Puppeteer: A Node.js library for controlling Chrome or Chromium, excellent for rendering dynamic content before scraping.

- Playwright: A versatile alternative to Puppeteer, supporting multiple browsers for scraping and testing dynamic websites.

No-Code and Low-Code Tools

- Octoparse: A no-code tool for visual data scraping. Best for non-programmers who want quick results.

- ParseHub: A cloud-based tool offering easy drag-and-drop functionality for scraping structured data from websites.

- DataMiner: A browser extension that enables simple, visual scraping directly from your browser window.

Cloud-Based Scraping Platforms

- Bright Data: Formerly Luminati, this platform offers proxy services and scraping tools for enterprise-scale projects.

- ScraperAPI: A proxy-based scraping service that simplifies handling CAPTCHAs and rotating IPs.

- Diffbot: An AI-powered tool for extracting data from web pages without traditional scraping scripts.

Data Cleaning and Preprocessing Tools

- Pandas: A Python library for data manipulation and cleaning, widely used for processing scraped data.

- OpenRefine: A free tool for cleaning and transforming raw data into structured formats.

- Trifacta: A cloud-based platform for automating dataset cleaning, especially in large-scale operations.

Educational Guides and Tutorials

- Web Scraping with Python (Real Python): Comprehensive tutorials covering libraries like Beautiful Soup, Scrapy, and Selenium.

- The Scrapy Documentation: An official guide for understanding and mastering Scrapy’s features.

- DataCamp Web Scraping Guide: Beginner tutorials focusing on Beautiful Soup and Pandas integration.

- Kaggle Datasets and Tutorials: Explore datasets and user-generated guides on scraping, cleaning, and using data for machine learning.

Proxies and Anonymization Tools

- Tor Project: Browse and scrape anonymously using the Tor network.

- ProxyMesh: A rotating proxy service that helps you scrape without triggering IP bans.

- 2Captcha: A service for bypassing CAPTCHAs during scraping.

API Documentation

- Twitter API: Access public and historical tweets for NLP or sentiment analysis projects.

- Google Maps API: A structured way to extract geospatial data.

- OpenWeatherMap API: Fetch weather data for time-series analysis or predictions.

Books and Publications

- Web Scraping with Python (by Ryan Mitchell): A practical book covering various libraries and techniques, including data cleaning and ethical scraping practices.

- Data Science Projects with Python (by Stephen Klosterman): Includes hands-on projects integrating web scraping and machine learning workflows.

- Mining the Social Web (by Matthew A. Russell): Focuses on scraping data from social media platforms for analytics and AI applications.

Online Communities and Forums

- Reddit (r/webscraping): A community where users share tools, scripts, and advice for solving scraping challenges.

- Stack Overflow (Web Scraping): A Q&A platform with solutions to common web scraping issues.

- Kaggle Forums: Join discussions on scraping, data preprocessing, and AI integrations.