What Is Commonsense Reasoning in AI?

The Foundation of Human-Like Understanding

Commonsense reasoning refers to the ability to draw logical inferences based on general world knowledge. For humans, it’s second nature to assume water flows downhill or that fire is hot. But for AI systems, these seemingly obvious facts aren’t built in; they must be learned from data or supplied explicitly.

This ability allows us to navigate the world with ease, understanding contexts and filling gaps in information. AI, on the other hand, processes explicit rules and data. The gap between these paradigms is a core challenge in developing human-like intelligence.

Why AI Struggles with Commonsense

Unlike humans, AI has no lived experience to draw on. Its “knowledge” comes from training data, which can be incomplete or biased. Even the most advanced language models, such as the GPT series, can struggle with implicit meaning. For instance, an AI might not grasp that “breaking a promise” is a social act, not a physical one.

This gap highlights the need for systems capable of reasoning beyond the data they’ve seen, something current architectures often fail to achieve.

The Role of Context in Commonsense Reasoning

Why Context Matters

Context is king when it comes to reasoning. Humans interpret the same word differently depending on the situation. For example, “cold” can mean a low temperature, a lack of emotion, or a common viral illness. AI systems often miss this nuance.
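A minimal way to see context at work is a simplified Lesk-style word sense disambiguator. Each sense of “cold” gets a small set of cue words, and the sense whose cues overlap most with the surrounding sentence wins. The sense labels and cue lists below are hypothetical and hand-written for illustration. Modern systems learn contextual representations instead, but the principle is the same: neighboring words select the meaning.

```python
import re
from typing import Optional, Set

# Hypothetical cue words for three senses of "cold". A real system would derive
# sense information from a lexical resource or learn it from data.
SENSES = {
    "low temperature": {"weather", "winter", "ice", "freezing", "outside", "water"},
    "unemotional": {"stare", "tone", "voice", "greeting", "distant", "unfriendly"},
    "illness": {"caught", "sneezing", "fever", "sick", "cough", "medicine"},
}


def tokenize(sentence: str) -> Set[str]:
    """Lowercase the sentence and return its set of alphabetic word tokens."""
    return set(re.findall(r"[a-z]+", sentence.lower()))


def disambiguate(sentence: str) -> Optional[str]:
    """Return the sense whose cue words overlap most with the sentence.

    Returns None when no cue word appears, i.e. the context is missing.
    """
    tokens = tokenize(sentence)
    scores = {sense: len(cues & tokens) for sense, cues in SENSES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


print(disambiguate("She gave me a cold stare and a distant greeting."))  # unemotional
print(disambiguate("I caught a cold and have been sneezing all week."))  # illness
print(disambiguate("It is cold."))                                       # None
```

When no cue word appears, the function returns None. This mirrors the missing-context failure mode: the sentence alone does not say which meaning is intended.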

Examples of AI Missteps

- Misunderstanding humor or sarcasm.

- Taking figurative language literally.

- Failing to account for cultural differences in norms or idioms.

These missteps aren’t just amusing—they can undermine trust in AI systems, especially in applications like healthcare or customer service.

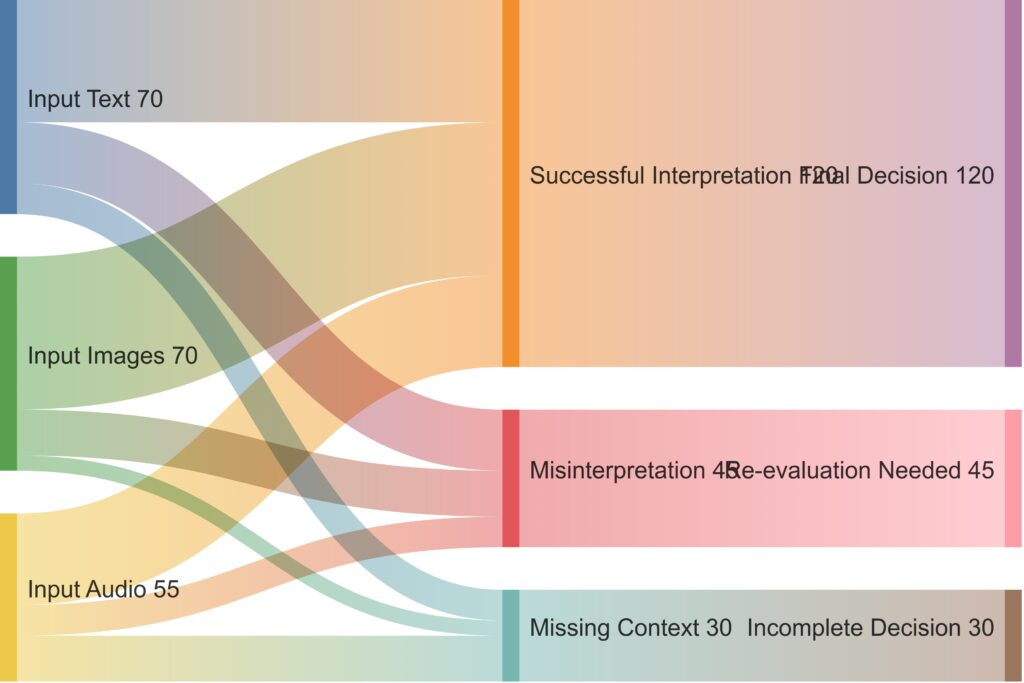

Outcomes:

- Successful Interpretation: Correctly understands the context and leads to the final decision.

- Misinterpretation: Errors like misunderstanding sarcasm or figurative speech, requiring re-evaluation.

- Missing Context: Incomplete understanding leading to partial or incorrect decisions.

The flowchart above shows how context affects an AI system’s decision-making. It highlights misinterpretation and missing context as the areas most in need of improvement.

Bridging the Context Gap

To handle context effectively, AI must learn from diverse, high-quality data. Techniques such as multi-modal learning (integrating text, images, and audio) help improve contextual understanding. For example, an AI system could use an accompanying image to recognize that “hot plate” refers to an electric cooking appliance rather than a plate of food that happens to be hot.
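One simple way to combine modalities is late fusion. Each modality scores the candidate interpretations on its own, and a weighted average of those scores makes the final call. The sketch below makes two assumptions:

- The scores are hypothetical; in practice they would come from a text model and an image classifier.
- The two modalities are weighted equally.

It shows how visual evidence can settle a reading that the text leaves ambiguous.

```python
from typing import Dict

Scores = Dict[str, float]


def late_fusion(text_scores: Scores, image_scores: Scores, text_weight: float = 0.5) -> Scores:
    """Combine two per-modality probability distributions by weighted average.

    An interpretation missing from one modality counts as probability 0 there.
    """
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be between 0 and 1")
    labels = set(text_scores) | set(image_scores)
    return {
        label: text_weight * text_scores.get(label, 0.0)
        + (1.0 - text_weight) * image_scores.get(label, 0.0)
        for label in labels
    }


# Hypothetical text-model output: "Careful, that's a hot plate" is ambiguous on its own.
text_scores = {"cooking appliance": 0.5, "plate of hot food": 0.5}
# Hypothetical image-classifier output: the photo shows an electric burner on a counter.
image_scores = {"cooking appliance": 0.9, "plate of hot food": 0.1}

fused = late_fusion(text_scores, image_scores)
best = max(fused, key=fused.get)
print(best, round(fused[best], 2))  # cooking appliance 0.7
```

Late fusion is easy to bolt onto existing single-modality models. The trade-off is that it cannot capture fine-grained interactions between modalities, which is why many multi-modal systems fuse learned features earlier in the network instead.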

Key Challenges in Developing Commonsense AI

Data Limitations

AI relies heavily on data to “learn,” but commonsense is often absent from these datasets. Why? Because humans rarely write down the obvious. Training an AI to know that “water is wet” or that “people eat when they’re hungry” requires deliberately curated datasets.

Moreover, most training data reflects narrow, domain-specific knowledge, leaving AI ill-equipped to handle broader, open-ended reasoning tasks.

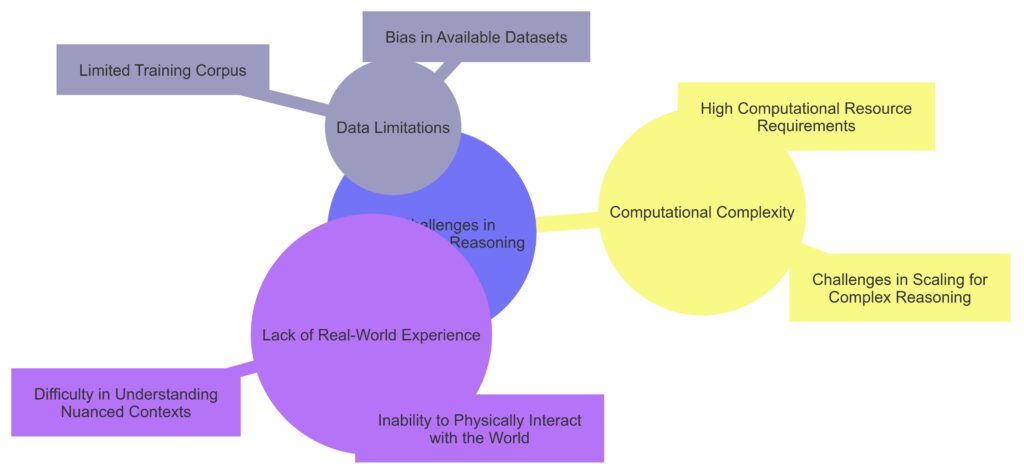

Data Limitations:

- Limited Training Corpus: Insufficient data to cover the vast scope of commonsense knowledge.

- Bias in Available Datasets: Systematic biases that affect model performance.

Computational Complexity:

- High Computational Resource Requirements: Significant resources needed for processing.

- Scalability Issues: Difficulty scaling models for more complex reasoning tasks.

Lack of Real-World Experience:

- Inability to Physically Interact with the World: Limits experiential learning.

- Difficulty in Understanding Nuanced Contexts: Struggles with subtleties and situational variance.

The diagram above groups these obstacles to effective commonsense reasoning into three categories.

Computational Complexity

Understanding the world is messy. Commonsense reasoning involves juggling probabilities, relationships, and uncertainties, which makes it computationally expensive. For example, deciding whether a cat would chase a mouse involves not just rules but situational reasoning, like hunger or environment.
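A toy calculation shows where the cost comes from. Below, the chance that a cat chases a mouse depends on three yes/no situational factors. The factor names, priors, and conditional probabilities are all invented for illustration, and the factors are assumed to be independent. Computing the overall probability exactly means summing over every combination of factors. There are 2^n combinations for n binary factors, so each new factor doubles the work.

```python
from itertools import product
from typing import Callable, Dict, Tuple

# Hypothetical prior probability that each situational factor is true.
PRIORS: Dict[str, float] = {"hungry": 0.6, "mouse_visible": 0.5, "sleepy": 0.3}


def chase_given(situation: Dict[str, bool]) -> float:
    """Hypothetical P(chase | situation) for one full assignment of the factors."""
    if not situation["mouse_visible"]:
        return 0.0
    p = 0.8 if situation["hungry"] else 0.4
    return p * (0.25 if situation["sleepy"] else 1.0)


def marginal(priors: Dict[str, float],
             conditional: Callable[[Dict[str, bool]], float]) -> Tuple[float, int]:
    """Exact P(chase) by enumerating all 2**n factor assignments (factors assumed independent)."""
    names = list(priors)
    total, visited = 0.0, 0
    for values in product([True, False], repeat=len(names)):
        situation = dict(zip(names, values))
        # Probability of this particular combination of factor values.
        weight = 1.0
        for name, value in situation.items():
            weight *= priors[name] if value else 1.0 - priors[name]
        total += weight * conditional(situation)
        visited += 1
    return total, visited


prob, visited = marginal(PRIORS, chase_given)
print(f"P(chase) = {prob:.3f} after enumerating {visited} situations")
# P(chase) = 0.248 after enumerating 8 situations
```

Adding a fourth factor, such as whether it is raining, doubles the enumeration to 16 situations. Realistic scenes involve far more factors, which is why practical systems rely on approximations rather than exhaustive enumeration.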

Lack of Real-World Experience

AI doesn’t “live” in the world: it lacks sensory experiences and intrinsic motivations. Humans, for example, know not to touch fire because they’ve learned from experience. AI lacks such grounding and struggles to replicate it.

Strategies for Overcoming These Obstacles

Knowledge Graphs and Databases

Knowledge graphs, like Google’s Knowledge Graph or ConceptNet, store structured relationships between entities and concepts. These tools help AI systems infer relationships more effectively, acting as a simulated form of “common knowledge.”

For example, ConceptNet could help an AI infer that “if someone is holding an umbrella, it might be raining.” However, even these tools are far from perfect: they require regular updates and manual curation.
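The umbrella example can be sketched with a tiny in-memory graph of (head, relation, tail, weight) edges. The edges, the weights, and the ProtectsAgainst relation are hypothetical and not taken from ConceptNet. ConceptNet does use weighted edges and relations such as Causes, but its actual data and API differ. Going from “holding an umbrella” to “it might be raining” reasons from an observation to a plausible explanation (abduction). The output is therefore a ranked list of guesses rather than certain conclusions.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple

# Hypothetical (head, relation, tail, weight) edges. ProtectsAgainst is an
# illustrative relation name; the edges and weights are invented for this sketch.
EDGES = [
    ("umbrella", "ProtectsAgainst", "rain", 2.0),
    ("umbrella", "ProtectsAgainst", "sun", 0.5),
    ("sunglasses", "ProtectsAgainst", "sun", 2.0),
    ("rain", "Causes", "wet ground", 1.5),
]

# Index edges by (head, relation) so each lookup is a single dictionary access.
INDEX: DefaultDict[Tuple[str, str], List[Tuple[str, float]]] = defaultdict(list)
for head, relation, tail, weight in EDGES:
    INDEX[(head, relation)].append((tail, weight))


def plausible_conditions(held_object: str) -> List[str]:
    """Abductive guess: holding something that protects against X suggests X may be happening.

    Candidates are ranked by edge weight, strongest first.
    """
    candidates = INDEX.get((held_object, "ProtectsAgainst"), [])
    return [tail for tail, _ in sorted(candidates, key=lambda edge: edge[1], reverse=True)]


def consequences(condition: str) -> List[str]:
    """Forward step: follow Causes edges from a condition to its likely effects."""
    return [tail for tail, _ in INDEX.get((condition, "Causes"), [])]


print(plausible_conditions("umbrella"))  # ['rain', 'sun']
print(consequences("rain"))              # ['wet ground']
```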

Combining Symbolic AI with Machine Learning

Blending symbolic reasoning with machine learning is gaining traction. Symbolic AI focuses on logic and rules, while machine learning thrives on patterns in data. Together, they can provide systems with a better balance of structure and flexibility.

For instance, symbolic reasoning can help an AI understand basic rules like “water extinguishes fire,” while machine learning allows it to adapt those rules to new contexts.
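Below is a minimal sketch of that division of labor, built from two parts:

- The symbolic part is an explicit rule table over abstract concepts (“water” extinguishes “fire”).
- The learned part is stood in for by a 1-nearest-neighbor classifier over character trigrams. It is trained on a few hypothetical labeled phrases and maps unfamiliar wording such as “a hose” onto a known concept.

A real hybrid system would use a trained neural model for the mapping step. The examples, rules, and similarity threshold here are illustrative assumptions.

```python
from typing import Optional, Set

# "Learned" part (stand-in): hypothetical labeled examples for a 1-nearest-neighbor classifier.
EXAMPLES = [
    ("water", "water"), ("garden hose", "water"), ("bucket of water", "water"),
    ("fire", "fire"), ("flames", "fire"), ("campfire", "fire"),
    ("ice", "ice"), ("ice cube", "ice"), ("frozen pond", "ice"),
]

# Symbolic part: explicit, human-readable rules over abstract concepts.
RULES = {("water", "fire"): "extinguishes", ("fire", "ice"): "melts"}


def trigrams(text: str) -> Set[str]:
    """Character trigrams of the space-padded, lowercased text."""
    padded = f" {text.lower()} "
    return {padded[i:i + 3] for i in range(len(padded) - 2)}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Set overlap divided by set union (0.0 when both sets are empty)."""
    return len(a & b) / len(a | b) if a | b else 0.0


def to_concept(phrase: str, threshold: float = 0.2) -> Optional[str]:
    """Map a phrase to the concept of its most similar labeled example, if similar enough."""
    query = trigrams(phrase)
    score, concept = max((jaccard(query, trigrams(text)), label) for text, label in EXAMPLES)
    return concept if score >= threshold else None


def predict_effect(agent: str, target: str) -> str:
    """Ground both phrases to concepts, then apply the symbolic rule table."""
    a, t = to_concept(agent), to_concept(target)
    if a is None or t is None:
        return "unknown concept"
    return RULES.get((a, t), "no known effect")


print(predict_effect("a hose", "blazing campfire"))  # extinguishes
print(predict_effect("campfire", "ice cube"))        # melts
print(predict_effect("xyz", "fire"))                 # unknown concept
```

Neither “a hose” nor “blazing campfire” appears in the rule table, yet the first call returns “extinguishes”. The classifier grounds each phrase to a concept, and the rule does the reasoning.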

The Promise of Multi-Modal AI

What Is Multi-Modal AI?

Multi-modal AI integrates multiple data types—text, images, video, and audio—to improve its understanding of the world. This approach mirrors human perception, where we combine visual, auditory, and contextual cues to make sense of complex scenarios.

For example, consider a video of someone slipping on ice. While text alone might describe “falling,” an AI analyzing both the video and accompanying commentary could infer the context (winter, icy ground, accidental slip).

Why Multi-Modality Enhances Commonsense

By processing different forms of data together, multi-modal systems can detect patterns that single-modality systems miss. For instance:

- Analyzing images to identify objects in context (a fork next to a plate suggests eating).

- Using audio cues to interpret emotional tone in spoken language.

This layered approach allows AI to better handle real-world complexity, reducing the chance of embarrassing misinterpretations.
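The audio-cue idea can be illustrated with a simple cross-modal check. The sketch assumes a text model and an audio model each return a sentiment score between -1 and 1; both scores are hypothetical here. When the two disagree sharply in direction, the check flags possible sarcasm and trusts the tone of voice. This is an illustrative heuristic, not a standard algorithm, and the conflict threshold is an arbitrary choice.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    label: str       # final interpretation
    polarity: float  # -1.0 (negative) to 1.0 (positive)


def fuse_sentiment(text_polarity: float, audio_polarity: float,
                   conflict_gap: float = 1.0) -> Reading:
    """Combine text and audio sentiment scores, each in [-1, 1].

    Hypothetical heuristic: if the modalities point in opposite directions and differ
    by at least `conflict_gap`, flag possible sarcasm and trust the audio tone.
    Otherwise, average the two scores.
    """
    for value in (text_polarity, audio_polarity):
        if not -1.0 <= value <= 1.0:
            raise ValueError("polarity scores must lie in [-1, 1]")
    opposite = text_polarity * audio_polarity < 0
    if opposite and abs(text_polarity - audio_polarity) >= conflict_gap:
        return Reading("possible sarcasm", audio_polarity)
    average = (text_polarity + audio_polarity) / 2
    label = "positive" if average > 0 else "negative" if average < 0 else "neutral"
    return Reading(label, average)


# "Oh great, my flight got cancelled": positive words, exasperated tone (made-up scores).
print(fuse_sentiment(0.8, -0.7))
# "Great news, I got the job!": words and tone agree.
print(fuse_sentiment(0.8, 0.9))
```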

Real-World Applications of Commonsense AI

Healthcare Decision-Making

In medicine, AI systems often assist in diagnosing or suggesting treatments. Commonsense reasoning is vital here: misinterpreting symptoms or medical records could lead to dangerous errors. A commonsense-capable AI might infer, for example, that a patient with a persistent cough and fever could need an urgent check for pneumonia rather than merely suggesting rest.

Customer Support and Virtual Assistants

For voice assistants like Siri or Alexa, commonsense reasoning helps improve user experience. Imagine asking, “What should I do if I lose my wallet?” A commonsense-aware assistant might suggest canceling credit cards or visiting a lost-and-found office, not just generic advice about wallets.

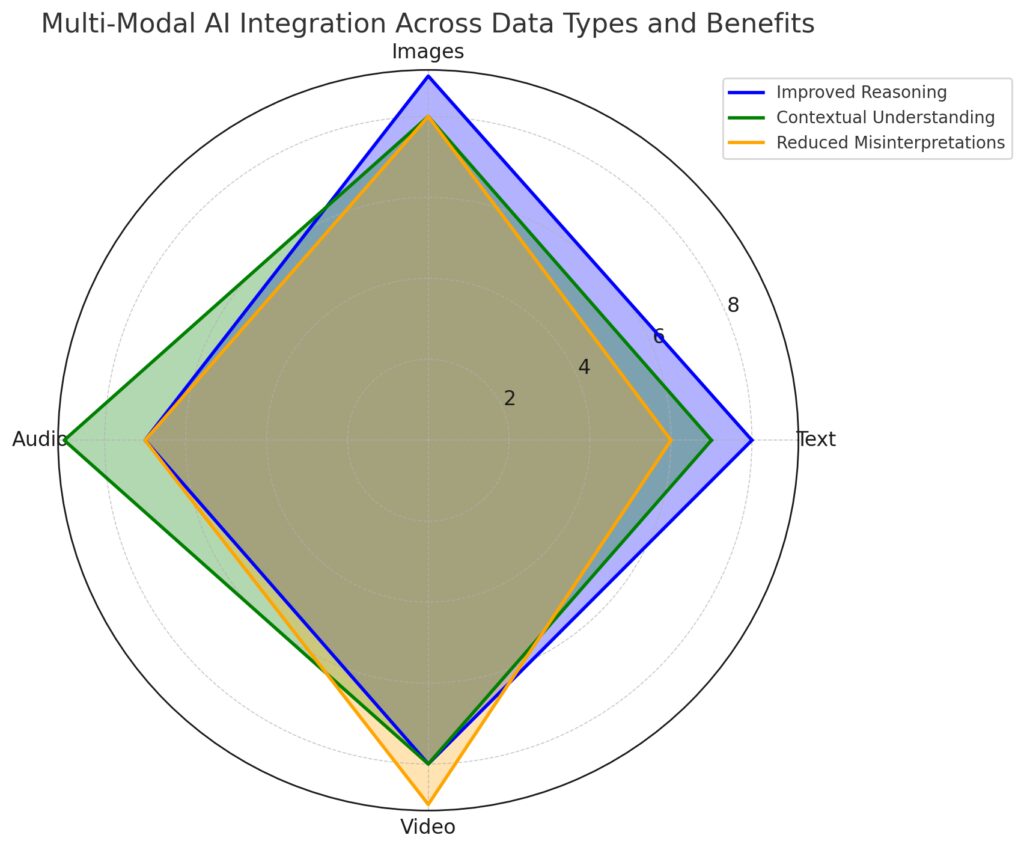

- Data Types: Text, images, audio, and video.

- Improved Reasoning: Combining complementary information across modalities strengthens reasoning.

- Contextual Understanding: Audio and video data in particular supply contextual cues that text alone lacks.

- Reduced Misinterpretations: Cross-modal cues help clarify ambiguous data, reducing errors.

The chart illustrates how multi-modal AI delivers these benefits, particularly in data-rich scenarios.

Robotics and Autonomous Systems

Robots navigating real-world environments need commonsense. For instance, a delivery robot should avoid placing a package in the rain or blocking a doorway. Without this reasoning, even advanced robots can make silly and costly errors.
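One way to encode such rules is as hard constraints that filter candidate actions before any optimization happens. The sketch below is hypothetical throughout. It assumes:

- the DropSpot fields shown;
- two constraints: keep packages dry when it is raining, and never block a doorway;
- the example distances.

It discards spots that violate a constraint and then picks the nearest remaining one.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DropSpot:
    name: str
    sheltered: bool       # protected from rain
    blocks_doorway: bool  # would a package here obstruct an entrance?
    distance_m: float     # travel distance from the robot, in meters


def choose_drop_spot(spots: List[DropSpot], raining: bool) -> Optional[DropSpot]:
    """Discard spots that violate a commonsense constraint, then return the nearest survivor."""
    allowed = [
        spot for spot in spots
        if not spot.blocks_doorway           # never obstruct a doorway
        and (spot.sheltered or not raining)  # keep the package dry in the rain
    ]
    return min(allowed, key=lambda spot: spot.distance_m, default=None)


spots = [
    DropSpot("doormat", sheltered=True, blocks_doorway=True, distance_m=1.0),
    DropSpot("open porch step", sheltered=False, blocks_doorway=False, distance_m=2.0),
    DropSpot("covered bench", sheltered=True, blocks_doorway=False, distance_m=4.5),
]
print(choose_drop_spot(spots, raining=True).name)   # covered bench
print(choose_drop_spot(spots, raining=False).name)  # open porch step
```

If every spot violates a constraint, the function returns None. That signals the robot should wait or ask for help rather than pick the least bad option.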

Education and Tutoring

AI tutors, such as those built into Duolingo or Khan Academy, are making learning more accessible. But without commonsense reasoning, they can frustrate users by misunderstanding queries or giving irrelevant explanations. Adding this capability should make responses both more accurate and more intuitive.

Ethical Challenges of Commonsense AI

Bias in Data

AI systems inherit biases from their training data. For commonsense reasoning, this can amplify stereotypes or exclude perspectives. For example, if an AI learns from data that primarily reflects Western culture, it might struggle to understand non-Western norms, creating misunderstandings.

Misuse of Power

Highly capable systems with commonsense reasoning could be misused for manipulation or misinformation. For instance, chatbots that skillfully mimic human reasoning might exploit users’ trust to promote false narratives or biased agendas.

- Bias in Data: Includes challenges like fairness, representation issues, and systemic bias.

- Misuse of Power: Highlights risks such as exploitation, manipulation, and unregulated use.

- Accountability: Focuses on trust, transparency, and responsibility.

- Overlapping Areas: Shared challenges include trust and transparency, which are critical across all domains.

Accountability and Decision-Making

Who is responsible when a commonsense AI system makes a mistake? Unlike traditional rule-based programs, whose logic can be traced step by step, machine learning models often can’t explain their reasoning. This lack of transparency creates challenges for accountability, especially in high-stakes areas like autonomous driving or legal decision-making.

The Future of Commonsense AI

Advances on the Horizon

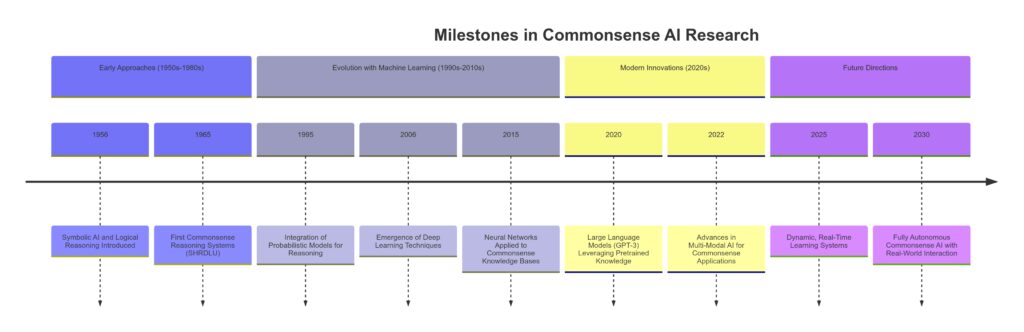

Innovative research is steadily narrowing the commonsense gap. Efforts such as OpenAI’s models and the multi-modal systems from Meta’s FAIR lab (formerly Facebook AI Research) are creating more robust frameworks for contextual reasoning. These advances are supported by growing datasets, smarter algorithms, and cross-disciplinary collaboration.

The Road Ahead

While the field is progressing, true commonsense AI remains a moonshot. Researchers envision systems capable of real-time learning, nuanced understanding, and adaptable decision-making. But achieving this requires not only technical breakthroughs but also ongoing dialogue about ethics and safety.

| Feature | Current AI Systems | Future Goals |
|---|---|---|
| Contextual Understanding | 📄 Limited: Relies on predefined datasets and lacks deep context awareness | 🌐 Comprehensive: Interprets nuanced and dynamic contexts in real-world scenarios |
| Adaptability | ⚙️ Static: Struggles to adapt to new environments without retraining | 🔄 Dynamic: Adapts to changing environments in real time without additional retraining |
| Real-Time Learning | ⏱️ Minimal: Learning is batch-oriented and requires significant time for updates | ⚡ Instantaneous: Updates and learns in real time based on evolving scenarios |
| Human-Like Reasoning | 🤖 Basic: Operates on patterns and rules, often missing commonsense logic | 🧠 Advanced: Mimics human reasoning with commonsense logic integrated into decision-making |
| Integration with Multi-Modal Data | 📊 Isolated: Processes data from individual modalities with limited cross-modal integration | 📈 Unified: Seamlessly integrates text, images, audio, and video for richer commonsense reasoning |
| Scalability | 📉 Limited: Struggles to handle large-scale, diverse applications | 🚀 Extensive: Easily scales across global, multi-domain applications |

Bringing It All Together

Commonsense reasoning is the linchpin for AI systems that interact seamlessly with humans. While challenges abound, emerging solutions promise a brighter future for AI, transforming how machines think and act in our world. With sustained innovation, the dream of machines that “just get it” may eventually come within reach.

FAQs

Can AI learn commonsense reasoning over time?

AI can improve with exposure to diverse data and advanced models like multi-modal AI or hybrid approaches combining symbolic reasoning with machine learning. However, achieving true commonsense reasoning comparable to humans remains a long-term goal.

What ethical concerns are associated with commonsense AI?

Key ethical concerns include bias in training data, the potential for misuse, and accountability for AI decisions. These challenges are particularly critical in sensitive applications like healthcare, autonomous driving, and law enforcement.

How is multi-modal AI helping overcome commonsense challenges?

Multi-modal AI integrates various data types—such as text, images, and audio—to improve contextual understanding. This approach mimics human perception, enabling AI to make better-informed and more accurate decisions.

What does the future hold for commonsense reasoning in AI?

Future advancements aim to make AI systems more adaptive, context-aware, and capable of real-time learning. With ongoing innovation and ethical oversight, AI could eventually achieve human-level commonsense reasoning, transforming industries and everyday life.

How does AI currently acquire commonsense knowledge?

AI acquires commonsense knowledge through large-scale datasets, knowledge graphs like ConceptNet, and training on diverse content from the internet. However, these methods often lack depth and don’t fully capture the nuances of human reasoning.

What is the role of symbolic AI in commonsense reasoning?

Symbolic AI uses predefined rules and logic to reason through problems, making it valuable for tasks requiring structured thinking. When combined with machine learning, symbolic AI helps bridge gaps in commonsense understanding by applying logical frameworks to supplement learned patterns.

Why are current datasets insufficient for teaching commonsense reasoning?

Most datasets used for AI training focus on explicit facts, domain-specific knowledge, or labeled examples. Commonsense knowledge, like “water extinguishes fire” or “people eat food when hungry,” isn’t commonly documented, making it difficult to include in training.

How does computational complexity affect commonsense reasoning in AI?

Commonsense reasoning often involves handling uncertainty, probabilities, and interrelated factors, which require significant computational power. Simulating this complexity in real-world scenarios, where multiple variables interact, remains a technological bottleneck.

Can commonsense reasoning improve AI reliability in high-stakes fields?

Yes, adding commonsense reasoning improves AI’s ability to make safe and logical decisions. For instance, in healthcare, commonsense-aware AI could avoid misdiagnosing based on improbable scenarios, and in robotics, it could prevent hazardous actions, like leaving an obstacle in someone’s path.

How do cultural differences affect AI’s commonsense reasoning?

Commonsense knowledge often varies by culture. For instance, customs around greetings or meal times differ globally. AI trained on data from one region may misinterpret norms or behavior from another, underscoring the need for diverse, representative training datasets.

What is the role of human oversight in commonsense AI development?

Human oversight helps ensure that AI learns commonsense in a way that aligns with ethical values and avoids harmful biases. Researchers and developers monitor AI training, refine its reasoning processes, and address gaps where the system falls short.

Can AI detect and respond to humor or sarcasm?

Detecting humor or sarcasm is notoriously difficult for AI because these rely heavily on tone, context, and shared knowledge. Advances in multi-modal AI and sentiment analysis are helping systems get better at understanding subtle cues, but the results are far from perfect.

What industries stand to benefit most from commonsense AI?

Healthcare, autonomous systems, education, and customer service are among the top beneficiaries. Commonsense AI enhances decision-making, improves safety, personalizes experiences, and makes interactions with machines feel more natural and human-like.

How are researchers evaluating commonsense in AI systems?

Researchers use benchmarks like Winograd Schemas, SWAG (Situations With Adversarial Generations), and CommonsenseQA to test how well AI systems reason through everyday scenarios. These tests evaluate AI’s ability to handle ambiguity, context, and logical inferences.
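At their core, these benchmarks are scored as multiple-choice accuracy. The sketch below uses two classic Winograd schema pairs, in which swapping a single word flips the correct referent of the pronoun. The item format and scoring function are hypothetical, not the official data format of any benchmark. The sketch shows why the paired design matters: a resolver that always picks the first-mentioned candidate scores exactly 50%, no better than chance.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class SchemaItem:
    sentence: str
    pronoun: str
    candidates: Tuple[str, str]
    answer: str


# Two classic twin pairs: changing one word flips which candidate the pronoun refers to.
ITEMS = [
    SchemaItem("The trophy doesn't fit into the brown suitcase because it is too large.",
               "it", ("the trophy", "the suitcase"), "the trophy"),
    SchemaItem("The trophy doesn't fit into the brown suitcase because it is too small.",
               "it", ("the trophy", "the suitcase"), "the suitcase"),
    SchemaItem("The city councilmen refused the demonstrators a permit because they feared violence.",
               "they", ("the city councilmen", "the demonstrators"), "the city councilmen"),
    SchemaItem("The city councilmen refused the demonstrators a permit because they advocated violence.",
               "they", ("the city councilmen", "the demonstrators"), "the demonstrators"),
]

Resolver = Callable[[SchemaItem], str]


def accuracy(resolve: Resolver, items: List[SchemaItem]) -> float:
    """Fraction of items where the resolver picks the correct referent."""
    correct = sum(resolve(item) == item.answer for item in items)
    return correct / len(items)


def first_mention(item: SchemaItem) -> str:
    """Surface heuristic with no commonsense: always pick the first candidate."""
    return item.candidates[0]


print(accuracy(first_mention, ITEMS))  # 0.5
```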

Will AI ever fully replicate human commonsense?

While AI is advancing rapidly, fully replicating human commonsense remains an ambitious goal. Humans have an innate ability to learn from experience, adapt to new environments, and understand subtle nuances—all of which are extremely complex to encode into machines.

Resources

Research Papers and Publications

- “Commonsense Reasoning and Knowledge in AI”

This foundational paper explores the key challenges and techniques in equipping AI systems with commonsense reasoning capabilities.

- “ConceptNet 5: A Large Semantic Network for Commonsense Knowledge”

A deep dive into ConceptNet, one of the most prominent knowledge graphs designed to support commonsense reasoning in AI.

- “The Winograd Schema Challenge as a Framework for Commonsense Reasoning”

This paper explains how the Winograd Schema Challenge is used to test and measure AI’s ability to reason through commonsense problems.

Online Tools and Frameworks

- ConceptNet

A freely available knowledge graph that provides structured relationships between concepts to aid commonsense understanding.

- AllenNLP

An open-source natural language processing research library built on PyTorch, with models for tasks such as reading comprehension and question answering.

- COMET (Commonsense Transformers)

A tool for generating commonsense knowledge using transformers. Ideal for integrating inferential capabilities into AI systems.