When deciding between XGBoost and Neural Networks for your machine learning project, it helps to understand the strengths and weaknesses of each model. Both are popular choices, but the best option depends on your specific use case, data characteristics, and project goals.

In this article, we’ll dive deep into the comparison of XGBoost vs. Neural Networks, discussing the advantages, limitations, and when to use each approach.

What is XGBoost?

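XGBoost, short for Extreme Gradient Boosting, is an efficient open-source implementation of gradient-boosted decision trees: it builds trees one after another, with each new tree correcting the errors of the trees before it. It’s widely recognized for its speed and predictive performance, especially on structured/tabular data.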
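To make the idea of gradient boosting more concrete, here is a deliberately simplified sketch in plain Python. It is not how XGBoost itself is implemented (the real library adds second-order gradient information, regularization, and many engineering optimizations). It only illustrates the core loop, in which each small tree is fit to the errors left by the previous ones. The toy data and the function names `fit_stump`, `boost`, and `mse` are made up for this illustration.

```python
# Simplified gradient boosting for regression with squared error.
# Each round fits a one-split tree (a "stump") to the current residuals.

def fit_stump(xs, residuals):
    """Find the threshold on a single feature that best splits the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the max so the right side is never empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return threshold, left_mean, right_mean


def boost(xs, ys, n_rounds=20, learning_rate=0.3):
    """Start from the mean prediction and add one shrunken stump per round."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        threshold, left_mean, right_mean = fit_stump(xs, residuals)
        stumps.append((threshold, left_mean, right_mean))
        preds = [
            p + learning_rate * (left_mean if x <= threshold else right_mean)
            for x, p in zip(xs, preds)
        ]
    return base, stumps, preds


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


# Toy data, invented for illustration only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [1.2, 1.9, 3.2, 3.8, 5.1, 6.3, 6.8, 8.1]

base, stumps, preds = boost(xs, ys)
print("MSE of the constant baseline:", mse(ys, [base] * len(ys)))
print("MSE after boosting:", mse(ys, preds))
```

The training error after boosting is lower than the error of the constant baseline, because every round removes part of the remaining residual.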

Key Features of XGBoost

- Fast and Scalable: XGBoost is optimized for speed and resource efficiency. It can handle large datasets quickly.

- Regularization: It includes L1 and L2 regularization, which helps prevent overfitting.

- Tree-based Model: XGBoost uses decision trees, making it effective for structured data made up of numerical and categorical features.

Advantages of XGBoost

- Excellent for Structured Data: XGBoost excels when your data is in a tabular format with well-defined features.

- Out-of-the-Box Performance: With minimal tuning, XGBoost often delivers strong results, thanks to its efficient use of boosting.

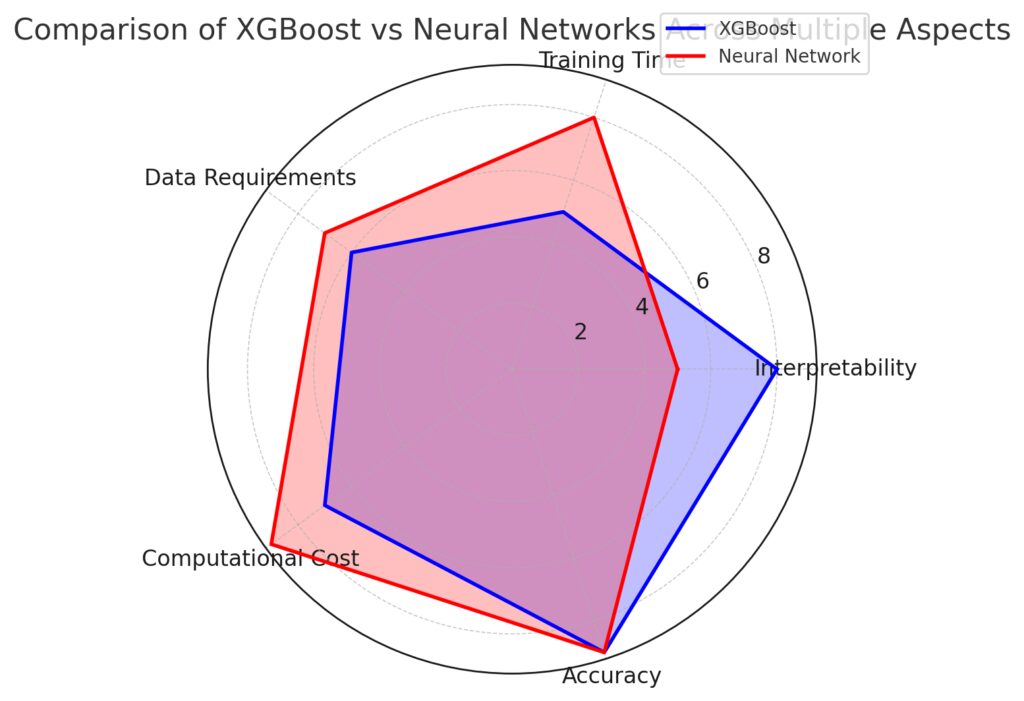

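- Interpretability: A single decision tree is easy to read, and tree ensembles come with well-established tools such as feature importance scores. This usually makes XGBoost easier to explain than a neural network, and more suitable when explainability is a priority.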

Limitations of XGBoost

- Not Ideal for Unstructured Data: XGBoost struggles with unstructured data like images, text, and audio.

- Can Overfit on Small Datasets: Despite regularization, XGBoost may overfit when trained on small datasets if not tuned carefully.

What are Neural Networks?

Neural Networks are a class of machine learning algorithms loosely inspired by the human brain. They consist of layers of interconnected nodes, or “neurons,” each of which computes a weighted sum of its inputs and passes it through a non-linear function. Neural networks are especially powerful for tasks involving unstructured data, such as image recognition, natural language processing, and speech analysis.
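As a small illustration of what "layers of neurons" means, the sketch below wires up a tiny network by hand that computes XOR, a function no single linear model can represent. The weights are chosen manually for this example. In practice they would be learned from data, typically with backpropagation.

```python
def relu(z):
    """A common non-linear activation: pass positives through, clip negatives to zero."""
    return max(0.0, z)


def forward(x1, x2):
    # Hidden layer: two neurons, each a weighted sum of the inputs followed by ReLU.
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: a weighted sum of the hidden activations.
    return 1.0 * h1 - 2.0 * h2


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), "->", forward(x1, x2))
```

The four inputs map to 0.0, 1.0, 1.0, and 0.0. Without the non-linear `relu` step the two layers would collapse into a single linear function, and XOR would be out of reach.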

Key Features of Neural Networks

- Highly Flexible: Neural networks can be tailored to virtually any problem, from simple predictions to complex pattern recognition tasks.

- Deep Learning: When extended to deep neural networks, they excel at learning complex representations in data.

- Handles Unstructured Data: Neural networks shine in tasks involving image, text, and speech data, which are difficult for traditional models like XGBoost.

Advantages of Neural Networks

- Great for Unstructured Data: Neural networks are highly effective when working with images, audio, or text.

- Scalable to Complex Problems: With deep learning, neural networks can tackle very complex problems by learning intricate data representations.

- Automated Feature Learning: Neural networks can learn and extract important features from raw data without the need for extensive preprocessing.

Limitations of Neural Networks

- Requires Large Datasets: Neural networks typically need a lot of data to perform well, making them less ideal for small datasets.

- Computationally Expensive: Training deep neural networks can require significant computational power, especially on larger models.

- Difficult to Interpret: Neural networks are often referred to as “black boxes” due to their complex structure, making them hard to interpret.

XGBoost vs. Neural Networks: Head-to-Head Comparison

1. Data Type

- XGBoost: Best for structured/tabular data with clear feature definitions.

- Neural Networks: Superior for unstructured data like images, text, and audio.

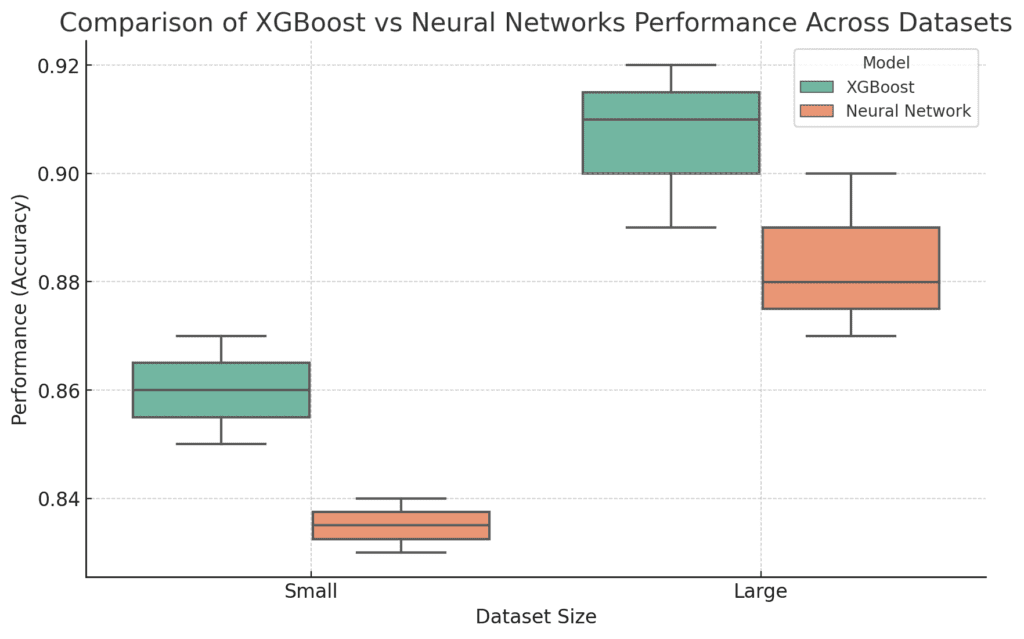

2. Performance

- XGBoost: Consistently strong performance for most structured datasets with relatively low computational cost.

- Neural Networks: Can outperform other models on highly complex tasks, but often require more data and resources.

3. Model Interpretability

- XGBoost: Offers more interpretability, making it a better choice in applications where explainability is important (e.g., finance, healthcare).

- Neural Networks: More difficult to interpret, often considered a “black box.”

4. Computational Resources

- XGBoost: Less resource-intensive and generally faster to train.

- Neural Networks: Typically more computationally expensive, especially as you increase the depth and complexity of the network.

5. Training Time

- XGBoost: Faster training times, especially on large datasets.

- Neural Networks: Often require more time to train, particularly for deep learning models.

When to Use XGBoost

Consider using XGBoost in the following scenarios:

- Your data is structured: If you’re working with tables of data with defined columns and features, XGBoost is likely to perform well.

- You need interpretability: When decisions need to be explained clearly, such as in medical diagnosis or financial models, XGBoost’s decision trees offer more transparency.

- You have limited computational resources: XGBoost can run efficiently on less powerful hardware, making it a better choice for projects with limited resources.

When to Use Neural Networks

Neural Networks might be the right choice if:

- Your data is unstructured: For images, audio, or natural language data, neural networks are the go-to solution.

- You have a large dataset: Neural networks tend to outperform other models when provided with vast amounts of data.

- The problem requires feature learning: If your task involves discovering complex patterns or relationships in the data (e.g., image classification or language modeling), neural networks excel by automatically learning important features.

Conclusion: XGBoost or Neural Networks?

The choice between XGBoost and Neural Networks ultimately depends on the nature of your data and project requirements. If you’re dealing with structured data and need a fast, interpretable model, XGBoost is likely the best choice. However, if your project involves unstructured data or requires advanced feature learning, Neural Networks may offer the flexibility and power you need.

FAQs

Are Neural Networks harder to train than XGBoost?

Yes, Neural Networks are often more difficult and time-consuming to train than XGBoost. They require careful tuning, more computational power, and large datasets. XGBoost, on the other hand, typically requires less tuning and trains faster, especially on structured data.

Can XGBoost handle image or text data?

XGBoost is not designed for unstructured data such as images or text. While it can be used with engineered features from such data, Neural Networks are far superior for tasks like image classification or natural language processing because they excel at automatically extracting features from raw data.

How does model interpretability differ between XGBoost and Neural Networks?

XGBoost is more interpretable because it is based on decision trees, making it easier to understand how it arrives at predictions. Neural Networks, however, are often considered “black boxes,” as their deep and complex structures make it difficult to explain the decision-making process.
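To see why a tree-based prediction is easy to follow, consider the sketch below. It walks one row through a single hand-written tree and records every rule it passes. The tree, its feature names (`income`, `debt_ratio`), and its leaf values are hypothetical. A real XGBoost model sums the outputs of many such trees, so in practice people usually rely on aggregated views such as feature importance rather than reading each tree.

```python
# A hypothetical hand-written tree, stored as nested dictionaries.
tree = {
    "feature": "income",
    "threshold": 50000,
    "left": {"leaf": -0.4},
    "right": {
        "feature": "debt_ratio",
        "threshold": 0.35,
        "left": {"leaf": 0.6},
        "right": {"leaf": -0.1},
    },
}


def explain(node, row, path=None):
    """Return the leaf value reached by `row` and the list of rules applied on the way."""
    path = [] if path is None else path
    if "leaf" in node:
        return node["leaf"], path
    value = row[node["feature"]]
    if value < node["threshold"]:
        path.append(f"{node['feature']} = {value} < {node['threshold']}")
        return explain(node["left"], row, path)
    path.append(f"{node['feature']} = {value} >= {node['threshold']}")
    return explain(node["right"], row, path)


score, path = explain(tree, {"income": 72000, "debt_ratio": 0.2})
print("score:", score)
for rule in path:
    print("  because", rule)
```

For this row the tree returns 0.6 after applying two rules, one on `income` and one on `debt_ratio`. A neural network offers no comparably direct trace from inputs to output.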

Which model is better for structured data?

XGBoost is typically the better choice for structured data. It is fast, efficient, and often outperforms more complex models, including Neural Networks, particularly on small to medium-sized tabular datasets.

Do Neural Networks outperform XGBoost on all tasks?

No, Neural Networks do not outperform XGBoost on all tasks. While Neural Networks excel at unstructured data and tasks requiring complex pattern recognition, XGBoost often performs better on structured data, particularly when the dataset is smaller or more straightforward.

When should I choose Neural Networks over XGBoost?

You should choose Neural Networks when working with unstructured data, such as images, text, or audio, or when your task requires learning complex patterns. If you have a large dataset and sufficient computational resources, Neural Networks will likely yield better results for these types of problems.

Is XGBoost more resource-efficient than Neural Networks?

Yes, XGBoost is generally more resource-efficient. It requires less computational power and can train faster on structured data compared to Neural Networks, which often require significant computational resources, especially when deep learning techniques are applied.

Are both models suitable for real-time applications?

XGBoost can be more suitable for real-time applications because tree ensembles are cheap to evaluate, so predictions usually come back with low latency on modest hardware. Neural Networks can also be used in real-time systems but may require more tuning and optimization, such as smaller architectures or hardware acceleration, to meet latency requirements.

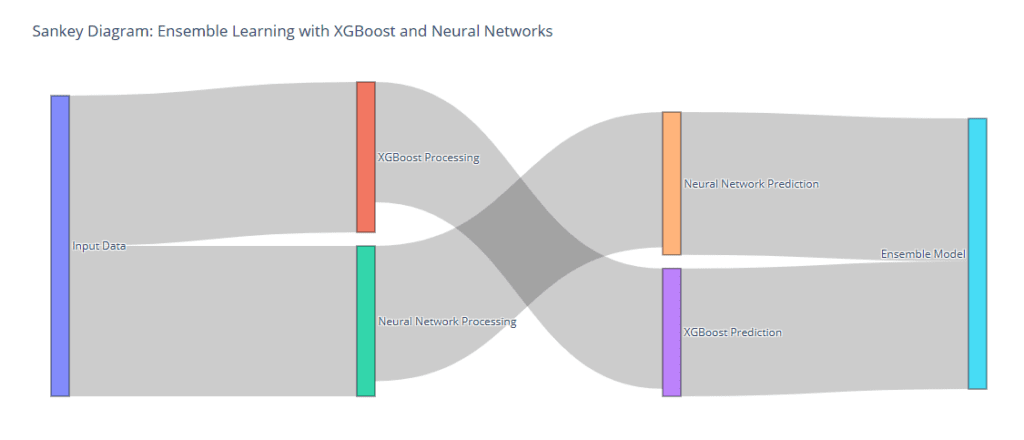

Can I use XGBoost and Neural Networks together?

Yes, you can use XGBoost and Neural Networks together in what’s known as an ensemble model. In such an approach, predictions from both models are combined to improve overall performance. For example, you might use Neural Networks to handle unstructured data and XGBoost to deal with structured features, then merge their predictions.
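One simple way to merge the predictions is a weighted average of the probabilities each model outputs. The sketch below assumes both models have already produced a probability for the same three examples. The numbers and the `blend` helper are invented for illustration, and more elaborate schemes (such as training a third model on top of the two) are also possible.

```python
def blend(nn_probs, xgb_probs, nn_weight=0.5):
    """Weighted average of two models' predicted probabilities, example by example."""
    if len(nn_probs) != len(xgb_probs):
        raise ValueError("both models must score the same examples")
    return [
        nn_weight * p_nn + (1 - nn_weight) * p_xgb
        for p_nn, p_xgb in zip(nn_probs, xgb_probs)
    ]


# Hypothetical probabilities from each model for the same three examples.
nn_probs = [0.9, 0.2, 0.6]
xgb_probs = [0.7, 0.4, 0.4]

blended = blend(nn_probs, xgb_probs)
print(blended)
```

The weight is itself something to tune on a validation set: if one model is clearly stronger, it should usually get more say.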

Which model has better generalization to unseen data?

Both models can generalize well to unseen data if properly tuned, but XGBoost has built-in regularization techniques (L1 and L2) that help prevent overfitting, making it easier to control generalization. Neural Networks, on the other hand, are prone to overfitting, especially with smaller datasets, unless regularization techniques like dropout or early stopping are applied.
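Early stopping, mentioned above, is easy to sketch without any framework: track the validation loss after each epoch and stop once it has failed to improve for a set number of epochs. The loss values and the `patience` setting below are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch, stopping once `patience` epochs pass without improvement."""
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            break  # validation loss has stalled, so stop training here
    return best_epoch


# Hypothetical validation losses, one per training epoch.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.55]
print(early_stopping_epoch(val_losses, patience=2))
```

With a patience of 2, training stops after epoch 4 and keeps the model from epoch 2, so the later improvement at epoch 5 is never seen. That is the usual trade-off: a small patience saves time but can stop too early.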

Are Neural Networks better for time series data?

Neural Networks, especially Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs), are often well suited to time series data because they can learn temporal dependencies directly from sequences. However, XGBoost can also be adapted to work with time series data, especially when temporal features such as lagged values are manually engineered.
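Manually engineering temporal features often starts with lagged values: each training row holds the previous few observations, and the target is the next one. The sketch below shows that transformation on a made-up series. The helper name `make_lag_features` is hypothetical.

```python
def make_lag_features(series, n_lags):
    """Turn a sequence into (rows of the previous n_lags values, next value) pairs."""
    rows, targets = [], []
    for t in range(n_lags, len(series)):
        rows.append(series[t - n_lags:t])
        targets.append(series[t])
    return rows, targets


# A made-up series, for illustration only.
series = [10, 12, 13, 15, 18, 21]
X, y = make_lag_features(series, n_lags=3)

for row, target in zip(X, y):
    print(row, "->", target)
```

The resulting table is ordinary tabular data, which is the kind of input a tree-based model handles well. Rolling averages or calendar features could be added as extra columns in the same way.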

How much data do I need for Neural Networks?

Neural Networks generally need large amounts of data to perform at their best, and deep models for images or text are often trained on many thousands to millions of examples. XGBoost, by contrast, can perform well even with moderate-sized datasets.

Can XGBoost be used for classification and regression?

Yes, XGBoost can be used for both classification and regression tasks. It is highly versatile and can handle binary classification, multi-class classification, and regression tasks with ease.

Are Neural Networks better for classification or regression tasks?

Neural Networks are highly flexible and work well for both classification and regression tasks. However, for simpler regression tasks or small datasets, XGBoost often outperforms Neural Networks due to its ability to handle structured data more efficiently.

Which model is more suitable for beginners?

XGBoost is generally easier for beginners to start with because it requires less data and computational power, and provides strong out-of-the-box performance with minimal tuning. Neural Networks, especially deep learning models, can be more challenging for beginners due to their complexity and need for more data.

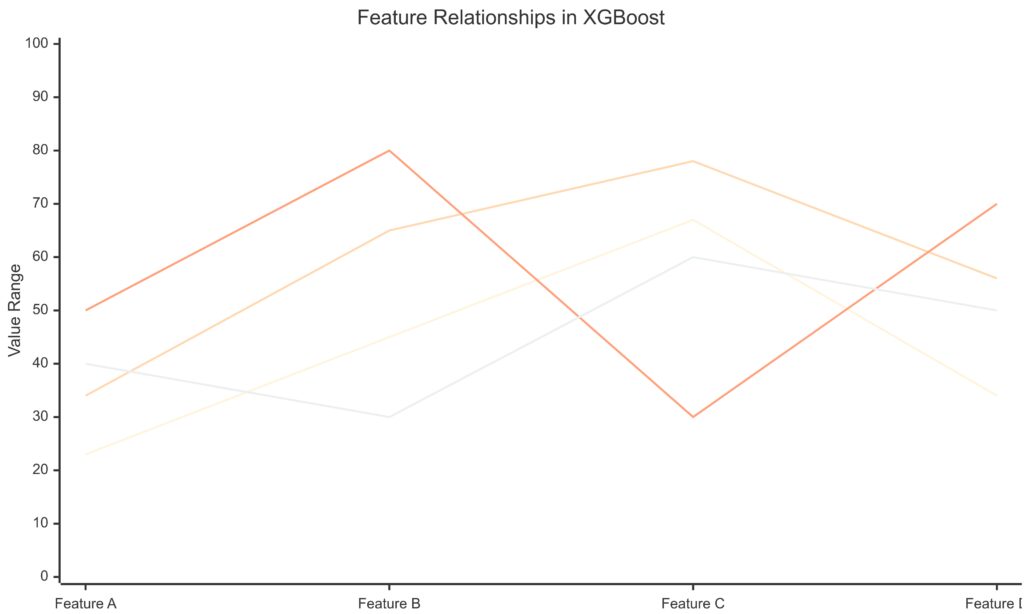

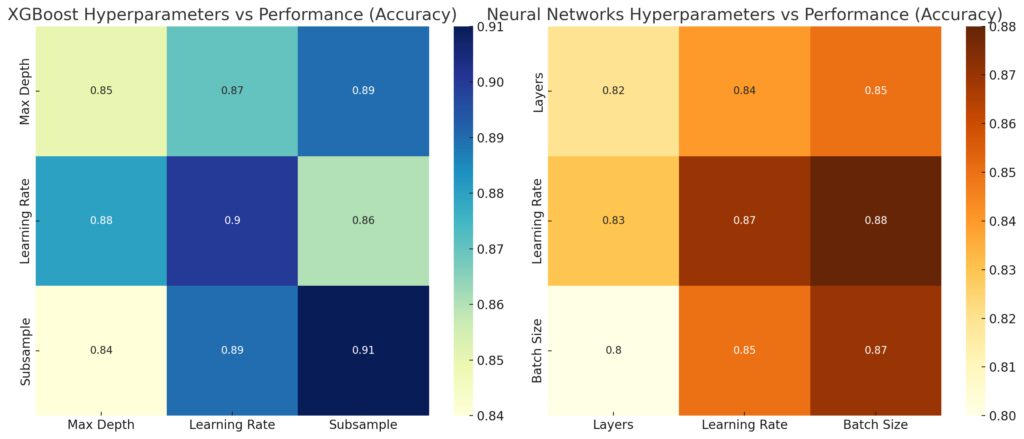

How does hyperparameter tuning differ between XGBoost and Neural Networks?

In XGBoost, hyperparameter tuning involves adjusting parameters like the learning rate, max depth of trees, and regularization terms. While important, these parameters are generally easier to tune than those in Neural Networks, where tuning involves more complex choices like the number of layers, neurons per layer, learning rate, and activation functions.
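The sketch below writes both search spaces down as plain dictionaries and enumerates every combination. The XGBoost keys use the parameter names from its scikit-learn style interface (`learning_rate`, `max_depth`, `reg_alpha`, `reg_lambda`). The neural network keys and all candidate values are hypothetical and would depend on the framework and the problem.

```python
from itertools import product

# Candidate values are illustrative, not recommendations.
xgb_grid = {
    "learning_rate": [0.03, 0.1, 0.3],
    "max_depth": [3, 6],
    "reg_alpha": [0.0, 0.1],    # L1 regularization
    "reg_lambda": [1.0, 10.0],  # L2 regularization
}

# Hypothetical names for a generic feed-forward network.
nn_grid = {
    "n_layers": [2, 4],
    "units_per_layer": [64, 256],
    "learning_rate": [1e-4, 1e-3],
    "activation": ["relu", "tanh"],
}


def expand(grid):
    """List every combination of the candidate values in a grid."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*grid.values())]


print(len(expand(xgb_grid)), "XGBoost configurations")
print(len(expand(nn_grid)), "neural network configurations")
print(expand(xgb_grid)[0])
```

Even these small grids produce 24 and 16 candidates. An exhaustive grid grows quickly as parameters are added, which is why random or guided search is common for larger spaces.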

Can XGBoost or Neural Networks handle missing data?

XGBoost has a built-in capability to handle missing data, making it a good choice for datasets with incomplete records. Neural Networks do not handle missing data natively, so preprocessing steps like imputation are typically required before feeding the data into the network.

Is model training faster in XGBoost or Neural Networks?

XGBoost is typically faster to train than Neural Networks, especially on structured data. Neural Networks, particularly deep networks, require more time due to their complexity, large number of parameters, and need for powerful computational resources like GPUs.

Are pre-trained models available for XGBoost and Neural Networks?

Pre-trained models are more commonly found for Neural Networks, especially in tasks involving image recognition (e.g., VGG, ResNet) or natural language processing (e.g., GPT, BERT). These models can be fine-tuned on your specific task. XGBoost, however, usually requires training from scratch on your dataset, as pre-trained models are less common.