Named Entity Recognition (NER) is a core Natural Language Processing (NLP) task that extracts structured information from unstructured text. Traditionally, NER models require vast amounts of labeled training data. But Zero-Shot and Few-Shot Learning (ZSL & FSL) are changing the game.

Can AI truly recognize entities without task-specific training data? Let’s explore how these cutting-edge techniques are reshaping NER.

Understanding NER and Its Challenges

What Is Named Entity Recognition (NER)?

NER is an NLP task that identifies and classifies entities such as:

- People (e.g., “Elon Musk”)

- Organizations (e.g., “OpenAI”)

- Locations (e.g., “New York”)

- Dates & Events (e.g., “WWII” or “March 2024”)

Traditional NER models rely on large, annotated datasets to detect these entities accurately. But what happens when labeled data is scarce?

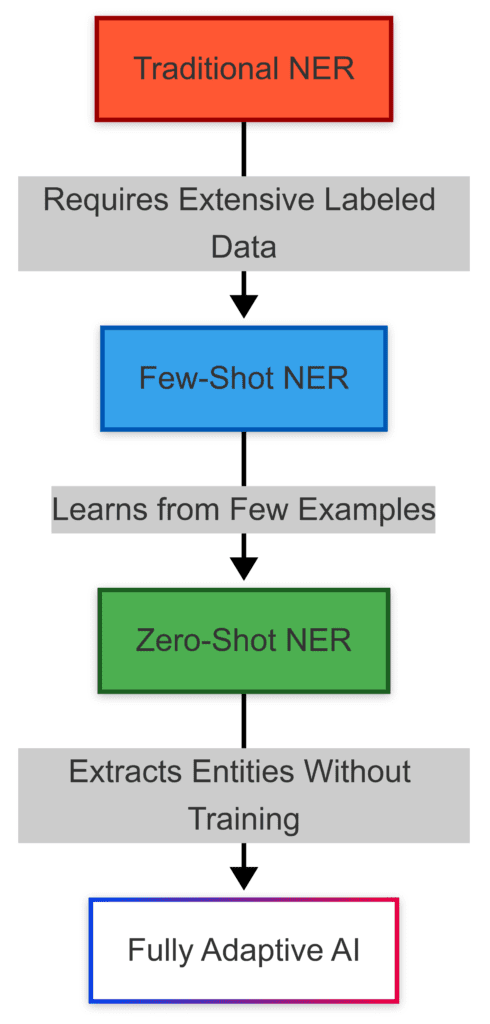

The evolution of Named Entity Recognition from traditional supervised learning to Zero-Shot and Few-Shot approaches.

The Data Bottleneck in NER Models

High-performing NER models often need thousands to millions of labeled examples. However, creating such datasets is:

- Expensive – Requires human annotators and domain expertise.

- Time-consuming – Takes weeks or months to curate quality data.

- Domain-sensitive – A general NER model may struggle with specialized fields like medicine or law.

This is where Zero-Shot and Few-Shot Learning offer a paradigm shift.

What Is Zero-Shot Learning in NER?

How Zero-Shot Learning Works

Zero-Shot Learning (ZSL) enables AI models to perform tasks without any labeled training examples for the target task. Instead, these models rely on:

- Pretrained Language Models – Like GPT, BERT, and T5, which are pretrained on vast amounts of text.

- Prompt Engineering – Using natural language instructions to guide the model.

- Semantic Similarity – Matching candidate spans to entity-type names or descriptions by comparing their embeddings.

For example, if asked to identify chemical names, a Zero-Shot model can generalize from its language understanding, even without any labeled examples of chemical names.

Key Advantages of ZSL for NER

- No need for labeled data – Saves time and costs.

- Scalable across domains – Works in diverse fields like finance, healthcare, or cybersecurity.

- Quick adaptation – New entity types can be recognized with just a well-designed prompt.

Limitations of Zero-Shot NER

- Lower accuracy – Lacks task-specific fine-tuning.

- Prompt sensitivity – Poorly designed prompts lead to misinterpretations.

- Knowledge gaps – Struggles with entities and terminology that are rare or absent in its pretraining data.

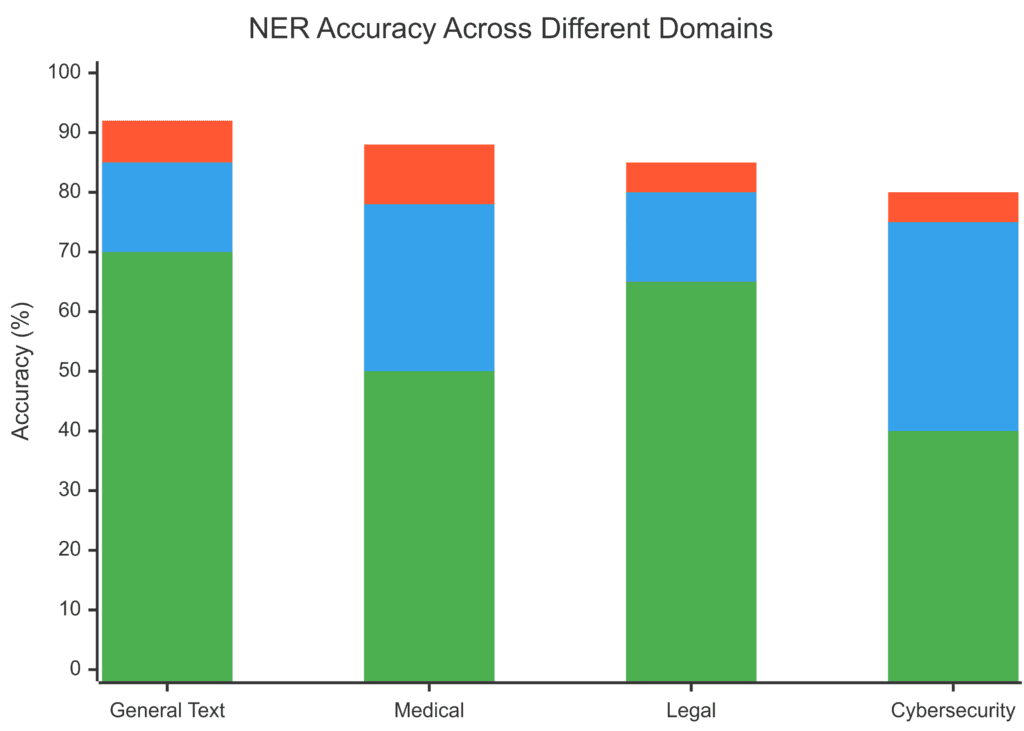

Performance comparison of Zero-Shot, Few-Shot, and Traditional NER across different domains.

Few-Shot Learning: A Middle Ground Approach

How Few-Shot Learning Works

Few-Shot Learning (FSL) provides the model with a few labeled examples (typically 1 to 100) to improve its understanding of new entity types.

It leverages:

- Fine-tuning on small datasets – Adapts models with minimal supervision.

- Meta-learning techniques – Helps AI generalize from limited samples.

- Contextual embeddings – Uses word relationships to recognize entities.

For example, an FSL-powered NER model trained on just five examples of medical terms may generalize reasonably well to unseen clinical texts, though results depend heavily on which examples are chosen.

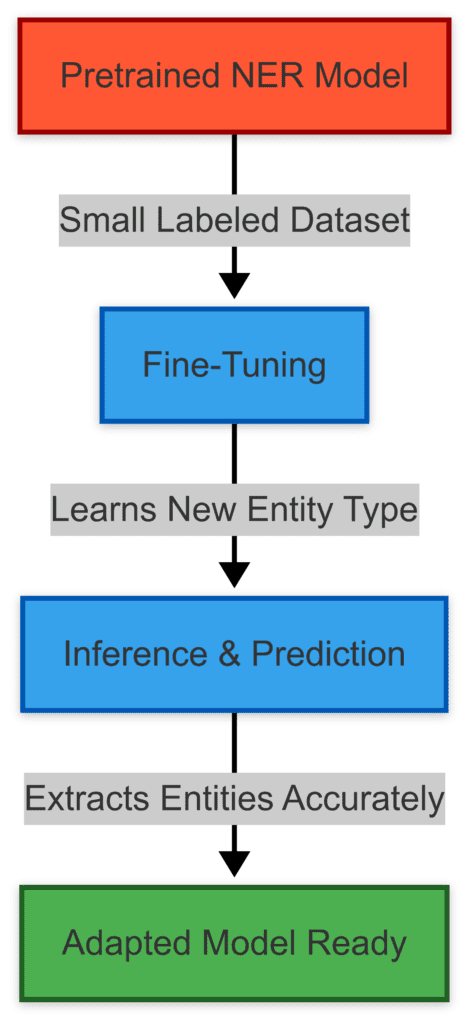

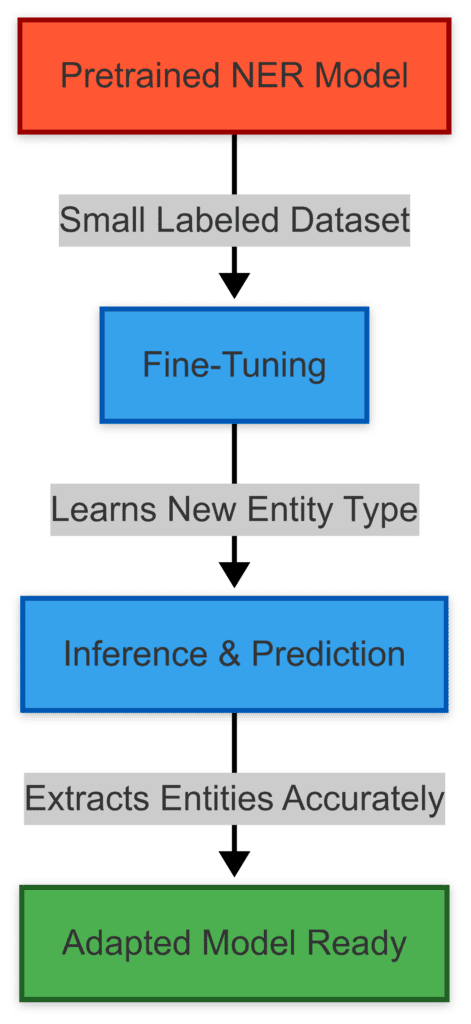

The adaptation process of a Few-Shot NER model when encountering a new entity type.

Why Few-Shot NER Is Powerful

- Requires minimal data – A few examples can significantly boost performance.

- Domain adaptability – Works for niche fields like biomedicine, law, or engineering.

- Higher accuracy than Zero-Shot – Some task-specific learning improves reliability.

Challenges of Few-Shot NER

- Still data-dependent – Needs some labeled examples.

- Sensitive to example quality – Poorly chosen samples lead to biases.

- Computational cost – Fine-tuning even on small datasets requires resources.

Applications of Zero-Shot and Few-Shot NER

1. Legal and Compliance Document Processing

Legal texts often contain complex entity types (e.g., contract clauses, case law citations). Zero-Shot NER can identify legal entities without task-specific labeled data, making compliance automation faster.

2. Biomedical Text Mining

Few-Shot Learning is useful in medicine, where new disease names, drug names, and gene interactions emerge constantly. Instead of retraining a model, providing a handful of examples allows it to adapt quickly.

3. Financial News & Risk Analysis

Finance-related NER models can track company names, stock symbols, and economic events. Zero-Shot models help detect emerging entities, while Few-Shot learning refines accuracy.

4. Cybersecurity Threat Intelligence

Cybersecurity teams need to extract malware names, attack types, and threat actors from reports. Few-Shot NER helps identify new threats with minimal labeled data.

5. Customer Support & Sentiment Analysis

Companies use NER for automated customer support to recognize product names, complaint types, or user feedback trends. Zero-Shot models can categorize new issues on the fly.

This is just the start—Zero-Shot and Few-Shot NER are unlocking new possibilities in AI-driven information extraction.

Techniques and Architectures for Zero-Shot and Few-Shot NER

1. Pretrained Language Models for Zero-Shot NER

Zero-Shot NER relies on powerful pretrained transformers like:

- GPT-4, T5, and BERT – Models trained on vast text corpora.

- RoBERTa & XLM-R – RoBERTa is an English encoder; XLM-R, pretrained on text in about 100 languages, supports entity recognition across languages.

- BART & T5 for text-to-text generation – Converting raw text into structured entity labels.

Zero-Shot NER accuracy across different languages and entity categories, highlighting performance gaps in non-English languages.

Instead of task-specific supervised training, these models build on:

- Masked Language Modeling (MLM) – Predicting missing words to learn contextual embeddings.

- Contrastive Learning – Understanding entity relationships without explicit labels.

- Prompt-based NER – Defining entity types through natural language instructions.

For example, a Zero-Shot NER prompt could be:

“Identify all company names in this text.” The model can follow this instruction without having been trained on labeled company-name examples.
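Prompt-based extraction is mostly string handling around a model call. The minimal sketch below builds an instruction prompt that asks for a JSON array, then parses the reply and keeps only spans that literally occur in the input, a cheap guard against hallucinated entities. The model call itself is deliberately left out: `fake_reply` is a hypothetical stand-in for whatever an LLM API returns, and the JSON output format is an assumption of this sketch, not a fixed convention.

```python
import json


def build_prompt(text: str, entity_type: str) -> str:
    """Build a zero-shot extraction prompt that asks for a JSON list of strings."""
    return (
        f"Identify all {entity_type} names in the text below.\n"
        "Return only a JSON array of strings, copied exactly from the text.\n\n"
        f"Text: {text}"
    )


def parse_reply(reply: str, text: str) -> list[str]:
    """Parse the model's JSON reply, keeping unique spans found verbatim in the text."""
    try:
        items = json.loads(reply)
    except json.JSONDecodeError:
        return []  # malformed output: treat as "no entities" instead of crashing
    if not isinstance(items, list):
        return []
    seen, spans = set(), []
    for item in items:
        if isinstance(item, str) and item in text and item not in seen:
            seen.add(item)
            spans.append(item)
    return spans


text = "Amazon and Google reported record earnings last quarter."
prompt = build_prompt(text, "company")
# Hypothetical model output; "Microsoft" is not in the text, so it is dropped.
fake_reply = '["Amazon", "Google", "Microsoft"]'
print(parse_reply(fake_reply, text))  # ['Amazon', 'Google']
```

Checking spans against the source text will not catch a real span with the wrong type, but it does filter out names the model invented.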

2. Few-Shot Learning with Meta-Learning

Few-Shot NER benefits from meta-learning techniques like:

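- Prototypical Networks – Average the embeddings of a few labeled examples per entity type into a “prototype,” then label new tokens by their distance to each prototype in embedding space.

- MAML (Model-Agnostic Meta-Learning) – Learns an initialization that can adapt to a new task with only a few gradient steps on minimal labeled data.

- TAPT (Task-Adaptive Pretraining) – Continues masked-language-model pretraining on unlabeled text from the target task before fine-tuning. Strictly a pretraining strategy rather than meta-learning, it is often combined with Few-Shot methods.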

This allows models to generalize across low-resource languages, technical domains, and niche industries.
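The prototypical-network idea fits in a few lines once token embeddings are available. In this sketch, tiny hand-made 3-dimensional vectors are assumed stand-ins for contextual embeddings (a real system would take them from an encoder such as BERT). Each entity type's prototype is the mean of its support embeddings, and a query token receives the label of the nearest prototype by squared Euclidean distance.

```python
from math import fsum

Vector = list[float]


def mean(vectors: list[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors: the class prototype."""
    return [fsum(col) / len(vectors) for col in zip(*vectors)]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors."""
    return fsum((x - y) ** 2 for x, y in zip(a, b))


def build_prototypes(support: dict[str, list[Vector]]) -> dict[str, Vector]:
    """One prototype per label, computed from its few support examples."""
    return {label: mean(vecs) for label, vecs in support.items()}


def classify(query: Vector, prototypes: dict[str, Vector]) -> str:
    """Assign the label whose prototype is closest to the query embedding."""
    return min(prototypes, key=lambda label: sq_dist(query, prototypes[label]))


# Toy "embeddings" (assumed): two support tokens per class, including O (non-entity).
support = {
    "DRUG": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "DISEASE": [[0.1, 0.9, 0.0], [0.2, 0.8, 0.1]],
    "O": [[0.0, 0.1, 0.9], [0.1, 0.0, 0.8]],
}
protos = build_prototypes(support)
print(classify([0.85, 0.15, 0.05], protos))  # DRUG
```

Giving the non-entity class O its own prototype matters in NER, since most tokens in a sentence are not entities.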

3. Adapter-Based Fine-Tuning for NER

Instead of retraining entire models, adapters enable efficient fine-tuning by:

- Adding lightweight layers to frozen transformers.

- Minimizing memory usage while adapting to new entity types.

- Performing domain adaptation without losing general knowledge.

For instance, a biomedical adapter can fine-tune a general NER model to recognize disease names, gene symbols, and drug compounds with just a few examples.
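A common adapter design is a small bottleneck: project the hidden vector down, apply a nonlinearity, project it back up, and add the result to the input. The pure-Python sketch below uses toy dimensions so it runs anywhere; real adapters are framework modules typically inserted after a transformer's attention and feed-forward sublayers. Initializing the up-projection to zero is one common choice (near-zero initialization is also used) and makes the adapter start as an identity map, so adding it leaves the frozen model's behavior unchanged until training begins.

```python
import random


def matvec(W, x):
    """Multiply matrix W (rows x cols) by vector x (length cols)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, a) for a in v]


class BottleneckAdapter:
    """Residual bottleneck adapter: h + W_up . relu(W_down . h + b_down) + b_up.

    Only these parameters would be trained; the surrounding transformer stays frozen.
    """

    def __init__(self, hidden: int, bottleneck: int, seed: int = 0):
        rng = random.Random(seed)
        self.W_down = [[rng.gauss(0, 0.02) for _ in range(hidden)] for _ in range(bottleneck)]
        self.b_down = [0.0] * bottleneck
        # Zero-initialized up-projection: the adapter starts as an identity map.
        self.W_up = [[0.0] * bottleneck for _ in range(hidden)]
        self.b_up = [0.0] * hidden

    def __call__(self, h):
        z = relu([a + b for a, b in zip(matvec(self.W_down, h), self.b_down)])
        delta = [a + b for a, b in zip(matvec(self.W_up, z), self.b_up)]
        return [a + d for a, d in zip(h, delta)]  # residual connection


def adapter_params(hidden: int, bottleneck: int) -> int:
    """Trainable parameters in one adapter: two weight matrices plus two bias vectors."""
    return 2 * hidden * bottleneck + hidden + bottleneck


adapter = BottleneckAdapter(hidden=4, bottleneck=2)
print(adapter([1.0, -2.0, 0.5, 3.0]))  # [1.0, -2.0, 0.5, 3.0]
# BERT-base-like hidden size (768) with a 64-dim bottleneck:
print(adapter_params(768, 64))  # 99136
```

The parameter count shows why adapters are cheap: roughly 99 thousand trainable weights per adapter at hidden size 768, compared with about 110 million parameters in BERT-base.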

4. Reinforcement Learning for Adaptive NER

Some research Few-Shot NER systems use Reinforcement Learning (RL) to:

- Iteratively refine predictions based on feedback.

- Optimize decision-making for ambiguous entities.

- Reduce annotation dependency by improving self-learning.

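For example, OpenAI fine-tuned ChatGPT with reinforcement learning from human feedback (RLHF). RLHF is a general training-time alignment step, not an NER-specific or inference-time one, but it improves how reliably the model follows instructions such as entity-extraction prompts.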

5. Benchmarking Zero-Shot & Few-Shot NER

Performance is evaluated using datasets like:

- CoNLL-2003 – Standard for news-based entity recognition.

- OntoNotes 5.0 – Covers multiple text genres (news, conversations, etc.).

- MIT Movie & Restaurant – For domain-specific NER.

- MedMentions – Biomedical entity recognition.

Metrics used:

- F1-score – The harmonic mean of precision and recall.

- Zero-Shot Transfer Accuracy – Evaluates generalization to unseen data.

- Few-Shot Adaptation Speed – Assesses how quickly a model learns from minimal examples.
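Entity-level F1, the usual headline metric on CoNLL-style benchmarks, counts a prediction as correct only if its span boundaries and its type both match a gold entity. The minimal sketch below assumes entities are represented as (start, end, type) tuples over token positions, with end exclusive; libraries such as seqeval compute the same kind of metric directly from BIO tag sequences.

```python
def entity_f1(gold: set[tuple[int, int, str]],
              pred: set[tuple[int, int, str]]) -> dict[str, float]:
    """Exact-match entity-level precision, recall, and F1."""
    tp = len(gold & pred)  # correct span AND correct type
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Tokens: Elon(0) Musk(1) leads(2) Tesla(3) and(4) SpaceX(5)
gold = {(0, 2, "PER"), (3, 4, "ORG"), (5, 6, "ORG")}
pred = {(0, 2, "PER"), (3, 4, "ORG"), (5, 6, "LOC")}  # SpaceX gets the wrong type
print(entity_f1(gold, pred))
```

With one of three entities mistyped, precision, recall, and F1 all come out to 2/3: the wrong type counts as both a false positive and a false negative.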

Reported results vary widely with the dataset, model, and evaluation setup. Commonly quoted figures are roughly 60-80% F1 for Zero-Shot models on general NER tasks, and up to 85-95% for Few-Shot approaches with minimal fine-tuning in favorable settings.

What’s Next for NER?

Advancements in prompt engineering, unsupervised learning, and multimodal AI are making Zero-Shot and Few-Shot NER even more powerful. Future directions include:

- Neural-symbolic hybrid models – Combining deep learning with rule-based systems.

- Self-supervised entity learning – Training models without human-labeled data.

- Multimodal NER – Extracting entities from text, images, and speech.

AI is moving closer to human-like entity recognition—without needing massive datasets.

Real-World Use Cases & Practical Implementation of Zero-Shot and Few-Shot NER

1. Enterprise Search & Document Automation

Many organizations handle millions of documents, from contracts to research papers. Traditional NER struggles with domain-specific jargon, but Zero-Shot and Few-Shot NER make it easier to:

- Extract contract clauses (e.g., non-compete, liability terms).

- Identify key stakeholders (e.g., companies, executives, investors).

- Tag relevant legal references (e.g., GDPR, SEC regulations).

🚀 Example: A legal tech company can use Few-Shot NER to analyze case law with just a handful of labeled samples, potentially reducing manual review time by as much as 80%.

2. Healthcare & Biomedical Research

Medical terminology evolves rapidly, so static NER models can fall out of date within months. Few-Shot NER helps:

- Detect new disease names (e.g., COVID-19 variants).

- Extract drug interactions from clinical notes.

- Identify patient symptoms for faster diagnosis.

🔬 Example: A pharmaceutical company can train a Few-Shot NER model on rare disease names with only a few dozen examples, improving medical literature search accuracy.

3. Financial Analysis & Market Intelligence

In finance, entities like company names, stock symbols, and economic indicators change frequently. Zero-Shot NER can:

- Track emerging market trends in news articles.

- Extract earnings figures and company performance data from reports.

- Detect fraudulent transactions by identifying unusual entity patterns.

💰 Example: A hedge fund can run Zero-Shot NER over SEC filings to track newly mentioned companies, risks, and regulations without retraining its AI models.

4. Cybersecurity & Threat Detection

New malware names, hacking groups, and exploit techniques emerge daily. Few-Shot NER improves threat intelligence by:

- Identifying new cyber threats in dark web forums.

- Extracting attack vectors from security reports.

- Recognizing suspicious IP addresses and domains.

🛡️ Example: A cybersecurity firm can fine-tune a Few-Shot model with just 10 samples of a new phishing campaign to automate threat detection.

5. Customer Support & Sentiment Analysis

Companies analyze millions of customer queries for better service. Zero-Shot NER helps:

- Recognize product names and complaint types in support tickets.

- Extract key themes from online reviews (e.g., “battery life issues”).

- Detect emerging brand sentiment in social media.

💬 Example: An e-commerce platform can use Zero-Shot NER to categorize customer complaints without pre-labeled training data, reducing response time.

How to Implement Zero-Shot & Few-Shot NER in Your Projects

1. Using Hugging Face Transformers

Popular libraries like Hugging Face’s transformers make it easy to apply Zero-Shot and Few-Shot NER.

Zero-Shot NER Example (Using pipeline)

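```python
from transformers import pipeline

# NLI-based zero-shot classifier: it scores labels for an input,
# but it does not locate entity spans on its own.
classifier = pipeline("zero-shot-classification", model="facebook/bart-large-mnli")

text = "Elon Musk is the CEO of Tesla, which is headquartered in Palo Alto."
labels = ["person", "company", "location"]

# Candidate spans are hard-coded here; in practice they would come from
# a noun-phrase chunker or a generic span detector.
candidates = ["Elon Musk", "Tesla", "Palo Alto"]

for span in candidates:
    # Score each label for this span in the context of the full sentence,
    # e.g. the hypothesis "Tesla is a company."
    result = classifier(text, candidate_labels=labels,
                        hypothesis_template=f"{span} is a {{}}.")
    print(span, "->", result["labels"][0], round(result["scores"][0], 3))
```

💡 This types entities without task-specific training. Note that the zero-shot-classification pipeline only scores labels for an input; it does not find entity spans, so the candidates must come from a separate step.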

2. Fine-Tuning a Few-Shot NER Model

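With a few labeled examples, you can continue fine-tuning a BERT model that was already trained for NER. In this sketch, `train_dataset` is a placeholder you must supply, and the epoch count is an illustrative choice:

```python
from transformers import (AutoModelForTokenClassification, AutoTokenizer,
                          DataCollatorForTokenClassification, Trainer, TrainingArguments)

checkpoint = "dbmdz/bert-large-cased-finetuned-conll03-english"
tokenizer = AutoTokenizer.from_pretrained(checkpoint)
model = AutoModelForTokenClassification.from_pretrained(checkpoint)

# Placeholder: a few tokenized in-domain examples whose "labels" follow
# model.config.label2id, with -100 on sub-tokens the loss should ignore.
train_dataset = ...
args = TrainingArguments(output_dir="./ner_model", num_train_epochs=10)
trainer = Trainer(model=model, args=args, train_dataset=train_dataset,
                  data_collator=DataCollatorForTokenClassification(tokenizer))
trainer.train()
```

🔧 Even a small labeled set can noticeably improve accuracy on in-domain text, though with so little data it is worth watching for overfitting.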
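Fine-tuning a token-classification model on a few examples requires aligning word-level labels with the sub-word tokens the tokenizer produces. One common convention labels only the first sub-token of each word and marks the remaining sub-tokens, plus special tokens, with -100, the index PyTorch's cross-entropy loss ignores by default. The sketch below implements that rule in plain Python, taking a `word_ids` list like the one a Hugging Face fast tokenizer's `word_ids()` method returns; the token split shown is a hypothetical illustration, not actual BERT output.

```python
from typing import Optional

IGNORE = -100  # ignored by PyTorch's CrossEntropyLoss (default ignore_index)


def align_labels(word_ids: list[Optional[int]], word_labels: list[int]) -> list[int]:
    """Map word-level label ids onto sub-word tokens.

    word_ids[i] is the index of the word that token i came from, or None for
    special tokens such as [CLS]/[SEP]. Only the first sub-token of each word
    keeps the word's label; continuation sub-tokens and specials get IGNORE.
    """
    aligned, previous = [], None
    for wid in word_ids:
        if wid is None or wid == previous:
            aligned.append(IGNORE)
        else:
            aligned.append(word_labels[wid])
        previous = wid
    return aligned


# Hypothetical split: "Take ibuprofen daily" -> [CLS] Take ib ##up ##rofen daily [SEP]
label_names = ["O", "B-DRUG", "I-DRUG"]
word_labels = [0, 1, 0]  # Take=O, ibuprofen=B-DRUG, daily=O
word_ids = [None, 0, 1, 1, 1, 2, None]
print(align_labels(word_ids, word_labels))  # [-100, 0, 1, -100, -100, 0, -100]
```

An alternative convention labels every sub-token (turning B- into I- on continuations); which one works better is an empirical question for a given dataset.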

3. Prompt Engineering for Zero-Shot NER

Large generative models (such as GPT-4, or instruction-tuned T5 variants) can extract entities using well-structured prompts:

```text
Extract all company names from the following text: 'Amazon and Google reported record earnings last quarter.'
```

📌 Carefully crafted prompts improve Zero-Shot performance!

Future of Zero-Shot and Few-Shot NER

1. Multimodal Entity Recognition

Future AI will extract entities not just from text but also images, audio, and videos (e.g., recognizing company logos in news broadcasts).

2. Self-Supervised NER

AI will learn new entity types automatically—without needing human-labeled data—by leveraging unsupervised learning techniques.

3. Federated Learning for Private NER

Privacy-preserving NER will allow models to learn from sensitive medical or legal data without exposing private information.

🚀 Bottom Line: Zero-Shot and Few-Shot NER are revolutionizing AI-driven information extraction, making entity recognition faster, cheaper, and more adaptable than ever.


FAQs

How accurate is Zero-Shot NER compared to traditional models?

Zero-Shot NER performs reasonably well on general entity types but struggles with domain-specific terms. Fully supervised models often reach 90-95% F1 on standard benchmarks, while Zero-Shot approaches are commonly reported in the 60-80% range, depending on:

- Model quality (e.g., GPT-4 vs. BART).

- Task complexity (simple entity types vs. technical jargon).

- Prompt clarity (well-structured instructions improve results).

📌 Example: A Zero-Shot model may easily recognize “Apple” as a company but could misclassify less common corporate names like “Xilinx.”

Can Few-Shot NER work with just 5-10 labeled examples?

Often, yes, although results vary with the task. Few-Shot models leverage meta-learning and pretrained embeddings, allowing them to generalize from a handful of examples. Key factors are:

- Diverse examples – Cover different entity structures.

- Clear labeling – Ambiguous examples can mislead the model.

- Context-awareness – Training on full sentences improves entity detection.

📌 Example: Given just five drug names, a Few-Shot NER model may extrapolate patterns to detect new pharmaceutical entities in medical reports.

What’s the best model for Zero-Shot NER?

The best model depends on use case and language support. Some top choices include:

- GPT-4 – Strong generalization but expensive API-based access.

- T5 (Text-to-Text Transfer Transformer) – Effective for prompt-based NER.

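- BART (Bidirectional and Auto-Regressive Transformers) – Its MNLI-fine-tuned variant (facebook/bart-large-mnli) works well for zero-shot classification.

- RoBERTa & XLM-R – Strong encoders for English (RoBERTa) and multilingual (XLM-R) NER, usually fine-tuned or used for cross-lingual transfer rather than prompted directly.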

📌 Example: A multinational company may prefer XLM-R to detect entities across multiple languages, while a chatbot might rely on GPT-4 for flexibility.

Does Zero-Shot NER work for specialized fields like law or medicine?

Zero-Shot models struggle with highly technical fields unless they have domain-specific pretraining. Instead, Few-Shot Learning is more effective for:

- Legal contracts – Recognizing case law references and clauses.

- Medical research – Extracting disease names and treatments.

- Cybersecurity reports – Identifying attack types and threat actors.

📌 Example: A Zero-Shot model might misinterpret “Habeas Corpus” as a person’s name instead of a legal term, whereas Few-Shot fine-tuning can correct this.

How can I improve Zero-Shot NER with better prompts?

Zero-Shot NER heavily relies on prompt engineering. To improve results:

- Be explicit – “Find company names” is better than “Find entities.”

- Use examples – “Find companies like Tesla, Microsoft, and IBM.”

- Break down tasks – Separate different entity types instead of asking for all at once.

📌 Example: Instead of asking, “Find people and companies in this text,” use separate prompts:

✅ Prompt 1: “Identify all person names.”

✅ Prompt 2: “Identify all company names.”

This reduces confusion and improves accuracy.
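The three tips above combine naturally: one explicit prompt per entity type, optionally seeded with a few example names. A minimal sketch of such a prompt builder follows; the prompt wording and example entities are illustrative assumptions. Strictly speaking, including example names turns the prompt into few-shot (in-context) prompting.

```python
def build_prompts(text: str, examples: dict[str, list[str]]) -> dict[str, str]:
    """Return one explicit extraction prompt per entity type."""
    prompts = {}
    for entity_type, names in examples.items():
        # Optional hint listing a few known entities of this type.
        hint = f" (for example: {', '.join(names)})" if names else ""
        prompts[entity_type] = (
            f"Identify all {entity_type} names{hint} in the text below. "
            "List each one on its own line, or write NONE.\n\n"
            f"Text: {text}"
        )
    return prompts


text = "Elon Musk is the CEO of Tesla, which is headquartered in Palo Alto."
prompts = build_prompts(text, {
    "person": [],                    # no hint: a purely zero-shot prompt
    "company": ["Microsoft", "IBM"],  # hint: a few in-context examples
})
for entity_type, prompt in prompts.items():
    print(f"--- {entity_type} ---\n{prompt}\n")
```

Each prompt is then sent separately, and the per-type answers are merged afterward.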

Can I fine-tune a model on Few-Shot data without GPU access?

Yes, but performance depends on model size and dataset complexity. Options include:

- Lightweight models – Use DistilBERT instead of full BERT.

- Free cloud notebooks – Google Colab offers free (usage-limited) GPU runtimes, and Hugging Face publishes example notebooks that run there.

- Adapter-based tuning – Instead of retraining the whole model, fine-tune small task-specific layers.

📌 Example: A researcher without GPU access can fine-tune DistilBERT on 10-20 entity examples using Google Colab for free.

What are some real-world failures of Zero-Shot NER?

Zero-Shot models can fail when dealing with:

- Ambiguous names – “Amazon” (company vs. rainforest).

- Uncommon entities – New startups, emerging slang, or niche brands.

- Context-dependent names – “Paris” (city vs. person’s name).

📌 Example: On sports news, a Zero-Shot model might misclassify “Ronaldo” as a company instead of a person.

How can businesses adopt Few-Shot NER without a full AI team?

Many no-code or low-code platforms now support Few-Shot learning, including:

- Hugging Face AutoTrain (formerly AutoNLP) – Fine-tune models with minimal coding.

- Google AutoML NLP – Train custom NER models with a GUI.

- OpenAI’s API – Use prompt-based NER without training data.

📌 Example: A marketing firm can use OpenAI’s API to analyze customer reviews without hiring data scientists.

Can Zero-Shot NER be used for real-time applications?

Zero-Shot NER is feasible for real-time entity recognition, but performance depends on:

- Model size – Large models such as GPT-4 or the bigger T5 variants are powerful but can be too slow for strict real-time use.

- Latency requirements – API-based models introduce delays.

- Optimized execution – Smaller models like DistilBERT can run faster on edge devices.

📌 Example: A customer support chatbot can use Zero-Shot NER to detect product names instantly, but for financial fraud detection, a fine-tuned Few-Shot model may be faster.

How does Few-Shot NER compare to weakly supervised learning?

Few-Shot NER requires a small set of high-quality labeled examples, whereas weakly supervised learning leverages:

- Distant supervision – Uses existing databases (e.g., Wikipedia) to auto-label data.

- Noisy labeling techniques – Uses heuristics to generate training data.

- Semi-supervised learning – Trains on both labeled and unlabeled data.

📌 Example: Instead of hand-labeling 50 medical papers, weakly supervised learning might auto-label terms using a medical dictionary, at the cost of noisy labels. Few-Shot learning instead relies on a few real, carefully labeled examples, which can make it more accurate on the target entities.
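Dictionary-based distant supervision can be sketched as a greedy longest-match over tokens that emits BIO tags. The tiny medical lexicon below is a hypothetical stand-in for a real resource. Real pipelines add normalization, fuzzy matching, and conflict handling, and the resulting labels are noisy by design: a dictionary misses unseen terms and cannot resolve ambiguous ones.

```python
def dictionary_bio(tokens: list[str], lexicon: dict[tuple[str, ...], str],
                   max_len: int = 4) -> list[str]:
    """Weakly label tokens with BIO tags via greedy longest dictionary match."""
    lowered = [t.lower() for t in tokens]
    tags = ["O"] * len(tokens)
    i = 0
    while i < len(tokens):
        # Try the longest candidate span first, shrinking until a match is found.
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            label = lexicon.get(tuple(lowered[i:i + n]))
            if label:
                tags[i] = f"B-{label}"
                for j in range(i + 1, i + n):
                    tags[j] = f"I-{label}"
                i += n
                break
        else:
            i += 1  # no dictionary entry starts at this token
    return tags


# Hypothetical lexicon: lower-cased token tuples -> entity type.
lexicon = {
    ("aspirin",): "DRUG",
    ("type", "2", "diabetes"): "DISEASE",
    ("diabetes",): "DISEASE",
}
tokens = "Aspirin use in type 2 diabetes patients".split()
print(list(zip(tokens, dictionary_bio(tokens, lexicon))))
```

Longest-match-first ensures “type 2 diabetes” is tagged as one DISEASE span rather than leaving “type 2” untagged and labeling only “diabetes”.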

Is Zero-Shot NER safe for handling sensitive data like medical records?

Zero-Shot models are often hosted on external APIs (e.g., OpenAI, Google), raising privacy concerns. Solutions include:

- On-premise deployment – Running models like BERT locally to avoid data leakage.

- Federated learning – Training NER models across devices without sharing raw data.

- Differential privacy – Ensuring that outputs don’t expose sensitive details.

📌 Example: A hospital using NER to extract patient information from medical records should be cautious with API-based Zero-Shot models, since sending records to a third party raises HIPAA compliance concerns.

Can Few-Shot NER be used for low-resource languages?

Yes! Few-Shot learning is a game-changer for low-resource languages by:

- Leveraging multilingual models like XLM-R and mBERT.

- Training on a small number of cross-lingual examples.

- Using transfer learning from high-resource languages.

📌 Example: Instead of needing 10,000 labeled sentences in Swahili, a multilingual model fine-tuned on English NER data might adapt using just 50 well-annotated Swahili examples.

What’s the best way to evaluate a Zero-Shot or Few-Shot NER model?

Key evaluation metrics include:

- F1-score – Balances precision and recall.

- Entity overlap accuracy – Measures how well extracted entities match ground truth.

- Zero-shot transferability – Assesses performance across unseen entity types.

📌 Example: If a model correctly tags “Tesla” as a company but misses “SpaceX,” precision is 100% but recall is only 50%, giving an F1-score of about 0.67. A high F1-score requires both precision and recall to be high.

What are the main failure cases for Few-Shot NER?

Even with Few-Shot learning, models can fail due to:

- Insufficient examples – 5-10 samples may not capture entity variability.

- Bias in training data – A Few-Shot model trained on U.S. companies may struggle with Asian business names.

- Lack of context – Models may misinterpret abbreviations (e.g., “WHO” as an entity vs. a question word).

📌 Example: A legal AI trained on U.S. case law might fail to detect European legal entities unless given a few relevant examples.

Can Zero-Shot and Few-Shot NER handle abbreviations and acronyms?

Zero-Shot NER struggles with abbreviations unless they are widely known (e.g., NASA, FBI). Few-Shot learning improves this by:

- Providing contextual examples – Showing that “WHO” means World Health Organization, not a pronoun.

- Using domain-specific embeddings – Training on medical acronyms (e.g., “BP” = blood pressure).

- Leveraging external knowledge – Linking to entity databases like Wikidata.

📌 Example: When extracting medical terms, a Zero-Shot model may not know which expansion of “ALS” is meant (amyotrophic lateral sclerosis vs. advanced life support). Few-Shot examples in context reduce this ambiguity.

What industries benefit most from Zero-Shot and Few-Shot NER?

Industries that need rapid entity extraction without large labeled datasets include:

- LegalTech – Extracting case law citations, contracts, and legal clauses.

- Healthcare – Identifying drugs, diseases, and patient conditions.

- Finance – Recognizing companies, stock symbols, and risk factors.

- Cybersecurity – Detecting new attack vectors, malware, and hacker groups.

📌 Example: A law firm can use Few-Shot NER to tag contract clauses without creating a massive annotated dataset.

How can Zero-Shot NER improve over time?

While Zero-Shot models don’t train on new data, they improve through:

- Better prompts – Optimizing instructions for clarity.

- Continual learning – Updating the base language model with newer text.

- Hybrid approaches – Combining Zero-Shot predictions with rule-based refinements.

📌 Example: A news monitoring AI can use Zero-Shot NER for emerging company names but periodically fine-tune on fresh financial reports for better accuracy.
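A hybrid setup can be as simple as post-processing the model's output with deterministic rules. In the sketch below, model predictions are (span, label) pairs from some zero-shot extractor (hypothetical here), a curated override table fixes known mistakes, and a regex adds cashtag-style ticker symbols such as `$TSLA` that the model did not return. The rules, names, and example sentence are illustrative assumptions.

```python
import re

# Cashtag-style ticker symbols such as $TSLA (an illustrative rule).
TICKER = re.compile(r"\$[A-Z]{1,5}\b")


def refine(text, model_spans, overrides):
    """Combine model-predicted (span, label) pairs with rule-based fixes.

    - overrides: curated span -> label corrections that take precedence
    - TICKER regex: adds ticker symbols the model did not return
    """
    refined = [(span, overrides.get(span, label)) for span, label in model_spans]
    found = {span for span, _ in refined}
    for match in TICKER.finditer(text):
        if match.group() not in found:
            refined.append((match.group(), "TICKER"))
            found.add(match.group())
    return refined


text = "Analysts compared Acme Robotics with $TSLA in Friday's note."
# Hypothetical zero-shot output with one mistake ("Acme Robotics" typed as PERSON).
model_spans = [("Acme Robotics", "PERSON")]
overrides = {"Acme Robotics": "COMPANY"}
print(refine(text, model_spans, overrides))
# [('Acme Robotics', 'COMPANY'), ('$TSLA', 'TICKER')]
```

Rules like these are cheap to update when a new error pattern appears, which complements periodic fine-tuning.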

Are there open-source tools for testing Zero-Shot and Few-Shot NER?

Yes! Some top tools include:

- Hugging Face Transformers – Pretrained models for NER.

- spaCy – Production NER pipelines that can be trained on small custom datasets.

- AdapterHub – Adapter-based Few-Shot fine-tuning for transformers.

- Snorkel – Weak supervision for semi-labeled NER.

- OpenAI API – GPT-based Zero-Shot entity extraction (a commercial service rather than open source, but useful for comparison).

📌 Example: A startup can use Hugging Face’s pipeline to prototype Zero-Shot entity typing without writing custom machine learning code.

Resources

Official Model & Framework Documentation

- Hugging Face Transformers – Pretrained NER models & zero-shot pipelines.

- spaCy NER – Guide to Named Entity Recognition in spaCy.

- OpenAI GPT API – Prompt-based Zero-Shot entity extraction.

- Google AutoML Natural Language – No-code entity recognition.

Research Papers & Academic References

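- Zero-Shot Relation Extraction via Reading Comprehension (Levy et al., 2017) – Early research on zero-shot information extraction, framing relation extraction as question answering.

- Few-Shot Named Entity Recognition: A Comprehensive Study (Huang et al., 2020) – Exploring Few-Shot adaptation in NER tasks, including prototype-based methods.

- Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer (Raffel et al., 2020) – The T5 paper, underpinning T5’s role in Zero-Shot NLP tasks.

- BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension (Lewis et al., 2020) – The base model behind facebook/bart-large-mnli, widely used for Zero-Shot classification.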

Practical Tutorials & GitHub Repositories

- Hugging Face NER Fine-Tuning – Hands-on guide for Few-Shot learning.

- Facebook’s Zero-Shot NLI Model (BART) – Used for Zero-Shot classification & entity recognition.

- Few-Shot Learning with Prototypical Networks – Meta-learning techniques for NER.

- AdapterHub for Few-Shot Fine-Tuning – Lightweight domain adaptation for transformers.

Community Forums & Discussions

- Hugging Face Forum – Discussions on Zero-Shot & Few-Shot NLP.

- r/MachineLearning (Reddit) – AI research discussions.

- Kaggle NLP Community – NER projects and notebooks.

- Stack Overflow – NLP Tag – Troubleshooting NER implementation issues.

Benchmark Datasets for NER

- CoNLL-2003 – Standard dataset for person, location, and organization recognition.

- OntoNotes 5.0 – Multi-domain dataset for entity recognition.

- MIT Movie & Restaurant NER – Domain-specific datasets for few-shot testing.

- MedMentions – Large biomedical NER dataset.