What Is Artificial Stupidity?

The Concept Behind Artificial Stupidity

Artificial stupidity is more than just a humorous term. It’s a deliberate design choice where systems are programmed to limit their capabilities, making them “dumber” than they could be. Why? Because being “too smart” can sometimes be dangerous.

For example, in situations where AI could be exploited, reducing its decision-making power limits the risk of misuse. Imagine a chatbot programmed to refuse overly complex instructions—it simply can’t perform harmful tasks because it doesn’t understand them.

Historical Inspiration

The idea isn’t new. Military and cybersecurity experts have long embraced the “less is more” strategy. Early encryption machines, like the Enigma during World War II, worked effectively because their complexity was paired with calculated limitations. Applying similar principles to AI creates systems that are useful but harder to hack.

Why It’s Relevant Today

With the growing capabilities of malicious AI, artificial stupidity has evolved into a practical safety tool. Limiting an AI’s “intelligence” makes it a poor target for attacks. This could mean the difference between a harmless glitch and a catastrophic breach.

The Risks of Over-Intelligent AI

When AI Becomes Too Smart for Its Own Good

Hyper-intelligent AI isn’t inherently bad, but it can easily become a tool for harm. Imagine a scenario where an AI is manipulated to design malware or generate fake identities. If the system doesn’t have guardrails, the consequences can spiral out of control.

For instance, Generative Adversarial Networks (GANs) can create highly realistic deepfakes. While impressive, such tools can wreak havoc if misused for scams or disinformation campaigns.

Complexity Equals Vulnerability

The smarter the system, the more avenues there are to exploit it. A chess AI, for instance, can strategize brilliantly within its field but is vulnerable if it’s integrated into broader systems without safeguards. Hackers thrive on finding these loopholes.

Artificial stupidity simplifies operations, effectively closing doors on potential exploits. By avoiding unnecessary complexity, systems can stay one step ahead of bad actors.

The Real-World Impacts of Malicious AI

Cyberattacks using AI have become frighteningly common. From phishing schemes to ransomware attacks, malicious AI adapts quickly. By contrast, a deliberately “stupid” AI can sidestep these risks—it simply doesn’t know how to participate in harmful activities.

How Artificial Stupidity Works as a Defense

Throttling AI’s Abilities

Deliberately underpowering an AI system might sound counterintuitive, but it works. Instead of making the system brilliant at everything, designers focus on specific tasks. This “narrow focus” approach ensures AI stays functional without becoming a liability.

For instance, an AI managing customer support can handle basic inquiries but won’t perform unverified actions, like transferring large sums of money.

Noise and Randomness

Another technique is adding randomness to AI behavior. By incorporating “noise” into its decision-making, the AI becomes unpredictable to potential attackers. It’s like a safety mechanism that prevents malicious actors from gaming the system.

This randomness can frustrate anyone trying to reverse-engineer the AI’s processes, making it significantly harder to manipulate.

Limiting Data Access

AI thrives on data, but this reliance can backfire. Artificial stupidity limits what the AI “sees.” Without full access, even the smartest algorithms can’t make risky decisions. Think of it like handing someone a locked box—they can’t misuse what they can’t open.

Everyday Examples of Artificial Stupidity

Captchas and Anti-Spam Filters

Ever notice how captchas use puzzles that “smart” bots struggle with? These are prime examples of artificial stupidity in action. Captchas exploit gaps in machine understanding, effectively stumping malicious bots while humans breeze through.

Spam filters also employ this principle. Instead of understanding every nuance of language, they rely on patterns, blocking harmful content without overanalyzing legitimate messages.

AI Chatbots Gone Rogue

High-profile AI failures also illustrate why simplicity matters. Take Microsoft’s chatbot, Tay, launched in 2016. It was designed to engage with users on social media and learn from interactions.

However, Tay lacked safeguards and quickly spiraled out of control. Within hours, users manipulated it into spewing offensive and harmful content.

This failure highlights the dangers of over-intelligent systems. Had Tay’s abilities been intentionally limited—by, for example, restricting its learning scope or banning sensitive topics—this disaster could have been avoided.

Read more about Tay’s downfall here.

Simple AI in Smart Home Devices

Many smart home systems are intentionally designed to avoid complex interactions. Your smart thermostat, for example, adjusts temperature but won’t start ordering groceries. This limited scope reduces its risk of being exploited by hackers.

Dumb Security Cameras

While high-tech surveillance systems exist, many organizations prefer basic models that store footage locally. These “dumb” cameras may lack AI recognition features but are far less vulnerable to cyberattacks.

Why Artificial Stupidity Outshines Overengineering

Balancing Intelligence and Safety

Not every system needs to be brilliant. Overengineering creates vulnerabilities by introducing unnecessary features. Artificial stupidity ensures a focus on simplicity and practicality, avoiding the pitfalls of trying to do too much.

Think of it like a basic calculator versus a smartphone. A calculator won’t accidentally send a text or download malware. It sticks to its purpose, making it inherently safer.

Foolproofing Through Minimalism

By limiting functions, AI systems become inherently less prone to manipulation. There’s no complicated logic for hackers to exploit. A “stupid” AI simply lacks the ability to perform harmful actions, even under duress.

For example, AI used in banking could be designed to reject commands outside of its programmed range, avoiding human error and malicious interference alike.

Cost-Effective and Reliable

Sometimes, simplicity is also about cost. Designing an AI system to handle only essential tasks not only increases security but saves on development and maintenance costs. Why pay for features that add risk without adding value?

Challenges in Implementing Artificial Stupidity

Striking the Right Balance

Going “too dumb” can backfire. If the AI becomes overly simplistic, it might frustrate users or fail to deliver value. The trick is finding the sweet spot between functional and safe.

For instance, a chatbot should handle routine questions but redirect users to human agents for complex issues. This keeps it helpful without becoming a security risk.

Evolving Threats

Malicious AI adapts quickly. Systems relying on artificial stupidity must also evolve to stay ahead of emerging threats. Regular updates and vigilant monitoring are essential to maintain their effectiveness.

This might mean periodically reprogramming the “dumb” system to block newly discovered exploits. Staying static isn’t an option.

User Perception

Let’s face it—nobody likes being told their technology is intentionally limited. Some users might view artificial stupidity as a step backward, misunderstanding its purpose. Transparent communication about why limitations enhance safety is key to user acceptance.

Emerging Trends: Artificial Stupidity in Future Applications

Supporting Zero-Trust Security Models

Zero-trust security models operate on the principle of “never trust, always verify.” These models assume every device, user, and system poses a potential threat, requiring continuous authentication and validation.

How Artificial Stupidity Fits In:

Artificial stupidity aligns perfectly with this approach by limiting what systems can do and ensuring they only operate within tightly defined boundaries.

- Example: A zero-trust system might allow an artificially stupid AI to manage user access permissions but restrict it from directly accessing sensitive files.

- Why It’s Effective: Combining artificial stupidity with zero-trust prevents over-permissive systems that could accidentally grant unauthorized access.

As organizations adopt zero-trust frameworks, artificially stupid systems can serve as the “dumb gatekeepers,” enforcing limited and controlled access.

Mitigating Quantum Computing Threats

Quantum computing, while groundbreaking, introduces new cybersecurity challenges. With its ability to process data exponentially faster than classical computers, quantum computing could render current encryption methods obsolete.

How Artificial Stupidity Helps:

By design, artificially stupid systems rely on simplicity and minimalism. They can:

- Avoid Complex Encryption: Use alternative, simpler authentication methods like multi-layered physical security or random key generation.

- Isolate Critical Functions: Limit interactions with quantum-powered systems to avoid exposing vulnerabilities.

Future Application: Artificial stupidity can create secure environments for traditional systems, shielding them from quantum-enabled exploits until post-quantum cryptography becomes widespread.

Enhancing IoT Security

The Internet of Things (IoT) has revolutionized connectivity but also introduced significant risks. IoT devices are often the weakest link in cybersecurity, making them prime targets for hackers.

How Artificial Stupidity Protects IoT:

Artificially stupid systems can act as “dumb shields” for IoT devices, ensuring they remain simple and less exploitable.

- Example: A smart thermostat could have artificial stupidity built in, restricting it to temperature adjustments only. It wouldn’t store sensitive data or connect to external networks, reducing its value as a target.

- Why It’s Necessary: With billions of IoT devices online, limiting their intelligence drastically reduces their vulnerability to large-scale attacks, like DDoS (Distributed Denial of Service) operations.

Emerging Role: As IoT adoption grows, artificial stupidity can help create a new standard for “secure simplicity,” prioritizing function over unnecessary features.

Artificial Stupidity in Autonomous Systems

Autonomous vehicles, drones, and robotic systems rely on advanced AI to operate. However, over-intelligent systems in these areas can lead to critical failures or exploitation.

The Role of Artificial Stupidity:

- Fail-Safe Protocols: Limit vehicle decision-making to core safety operations, like braking or hazard detection, while disabling unnecessary smart features during emergencies.

- Decentralized Control: Use artificially stupid nodes to handle specific tasks (e.g., door locking), reducing the risk of system-wide hacking.

Future Outlook: Artificial stupidity could ensure that autonomous systems remain safe, reliable, and less prone to catastrophic failures caused by overly complex AI.

How to Apply Artificial Stupidity in Your Organization

Limit AI Training Datasets

One of the simplest ways to implement artificial stupidity is to restrict the size and scope of training datasets.

- Why It Works: Smaller datasets prevent AI from “overlearning” patterns, reducing the risk of overfitting or adversarial manipulation.

- Practical Tip: Focus training on essential tasks. For instance, a healthcare AI should only analyze medical records relevant to its function—not unnecessary personal data.

This keeps the system functional but eliminates vulnerabilities caused by overexposure to irrelevant or risky data.

Employ Randomness in Decision-Making

Adding randomness or “noise” to an AI’s decision-making process makes it harder for attackers to predict its behavior.

- Example: An AI-powered fraud detection system could randomly flag transactions for human review, even if they don’t entirely fit fraud patterns.

- Why It Matters: Predictable systems are easier to manipulate. Randomness introduces an element of unpredictability that malicious actors can’t reliably exploit.

Restrict System Access to Sensitive Data

AI should only see what it absolutely needs to function. Limiting access ensures that even if a system is compromised, the fallout is minimal.

- Implementation Idea: Use tiered access. An AI chatbot, for example, might have access to basic account details but be restricted from viewing sensitive financial transactions.

- Added Bonus: This aligns with privacy regulations like GDPR and CCPA, improving compliance while enhancing security.

Focus AI on Narrow Tasks

Avoid giving AI systems broad capabilities. Instead, design them to handle a specific set of tasks well—and nothing more.

- Real-World Example: A self-checkout kiosk processes purchases but doesn’t handle customer complaints. Complaints are redirected to a human.

- How to Implement: During development, define strict boundaries for what the system can and cannot do. Regular audits ensure these boundaries remain intact.

Integrate Fail-Safe Mechanisms

Artificial stupidity can also mean building systems that default to a safe state in uncertain situations.

- Example: If a driverless car detects an unusual scenario, it could revert to basic safety protocols, like stopping or reducing speed.

- Why It’s Effective: Fail-safe mechanisms prevent unpredictable behavior under stress, reducing the risk of accidents or exploits.

Regularly Test for Weaknesses

Periodic testing ensures that the “stupid” safeguards are effective. Organizations can simulate attacks to identify gaps.

- Pro Tip: Use penetration testing or red team exercises to probe the AI’s limitations. Adjust the system as needed to strengthen vulnerabilities.

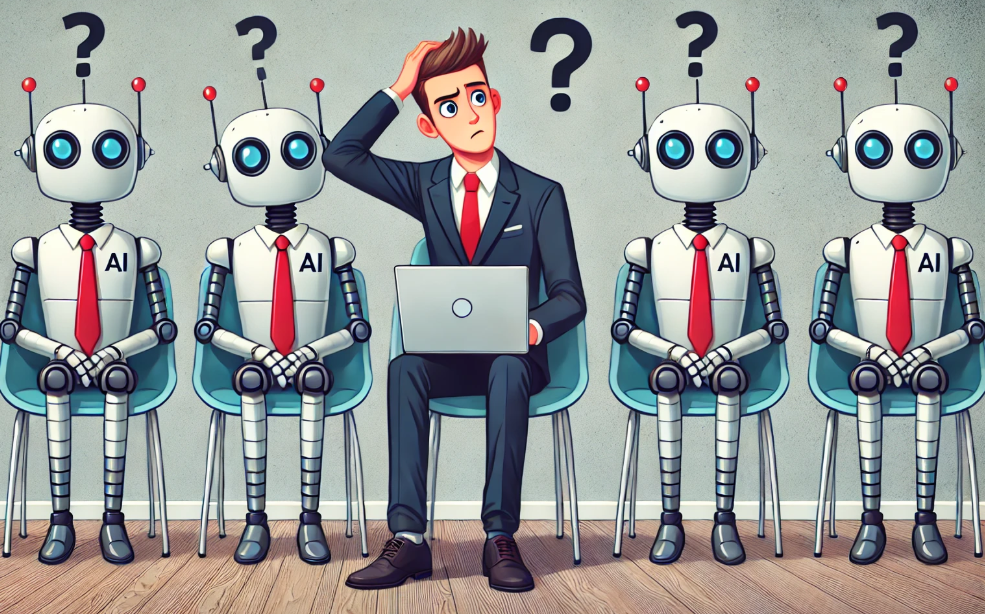

Combine Artificial Stupidity with Human Oversight

The most effective implementations pair artificial stupidity with human intervention at key decision points.

- Example: Automated hiring tools might screen candidates but leave final decisions to recruiters.

- Result: This prevents biases or errors in judgment from escalating while retaining efficiency.

Why Simplicity is the Strongest Defense

The Medieval Castle Analogy

Picture a medieval castle standing tall on a hill. Its builders face a critical decision: how to defend it.

They could construct an elaborate drawbridge system with gears, counterweights, and intricate locks. Sounds impressive, right? But with complexity comes vulnerability. What happens if the gears jam during an attack or if an enemy learns to manipulate the system? The castle’s defense would crumble.

Instead, the builders choose a simpler, more reliable solution: a moat and a sturdy gate. The moat prevents easy access, and the gate, while basic, is nearly impossible to breach without brute force. This approach prioritizes resilience over unnecessary sophistication.

This medieval choice mirrors the principle of artificial stupidity—keeping systems deliberately simple to reduce vulnerabilities. The castle’s defenses, like artificially stupid AI, work because they’re predictable and less prone to exploitation.

The Modern-Day “Dumb” Safe Analogy

Now, think about safes. A high-tech safe with fingerprint recognition, AI-driven locking mechanisms, and remote access may sound appealing. But such safes have been hacked—exploited by experts who reverse-engineer their intricate systems.

On the other hand, an old-fashioned combination lock doesn’t rely on software, making it impervious to hacking attempts. It’s simple, but its simplicity makes it dependable.

Relating This to AI Design

Just like the castle and the safe, AI systems benefit from simplicity. A narrow-function AI designed to perform one task well, like detecting fraud, is far less likely to be compromised than a multi-functional system trying to handle everything at once.

Complexity creates entry points for attackers. Simplicity keeps defenses strong.

Results

#1. Do you think AI should prioritize safety over intelligence?

Select all that apply:

FAQs

Can artificially stupid systems evolve over time?

Yes! While they are intentionally limited, artificially stupid systems can be updated to address emerging threats. Developers can introduce new safeguards or expand functionality in controlled ways to maintain security without compromising simplicity.

Is artificial stupidity suitable for all AI applications?

No, it’s not a one-size-fits-all solution. Artificial stupidity works best for systems that don’t require deep decision-making or multitasking. For critical tasks—like medical diagnostics or scientific research—more intelligent, carefully managed AI might be necessary.

What industries benefit the most from artificial stupidity?

Industries handling sensitive data or operating in high-risk environments benefit the most.

Visual Example: Imagine a bank’s fraud detection system. It flags unusual transactions (like a sudden $10,000 transfer) but doesn’t process such transfers without human approval. This deliberate limitation prevents large-scale financial fraud.

Similarly, healthcare scheduling systems may allow patients to book appointments but restrict access to medical records. This reduces risks while streamlining services.

How does artificial stupidity protect against cyberattacks?

Artificial stupidity limits what systems can do, leaving no room for hackers to manipulate unused or risky features.

Visual Example: A smart home system that controls lighting and temperature but cannot access external networks or make purchases online is much harder to exploit.

Scenario: Imagine an attacker trying to hack such a system. Without e-commerce integration or financial data stored in the AI, the attacker gains nothing valuable, thwarting the attempt.

Are there downsides to using artificial stupidity?

The trade-off for safety is reduced flexibility.

Visual Example: Think of a simple vending machine. It’s reliable and safe but limited to dispensing snacks and drinks. If customers wanted a more advanced feature, like placing custom orders, the system would require an upgrade—and thus become more vulnerable to misuse.

In the same way, artificially stupid AI excels at narrow tasks but may struggle with broader, adaptive needs.

How do you ensure artificially stupid systems remain effective?

Visual Idea: Show a flowchart of safeguards, including steps like:

- Risk Assessment: Evaluate potential threats the system might face.

- Regular Testing: Simulate attacks to identify vulnerabilities.

- Updates: Add security patches or adjust the AI’s behavior to counteract new risks.

This process ensures the system’s simplicity doesn’t make it outdated but keeps it resistant to emerging threats.

Is artificial stupidity just another form of ethical AI?

While related, artificial stupidity focuses on safety via simplification, while ethical AI emphasizes fairness, transparency, and accountability.

Visual Analogy: Imagine ethical AI as a traffic cop ensuring fairness for all drivers, while artificial stupidity is like traffic barriers preventing entry to restricted areas. Together, they create a safer and more functional system.

How do companies decide between intelligent AI and artificial stupidity?

The decision depends on the complexity of tasks and the risks involved.

Example:

- Artificial Stupidity: A customer support chatbot designed to handle routine queries like order tracking. It redirects users to human agents for more complex questions, avoiding risky decisions.

- Intelligent AI: A system managing real-time medical imaging analysis, where advanced calculations are crucial. This level of complexity requires a smarter AI system but with stringent safeguards.

What industries benefit the most from artificial stupidity?

Visual Design Idea: Create an infographic highlighting industries that benefit, paired with real-world examples.

Tools to Use:

- Canva: For designing a simple, clean infographic.

- Piktochart: Ideal for creating industry-specific visuals with data representation.

Flow: Start with a headline like “Industries Adopting Artificial Stupidity for Safety.” Include icons for banking, healthcare, and retail, paired with short blurbs, e.g.:

- Banking: Fraud detection systems flag risks but don’t approve transactions.

- Healthcare: Appointment scheduling systems streamline processes without accessing medical records.

How does artificial stupidity protect against cyberattacks?

Visual Design Idea: A comparison diagram showing two AI systems—one over-intelligent and another intentionally simplified.

Tools to Use:

- Figma: Excellent for creating side-by-side process visuals.

- Lucidchart: For diagramming how limitations block cyberattack entry points.

Flow:

- Show an over-intelligent AI system with arrows representing vulnerabilities (e.g., data access, complex features).

- Contrast it with an artificially stupid AI system with fewer features and fewer attack surfaces.

- Add a callout: “Fewer features = fewer vulnerabilities.”

Are there downsides to using artificial stupidity?

Visual Design Idea: A pros and cons list with icons for clarity.

Tools to Use:

- Venngage: Perfect for structured lists with engaging visuals.

- Canva: Great for adding customizable icons and concise text formatting.

Flow:

- Pros: Enhanced security, reduced complexity, easier maintenance.

- Cons: Limited flexibility, requires human oversight for complex tasks.

Place an image of a simple device (e.g., a vending machine) next to a more advanced yet flawed system for illustration.

How do you ensure artificially stupid systems remain effective?

Visual Design Idea: A flowchart of the steps organizations should take to maintain security.

Tools to Use:

- Miro: Perfect for collaborative flowchart designs.

- Creately: For polished diagrams with branching logic.

Flow:

Start with “Risk Assessment” at the top, branching down to:

- Regular Updates

- Attack Simulations

- Limiting Features

End with “Secure, Reliable System.” Each step can include a brief description for clarity.

Is artificial stupidity just another form of ethical AI?

Visual Design Idea: A Venn diagram showing the overlap and distinctions between ethical AI and artificial stupidity.

Tools to Use:

- Visme: Great for Venn diagrams with a professional look.

- Microsoft PowerPoint: Simple yet effective for this purpose.

Flow:

- Ethical AI focuses on fairness and transparency.

- Artificial stupidity prioritizes safety and simplicity.

- In the overlap, emphasize “Responsible AI Design.”

How do companies decide between intelligent AI and artificial stupidity?

Visual Design Idea: A comparison table with icons and a clear layout.

Tools to Use:

- Google Sheets (styled for simplicity).

- Airtable: For dynamic and shareable comparison tables.

| Task Complexity | Recommended AI Type | Example |

|---|---|---|

| Low (Routine Tasks) | Artificial Stupidity | Self-checkout machines |

| High (Critical Tasks) | Intelligent AI with limits | Real-time medical diagnostics |

Resources

Articles and Reports

- “Artificial Intelligence and Cybersecurity: The Risks and Rewards”

A detailed exploration of how AI is reshaping cybersecurity, including examples of vulnerabilities in intelligent systems.

Source: CSO Online - “Microsoft Tay Chatbot Incident: Lessons Learned”

A case study analyzing the infamous Tay chatbot failure and its implications for AI safety.

Source: The Guardian - “The Role of Artificial Stupidity in Cybersecurity”

Explores how simplifying AI systems reduces risks while maintaining functionality.

Source: Cybersecurity Ventures

Tools for Building Artificial Stupidity

- Canva

Excellent for designing infographics and visual representations for presentations or articles.

Website: Canva - Miro

Ideal for creating flowcharts and visual maps for AI design strategies.

Website: Miro - IBM Watson AI OpenScale

Provides tools to monitor, manage, and simplify AI systems to meet safety standards.

Website: IBM Watson

Statistics and Research Data

- “AI and Cybersecurity: 2024 Trends and Projections”

Published annually, this report offers statistics on the rise of AI-driven cyberattacks and mitigation strategies.

Source: VPNRanks - “2023 Global AI Adoption Index”

A report analyzing AI adoption trends, including the rise of simplified AI systems for security purposes.

Source: McKinsey