Reinforcement learning (RL) has evolved rapidly, becoming one of the most exciting areas in artificial intelligence. It empowers machines to learn from the environment and make decisions with minimal human intervention. In this tutorial, we’ll dive deep into advanced reinforcement learning algorithms like PPO and A3C, explore how to create custom environments using OpenAI Gym and Unity, and understand how to merge deep learning with reinforcement learning for complex decision-making tasks.

Understanding Advanced Reinforcement Learning Algorithms

What Are PPO and A3C?

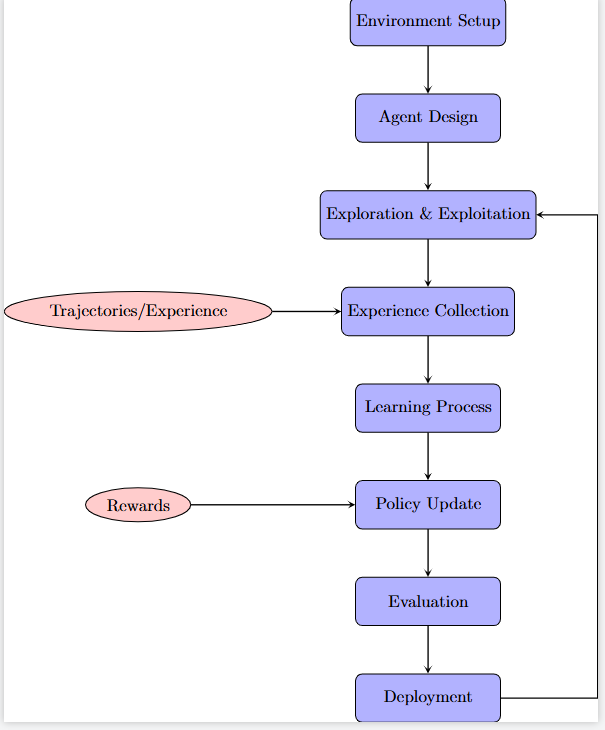

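Proximal Policy Optimization (PPO) and Asynchronous Advantage Actor-Critic (A3C) are widely used policy-gradient algorithms in reinforcement learning. PPO stabilizes training by limiting how far each update can move the policy, while A3C speeds up experience collection by running several workers in parallel. Both are effective in complex environments.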

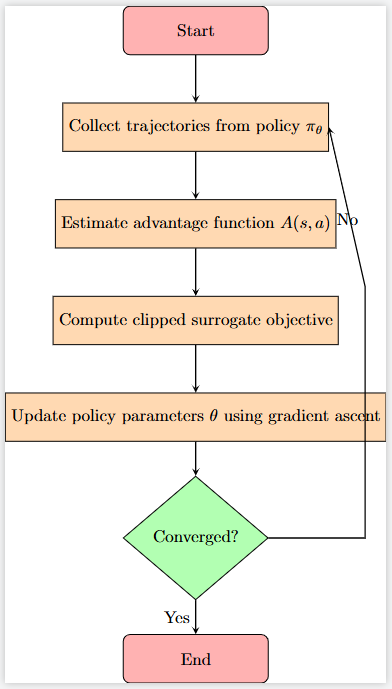

Step 1: Proximal Policy Optimization (PPO)

PPO is a policy gradient method. Unlike vanilla policy gradient methods, it constrains each update so that the new policy stays close to the old one, which avoids drastic changes that might degrade performance.

- Implement PPO:

- Start by installing necessary libraries like TensorFlow or PyTorch.

- Define the environment using OpenAI Gym.

- Create a policy network and a value function network.

- Use the PPO update rule to optimize your policy iteratively.

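- Key Concept: PPO clips the probability ratio between the new and old policies to a small interval around 1 (for example, 0.8 to 1.2), which restricts how much the policy can change at each update and keeps training stable.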
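The clipping idea can be sketched in a few lines of plain Python. This is a minimal illustration rather than a full PPO implementation: the function name `ppo_clip_objective` and the default `clip_eps=0.2` are assumptions made for the example, and in practice the same expression would be written with TensorFlow or PyTorch tensors so it can be differentiated.

```python
import math


def ppo_clip_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective over a batch (a quantity to maximize).

    new_log_probs / old_log_probs: log pi(a|s) under the new and old policy.
    advantages: advantage estimates for the same (state, action) pairs.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio pi_new(a|s) / pi_old(a|s).
        ratio = math.exp(new_lp - old_lp)
        # Restrict the ratio to [1 - eps, 1 + eps].
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Hypothetical batch: the first action became more likely, the second less likely.
old = [math.log(0.5), math.log(0.5)]
new = [math.log(0.75), math.log(0.25)]
adv = [1.0, -1.0]
print(ppo_clip_objective(new, old, adv))  # approximately 0.2
```

In the first sample the ratio of 1.5 is clipped to 1.2, so the policy gets no extra credit for moving further than the clip range allows.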

A flowchart depicting the PPO algorithm’s structure.

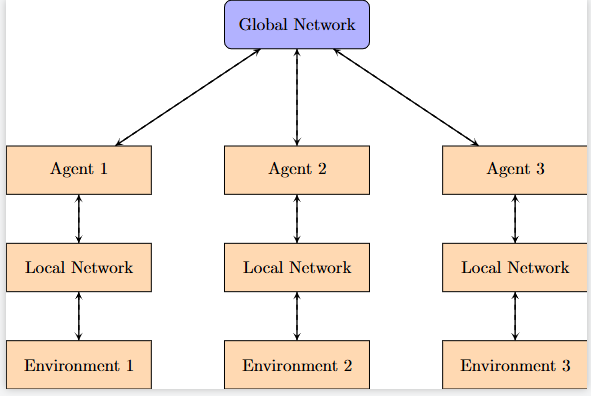

Step 2: Asynchronous Advantage Actor-Critic (A3C)

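A3C is a parallel algorithm that runs multiple worker agents, each interacting with its own copy of the environment, so that different parts of the state space are explored simultaneously. Each worker computes gradients with a local copy of the network, applies them asynchronously to a shared global network, and then refreshes its local copy from the global one.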
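The global/local synchronization pattern can be sketched with Python threads. This example only illustrates the asynchronous update scheme: the actor-critic loss is replaced by a toy quadratic loss, and names such as `run_workers`, `TARGET`, and the learning rate are assumptions made for the illustration.

```python
import threading

TARGET = 3.0  # optimum of the toy loss (w - TARGET) ** 2


def run_workers(num_workers=4, updates_per_worker=200, lr=0.05):
    """Train one shared parameter with several asynchronous workers."""
    global_params = {"w": 0.0}
    lock = threading.Lock()

    def worker():
        for _ in range(updates_per_worker):
            # Sync: refresh the local copy from the global parameters.
            with lock:
                local_w = global_params["w"]
            # Local work: gradient of the toy loss at the local copy.
            grad = 2.0 * (local_w - TARGET)
            # Asynchronous update: apply the local gradient to the global
            # parameters, which other workers may have changed meanwhile.
            with lock:
                global_params["w"] -= lr * grad

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return global_params["w"]


print(run_workers())  # ends close to TARGET
```

A real A3C worker would collect a short rollout from its own environment between the sync and the update, and the gradient would come from the actor and critic losses.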

- Implement A3C:

- Set up multiple agents in separate threads.

- Define an actor-critic architecture where the actor determines the action, and the critic evaluates it.

- Synchronize local networks with the global network after a certain number of steps.

- Key Concept: A3C leverages parallel computing to speed up training. Because the workers gather less correlated experience, learning also tends to be more stable without needing a replay buffer.
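The "advantage" that the critic supplies to the actor can be computed from a short rollout as the discounted return minus the critic's value estimate. The sketch below assumes n-step returns with a bootstrap value for the state after the last step. The function name `n_step_advantages` is hypothetical, and a discount of 0.5 is used in the example only to keep the numbers easy to check by hand.

```python
def n_step_advantages(rewards, values, bootstrap_value, gamma=0.99):
    """Return (returns, advantages) for one rollout segment.

    rewards[t]      : reward received after step t
    values[t]       : critic's estimate V(s_t)
    bootstrap_value : critic's estimate for the state after the last step
                      (use 0.0 if the episode terminated)
    """
    returns = []
    running = bootstrap_value
    # Work backwards: R_t = r_t + gamma * R_{t+1}.
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    # Advantage: how much better the outcome was than the critic expected.
    advantages = [ret - value for ret, value in zip(returns, values)]
    return returns, advantages


returns, advantages = n_step_advantages(
    rewards=[1.0, 1.0, 1.0], values=[0.5, 0.5, 0.5], bootstrap_value=0.0, gamma=0.5
)
print(returns)     # [1.75, 1.5, 1.0]
print(advantages)  # [1.25, 1.0, 0.5]
```

The actor's loss weights each action's log-probability by its advantage, while the critic is trained to move `values` toward `returns`.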

Diagram of A3C with multiple agents training in parallel.

When to Use PPO vs. A3C

- PPO is more stable and suitable for environments where careful updates are crucial.

- A3C excels in scenarios where faster training is necessary, especially in large-scale environments.

Building Simulated Environments for Reinforcement Learning

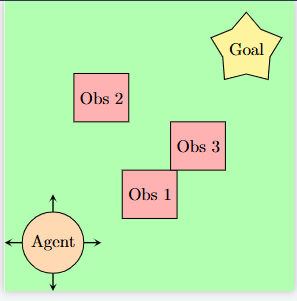

Step 3: Creating Custom Environments with OpenAI Gym

OpenAI Gym provides a toolkit for developing and comparing reinforcement learning algorithms. Creating a custom environment is crucial for tasks that standard environments don’t cover.

- Define the environment:

- Inherit from `gym.Env`.

- Implement key methods like `__init__`, `reset`, and `step`.

- Customize the reward structure, state space, and action space to match your specific problem.

- Testing:

- Use simple agents to interact with your environment.

- Tune the environment to ensure it is challenging yet solvable.
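The steps above can be sketched with a small, dependency-free class that mirrors the `reset`/`step` interface. Everything here is an assumption made for illustration: `CorridorEnv` and `run_random_episode` are hypothetical, the class does not import Gym so that the example runs anywhere, and the return shapes follow the newer Gym convention (`reset` returns `(observation, info)`, `step` returns a 5-tuple), whereas older Gym versions return a single `done` flag. In a real project the class would inherit from `gym.Env` and declare its observation and action spaces.

```python
import random


class CorridorEnv:
    """Hypothetical 1-D corridor: start at cell 0, reach the last cell."""

    def __init__(self, size=5, max_steps=50):
        self.size = size
        self.max_steps = max_steps
        self.n_actions = 2  # 0 = move left, 1 = move right
        self.position = 0
        self.steps = 0

    def reset(self):
        self.position = 0
        self.steps = 0
        return self.position, {}

    def step(self, action):
        move = 1 if action == 1 else -1
        # Walls at both ends keep the agent inside the corridor.
        self.position = min(max(self.position + move, 0), self.size - 1)
        self.steps += 1
        terminated = self.position == self.size - 1
        truncated = (not terminated) and self.steps >= self.max_steps
        # Reward structure: +1 at the goal, small penalty per step otherwise.
        reward = 1.0 if terminated else -0.01
        return self.position, reward, terminated, truncated, {}


def run_random_episode(env, seed=0):
    """Simple test agent: pick uniformly random actions until the episode ends."""
    rng = random.Random(seed)
    env.reset()
    total_reward, done = 0.0, False
    while not done:
        action = rng.randrange(env.n_actions)
        _, reward, terminated, truncated, _ = env.step(action)
        total_reward += reward
        done = terminated or truncated
    return total_reward, env.steps


env = CorridorEnv(size=5, max_steps=50)
total_reward, steps = run_random_episode(env, seed=0)
print(f"random agent: return={total_reward:.2f} in {steps} steps")
```

If a random agent almost never reaches the goal, or reaches it trivially, that is a hint to adjust the corridor size, step limit, or reward values.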

Step 4: Leveraging Unity for Complex Environments

Unity ML-Agents Toolkit allows you to create complex, visually rich environments. Unity is particularly useful for simulations requiring intricate physics or 3D modeling.

- Install Unity ML-Agents:

- Download Unity and the ML-Agents Toolkit.

- Set up a basic environment in Unity and integrate the ML-Agents package.

- Designing a Custom Environment:

- Create your scene using Unity’s editor.

- Attach an agent script that interacts with the environment.

- Use Unity’s physics engine to simulate realistic scenarios.

- Training with Unity:

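- Export the Unity environment as a `*.exe` or `*.app` file.

- Use PPO or A3C to train an agent within this environment. ML-Agents ships with a PPO trainer; A3C would require your own training loop built on its Python API.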

A Unity scene used for reinforcement learning with ML-Agents

Combining Deep Learning with Reinforcement Learning

Step 5: Introduction to Deep Reinforcement Learning

Deep reinforcement learning (DRL) merges deep learning’s ability to handle high-dimensional input spaces with RL’s decision-making capabilities.

- Why Combine Deep Learning and RL?

- DRL is essential for tasks involving visual inputs (like raw pixels) or environments with complex dynamics.

- Algorithms like DQN (Deep Q-Network) and DDPG (Deep Deterministic Policy Gradient) are designed to work with deep neural networks, enabling agents to learn from high-dimensional data.

An overview of the deep reinforcement learning workflow

Step 6: Implementing Deep Reinforcement Learning

Deep Q-Network (DQN)

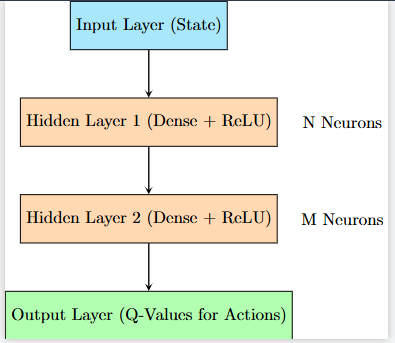

DQN is a value-based method where a neural network approximates the Q-value function.

- Implementing DQN:

- Create a neural network to predict Q-values for each action given a state.

- Use an experience replay buffer to store and sample experiences for training.

- Train the network to minimize the loss between its predicted Q-values and targets computed from the Bellman equation, typically using a separate, periodically updated target network.
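The replay buffer and the Bellman target can be sketched without a deep learning library. In this minimal example the neural network is replaced by `q_values_fn`, a hypothetical stand-in that returns a list of Q-values for a state. The names `ReplayBuffer`, `td_targets`, and `squared_td_loss` are likewise assumptions made for the illustration.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest entries are dropped first

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling breaks the correlation between consecutive steps.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def td_targets(batch, q_values_fn, gamma=0.99):
    """Bellman targets: y = r for terminal steps, else r + gamma * max_a' Q(s', a').

    In a full DQN, q_values_fn here would usually be the target network.
    """
    targets = []
    for _, _, reward, next_state, done in batch:
        if done:
            targets.append(reward)
        else:
            targets.append(reward + gamma * max(q_values_fn(next_state)))
    return targets


def squared_td_loss(batch, q_values_fn, targets):
    """Mean squared error between Q(s, a) and the targets."""
    errors = [
        (q_values_fn(state)[action] - target) ** 2
        for (state, action, _, _, _), target in zip(batch, targets)
    ]
    return sum(errors) / len(errors)


# Hypothetical two-state problem with a lookup table standing in for the network.
q_table = {0: [1.0, 2.0], 1: [0.0, 4.0]}
batch = [(0, 1, 1.0, 1, False), (1, 0, 2.0, 0, True)]
targets = td_targets(batch, lambda s: q_table[s], gamma=0.5)
print(targets)                                                # [3.0, 2.0]
print(squared_td_loss(batch, lambda s: q_table[s], targets))  # 2.5
```

With a real network, the loss would be minimized by gradient descent on the network weights while the targets are treated as constants.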

The architecture of a Deep Q-Network (DQN)

Deep Deterministic Policy Gradient (DDPG)

DDPG is an actor-critic method suitable for continuous action spaces. It uses two neural networks: one for the policy (actor) and another for the value function (critic).

- Implementing DDPG:

- Create actor and critic networks.

- Use target networks (slowly updated copies of the actor and critic) to compute the target Q-values, which stabilizes training.

- Combine with techniques like Hindsight Experience Replay (HER) to improve sample efficiency, particularly in goal-based tasks with sparse rewards.
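The target-network step can be sketched as a soft (Polyak) update plus the critic's target value. This is a minimal illustration under stated assumptions: parameters are represented as a plain dictionary of floats instead of network weights, `target_actor` and `target_critic` are hypothetical callables standing in for the target networks, and the function names are made up for the example.

```python
def soft_update(target_params, online_params, tau=0.005):
    """In place: target <- tau * online + (1 - tau) * target."""
    for name, online_value in online_params.items():
        target_params[name] = tau * online_value + (1.0 - tau) * target_params[name]


def ddpg_target(reward, next_state, done, target_actor, target_critic, gamma=0.99):
    """Critic target: y = r + gamma * Q'(s', mu'(s')), or y = r at a terminal step."""
    if done:
        return reward
    next_action = target_actor(next_state)  # deterministic action from the target actor
    return reward + gamma * target_critic(next_state, next_action)


# A large tau is used here only to make the effect visible.
target = {"w": 0.0}
online = {"w": 1.0}
soft_update(target, online, tau=0.5)
print(target["w"])  # 0.5
soft_update(target, online, tau=0.5)
print(target["w"])  # 0.75

# Hypothetical target networks: actor doubles the state, critic sums state and action.
y = ddpg_target(
    reward=1.0, next_state=1.0, done=False,
    target_actor=lambda s: 2.0 * s,
    target_critic=lambda s, a: s + a,
    gamma=0.5,
)
print(y)  # 2.5
```

A small `tau` makes the target networks trail the online networks slowly, so the targets the critic is trained toward change gradually.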

Example of DDPG applied to a continuous action space problem

Conclusion: Putting It All Together

Mastering advanced reinforcement learning involves understanding cutting-edge algorithms, building custom environments, and integrating deep learning techniques. Start by experimenting with PPO and A3C in familiar environments, then progress to creating your own challenges using OpenAI Gym and Unity. Finally, leverage deep reinforcement learning to tackle complex, high-dimensional problems.

With these tools and techniques, you’re well on your way to building sophisticated RL models that can handle anything from simple tasks to advanced simulations.

Ready to take your reinforcement learning projects to the next level? Explore more advanced techniques and dive deeper into each algorithm.

Resources and further reading are listed below.

Resources

- OpenAI Spinning Up in Deep RL – A great resource for understanding deep reinforcement learning concepts and algorithms.

- DeepMind’s Reinforcement Learning Course – Comprehensive learning materials from one of the leading AI research labs.

- Reinforcement Learning: An Introduction by Sutton and Barto – The foundational textbook on reinforcement learning, available online for free.

- Berkeley CS285: Deep Reinforcement Learning – A graduate-level course that covers advanced topics in reinforcement learning.

- Coursera’s Advanced Machine Learning Specialization – Includes a course on advanced reinforcement learning techniques.